Professor Chenhao Tan of the University of Chicago gave a talk at the Center for Human-Computer Interaction + Design on Thursday that focused on a new way to improve decision-making: using the power of explainable AI.

Tan isn’t advocating for a world where AI makes all our decisions. Instead, he said he uses AI to enhance human decision-making, keeping humans at the center.

This approach to artificial intelligence is called human-centered AI, and it’s the focus of work by Tan and his team at the Chicago Human + AI Lab.

“I want to create AI where the goal is to help humans,” Tan said. “We need to take into account human psychology, human goals and human values in the process of building AI.”

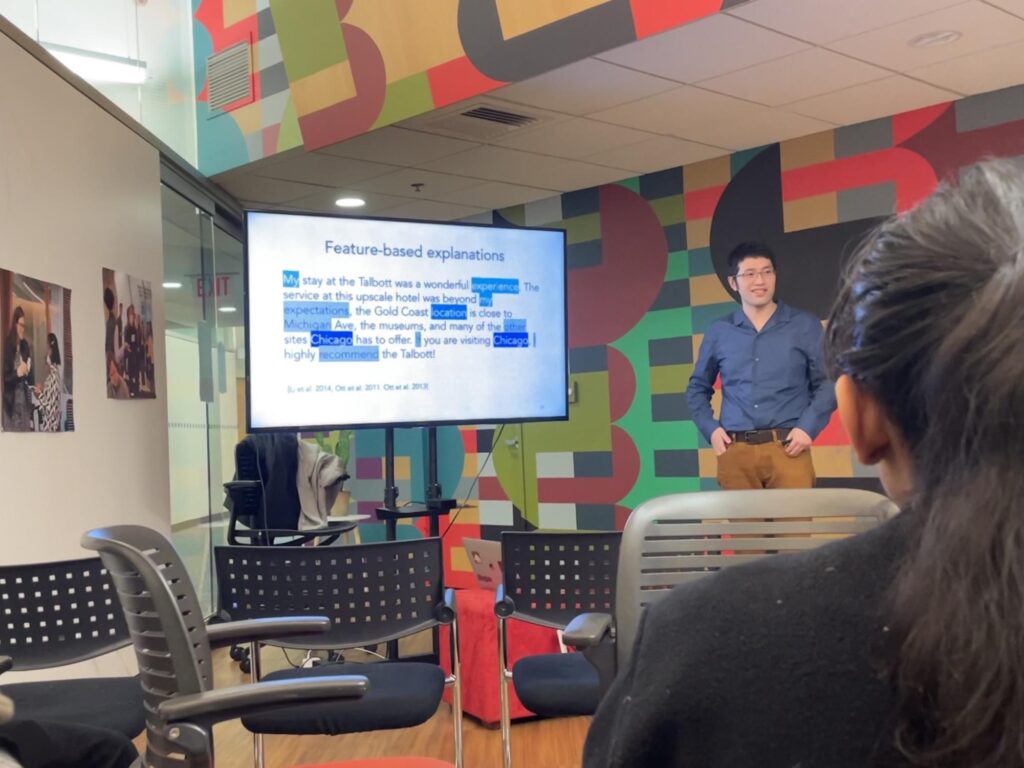

This was the central theme of Tan’s talk, titled “Towards Human-Centric AI: How to Create Useful Explanations for Human-AI Decision Making.” About 25 people gathered in the Frances Searle Building to hear Tan present his team’s research at the Technology and Social Behavior Ph.D. Program’s winter colloquium.

Tan began his presentation by drawing the connection between human decision-making and AI’s predictive processes.

“Decisions can be thought of as prediction problems,” Tan said.

He gave the example of cancer diagnosis as a prediction problem. Tan explained that when a doctor decides whether a medical scan shows cancer, they are actually predicting whether it is cancer based on their past experience.

According to Microsoft, this is exactly what predictive AI models are trained to do: analyze data to make predictions, or decisions.

Tan said that understanding the algorithmic rationale behind an AI model’s output, called an “explanation,” can help people learn from AI’s reasoning, spot flaws in AI models and become better decision-makers themselves. However, these explanations must build on knowledge of human intuition to truly help people make better decisions, he said.

Tan stressed that AI research needs to pay more attention to people. “There is not enough work to understand humans, and understanding humans is a prerequisite for building human-based AI.”

The TSB program offers a joint Ph.D. in computer science and communication. According to its website, the program emphasizes understanding the impact of technology in a social context, and students begin engaging in research in their first year.

Sachita Nishal, a fourth-year Ph.D. candidate in technology and social behavior who designs AI systems for journalists, said the talk was an opportunity to meet and learn from people in different fields.

“This conversation makes a strong case for trusting human intuition when generating details, and I think that’s a really important path,” Nishal said. “When designing for (journalists), it’s important to try to understand what their intuitions are about what’s newsworthy and important to cover.”

Communication Studies Prof. Nicholas Diakopoulos, director of graduate studies for the TSB program, said Tan’s interdisciplinary approach to human-centered AI was part of the reason the program invited him to speak at the colloquium.

“I think (human-computer interaction) and the intersection with AI is a really, really interesting space, and a lot of people want to learn about it,” Diakopoulos said.

