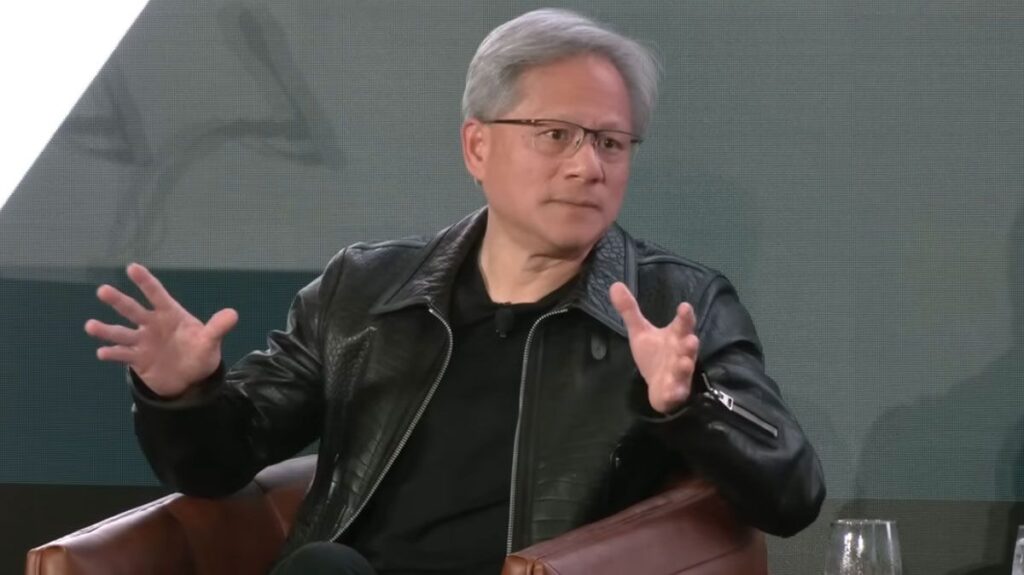

Nvidia CEO Jensen Huang recently took the stage to claim that Nvidia’s GPUs are “so good that even when the competitor’s chips are free, it’s not cheap enough.” Huang further explained that the price of Nvidia’s GPUs isn’t really significant in terms of the total cost of ownership (TCO) of an AI data center. The impressive scale of Nvidia’s achievements in powering the booming AI industry is hard to deny: the company recently became the world’s third most valuable company, thanks largely to its AI-accelerating GPUs. Still, Jensen’s comments are sure to be controversial, as he dismisses a whole constellation of rivals, such as AMD, Intel, and a range of competitors building ASICs and other types of custom AI silicon.

Starting at 22:32 of the YouTube recording, John Shoven, former Trione Director of SIEPR and Charles R. Schwab Professor Emeritus of Economics at Stanford University, asks: “You build completely sophisticated chips. Is it possible that you’re going to face competition that claims to be good enough, not as good as Nvidia, but good enough and a lot cheaper? Is that a risk?”

Jensen Huang began his answer by unpacking his tiny violin. “We have more competition than anyone on the planet,” the CEO claimed. He told Shoven that even Nvidia’s customers are, in some respects, its rivals. Huang also pointed out that Nvidia actively supports customers who are designing alternative AI processors, to the extent of telling them which upcoming Nvidia chips are on the roadmap.

That sounds like an unusual way to do business, but Huang’s follow-up claim that Nvidia operates as a “completely open book” while working with almost everyone in the industry may be harder to believe. Remember that Nvidia was recently accused of running a GPU cartel, with customers allegedly afraid to talk to rival GPU and AI accelerator makers for fear of having their orders delayed. An industry consortium has also formed in an effort to break the dominance of the company’s CUDA programming model.

Returning to the Stanford interview, Jensen Huang outlined what he sees as Nvidia’s currently unassailable selling points. While a chip can be designed to excel at one specific algorithm, Nvidia’s GPUs are programmable, the CEO said. What’s more, the Nvidia platform is “a benchmark at every cloud computing company.” A typical data center that wants to serve a wide range of users, from financial services to manufacturing, will therefore be drawn to Nvidia hardware.

Free is not cheap enough to compete with Nvidia.

Huang also drew a distinction that may undercut arguments focused on GPU pricing: the people who buy and sell chips think about the price of chips, whereas the people who run data centers think about the cost of operations.

Of course, data center operators are keenly aware of TCO, and in Huang’s view Nvidia’s claimed advantages in time to deployment, performance, usability, and flexibility add up to hardware that is “so good that even when the competitor’s chips are free, it’s not cheap enough.”

Concluding his answer to Shoven, Nvidia’s CEO emphasized that, having achieved what he describes as an unbeatable TCO, Nvidia’s goal is to stay far ahead. Huang reminded summit attendees that Nvidia’s position demands a great deal of hard work and innovation (there’s no luck involved) and that nothing is taken for granted.

Naturally, Nvidia’s competitors will beg to differ with Huang’s statements, but in the current AI world Nvidia is arguably the 800lb gorilla, which puts the onus on its competitors to disprove Jensen’s claims.