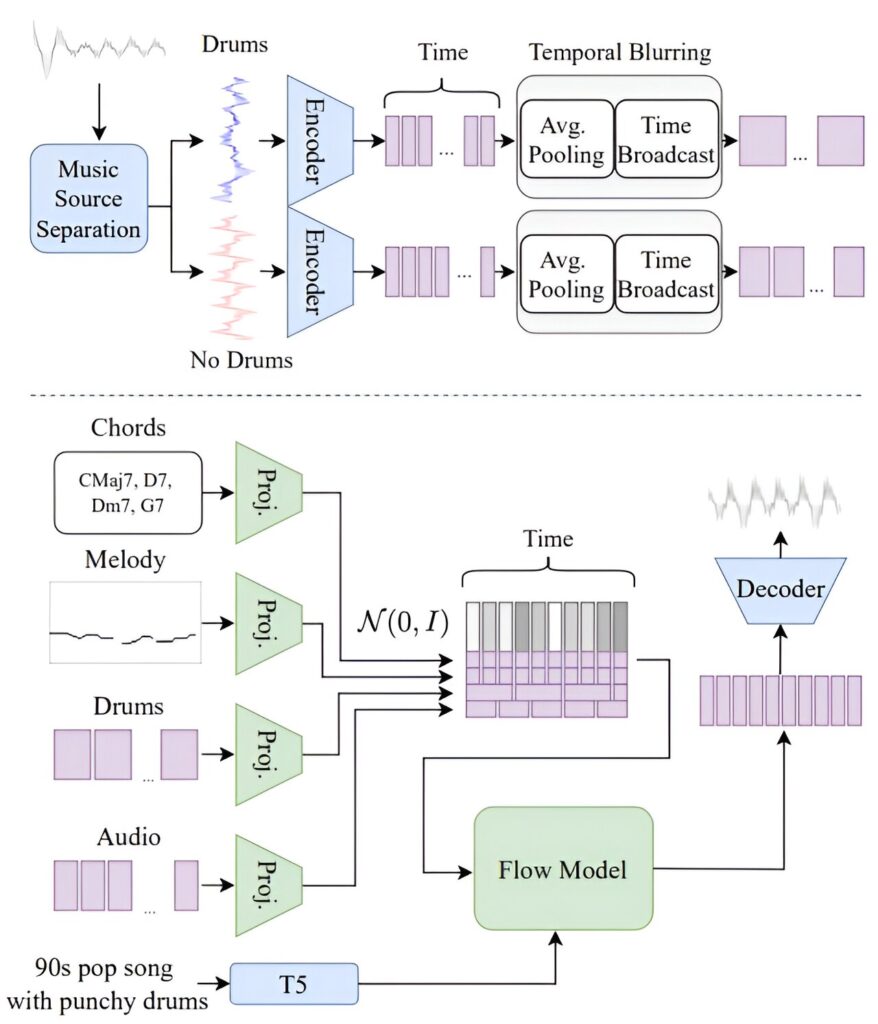

Top: the temporal blurring process, showing source separation, pooling and broadcasting. Bottom: a high-level presentation of JASCO. Conditions are first projected to a low-dimensional representation and then concatenated along the channel dimension. Green blocks contain learnable parameters; blue blocks are frozen. Credit: arXiv (2024). DOI: 10.48550/arxiv.2406.10970

A team of AI researchers with Meta's Fundamental AI Research (FAIR) group is making four new AI models publicly available to researchers and developers building new applications. The team has published a paper on the arXiv preprint server describing one of the new models, JASCO, and how it can be used.

As interest in AI applications grows, major players in the field are building AI models that other organizations can use to add AI capabilities to their own applications. In this new effort, the Meta team has made four new models available: JASCO, AudioSeal and two versions of Chameleon.

JASCO is designed to accept a variety of audio inputs and produce an improved sound. The team says the model lets users adjust characteristics such as the sound of the drums, the guitars or even the melody to craft a tune. The model can also accept text input and will use it to flavor a tune.

An example would be asking the model to produce a bluesy tune with lots of bass and drums. That request could then be followed by similar descriptions for other instruments. Meta's team also compared JASCO with other systems designed to do much the same thing and found that JASCO outperformed them on three major metrics.

AudioSeal can be used to add watermarks to speech generated by an AI app, making the results easily recognizable as artificially generated. The team notes that it can also watermark segments of AI-generated speech that have been inserted into real speech, and that it will be released with a commercial license.

The two Chameleon models both convert text to visual imagery and are being released with limited capabilities. Both versions, 7B and 34B, require the models to understand text as well as images, the team notes. Because of this, they can also work in reverse, for example generating captions for images.

More information:

Or Tal et al, Joint Audio and Symbolic Conditioning for Temporally Controlled Text-to-Music Generation, arXiv (2024). DOI: 10.48550/arxiv.2406.10970

Demo page: pages.cs.huji.ac.il/adiyoss-lab/JASCO/

Journal information: arXiv

© 2024 ScienceX Network

Citation: Meta releases four new publicly available AI models for developer use (2024, July 3), retrieved July 3, 2024 from https://techxplore.com/news/2024-07-meta-ai.html

This document is subject to copyright. No part may be reproduced without written permission, except for any fair dealing for the purpose of private study or research. The content is provided for informational purposes only.