If you have used Keras to build neural networks, you are no doubt familiar with the Sequential API, which represents models as a linear stack of layers. The Functional API gives you additional options: using separate input layers, you can combine text input with tabular data; using multiple outputs, you can perform regression and classification at the same time. Furthermore, you can reuse layers within and between models.

With TensorFlow eager execution, you gain even more flexibility. Using custom models, you define the forward pass through the model with complete freedom. This means that many architectures become much easier to implement, including generative adversarial networks, neural style transfer, and various forms of sequence-to-sequence models. In addition, because you have direct access to concrete values rather than symbolic tensors, model development and debugging are greatly sped up.

How does it work?

In eager execution, operations are not compiled into a graph, but directly defined in your R code. They return values, not symbolic handles to nodes in a computational graph, meaning you don't need access to a TensorFlow session to evaluate them.
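For instance, a matrix multiplication is evaluated immediately. The sketch below assumes TensorFlow 2.x accessed through the tensorflow R package, where eager execution is the default (under TensorFlow 1.x, the package's `tfe_enable_eager_execution()` had to be called first). The two input matrices are our own illustrative choice, picked so that their product is the 2 x 2 tensor printed below.

```r
library(tensorflow)

# Under TensorFlow 1.x only, eager mode had to be enabled explicitly:
# tfe_enable_eager_execution()

# R integer matrices, filled column-wise; they become int32 tensors
x <- matrix(1:8, nrow = 2, ncol = 4)  # 2 x 4
y <- matrix(1:8, nrow = 4, ncol = 2)  # 4 x 2

# The op runs right away and returns a concrete tensor; no session required
res <- tf$matmul(x, y)
print(res)
```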

```
tf.Tensor(
[[ 50 114]
 [ 60 140]], shape=(2, 2), dtype=int32)
```

Eager execution, recent though it is, is already supported in the current CRAN releases of keras and tensorflow. The eager execution guide describes the workflow in detail.

Here's a quick outline: you define a model, an optimizer, and a loss function. Data is streamed via tfdatasets, including any preprocessing such as image resizing. Then, model training is just a loop over epochs, giving you complete freedom over when (and whether) to execute any actions.
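The outline above can be condensed into a small, self-contained sketch. It assumes TensorFlow 2.x via the tensorflow R package and, to stay short, swaps in a hypothetical one-weight linear model for a Keras model, in-memory tensors for the tfdatasets pipeline, and a hand-written gradient descent update for the optimizer. The names `mse`, `train_step`, and `learning_rate` are ours.

```r
library(tensorflow)

# Hypothetical toy data, held in memory instead of a tfdatasets pipeline:
# y = 3 * x + 2 plus a little noise
set.seed(1)
x_r <- matrix(runif(100), ncol = 1)
y_r <- 3 * x_r + 2 + rnorm(100, sd = 0.05)
x <- tf$constant(x_r, dtype = tf$float32)
y <- tf$constant(y_r, dtype = tf$float32)

# Hypothetical "model": one weight and one bias, computing inputs * w + b
w <- tf$Variable(tf$zeros(shape(1L)))
b <- tf$Variable(tf$zeros(shape(1L)))
model <- function(inputs) tf$add(tf$multiply(inputs, w), b)

# Mean squared error
mse <- function(y_true, y_pred) {
  tf$reduce_mean(tf$square(tf$subtract(y_true, y_pred)))
}

learning_rate <- 0.5

# One training step; wrapping it in a function keeps tape and loss local
train_step <- function() {
  with(tf$GradientTape() %as% tape, {
    preds <- model(x)
    loss <- mse(y, preds)
  })
  grads <- tape$gradient(loss, list(w, b))
  # Plain gradient descent, written by hand in place of a Keras optimizer
  w$assign_sub(tf$multiply(learning_rate, grads[[1]]))
  b$assign_sub(tf$multiply(learning_rate, grads[[2]]))
  loss
}

# Training is an ordinary R loop over epochs
for (epoch in 1:200) {
  loss <- train_step()
  # Values are concrete, so they can be printed at any point
  if (epoch %% 50 == 0) print(loss)
}
```

Because the loop is plain R, deciding when to log, evaluate, or stop early is just ordinary control flow.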

How does backpropagation work in this setup? The forward pass is recorded by a GradientTape, and during the backward pass we explicitly calculate gradients of the loss with respect to the model's weights. These weights are then adjusted by the optimizer.

```r
with(tf$GradientTape() %as% tape, {
  # run model on current batch
  preds <- model(x)
  # compute the loss
  loss <- mse_loss(y, preds, x)
})

# get gradients of loss w.r.t. model weights
gradients <- tape$gradient(loss, model$variables)

# update model weights
optimizer$apply_gradients(
  purrr::transpose(list(gradients, model$variables)),
  global_step = tf$train$get_or_create_global_step()
)
```

See the eager execution guide for a complete example. Here, we want to answer the question: why are we so excited about it? At least three things come to mind:

- Things that used to be complicated become very easy to accomplish.

- Models are easy to develop and easy to debug.

- There is a much better match between our mental models and the code we write.

We'll illustrate these points using a set of eager execution case studies that have recently been published on this blog.

Complicated things made simple

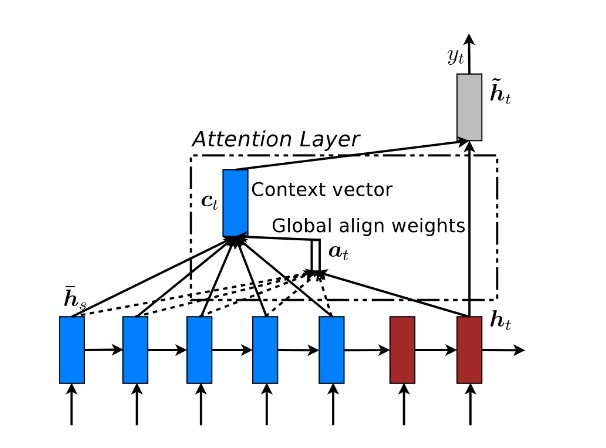

Attention mechanisms are a good example of architectures that become much easier to define with eager execution. Attention is an important ingredient of sequence-to-sequence models, for example (but not only) in machine translation.

When using LSTMs on both the encoding and the decoding sides, the decoder, being a recurrent layer, knows about the sequence it has generated so far. It also (in all but the simplest models) has access to the complete input sequence. But where in the input sequence is the piece of information it needs to generate the next output token? It is this question that attention is meant to address.

Now consider implementing this in code. Each time it is called to produce a new token, the decoder needs to get current input from the attention mechanism. This means we can't just squeeze an attention layer between the encoder and the decoder LSTM. Before the advent of eager execution, a solution would have been to implement this in low-level TensorFlow code. With eager execution and custom models, we can just use Keras.
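A single attention step can be sketched with plain tensor operations. This is a minimal sketch assuming TensorFlow 2.x via the tensorflow R package; the tensors are random stand-ins for real encoder outputs and a decoder state, and the dot-product scoring is our own choice (the case studies may score differently, for example with an additive scoring network).

```r
library(tensorflow)

batch_size <- 2L
src_len <- 5L
units <- 8L

# Hypothetical encoder outputs for the whole input sequence: (batch, src_len, units)
enc_output <- tf$random$normal(shape(batch_size, src_len, units))
# Hypothetical decoder hidden state at the current step: (batch, units)
dec_hidden <- tf$random$normal(shape(batch_size, units))

# Score every input position against the current decoder state (dot product)
query <- tf$expand_dims(dec_hidden, axis = 1L)              # (batch, 1, units)
scores <- tf$matmul(query, enc_output, transpose_b = TRUE)   # (batch, 1, src_len)

# Turn scores into weights that sum to 1 over the input positions
attention_weights <- tf$nn$softmax(scores, axis = -1L)      # (batch, 1, src_len)

# Weighted sum of encoder outputs: the context vector for this decoding step
context <- tf$squeeze(tf$matmul(attention_weights, enc_output), axis = 1L)  # (batch, units)

print(attention_weights)
```

Since the context vector depends on the current decoder state, this computation has to run inside the decoding loop, once per generated token, rather than as a fixed layer between encoder and decoder.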

Attention is not just relevant to sequence-to-sequence problems, though. In image captioning, the output is a sequence, while the input is a complete image. When generating a caption, attention is used to focus on the parts of the image that are relevant to different time steps in the text-generating process.

Easy inspection

In terms of debuggability, just using custom models (without eager execution) already simplifies things. If we have a custom model like simple_dot from the recent embeddings post and are unsure whether we've got the shapes right, we can simply add logging statements to its call method.

With eager execution, things get even better: we can print the tensors' values themselves.
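As a small illustration, assuming TensorFlow 2.x via the tensorflow R package: hypothetical user and movie embedding matrices are looked up and combined by a dot product, in the spirit of (but not copied from) simple_dot. All sizes, names, and indices are made up. Because execution is eager, shapes and values can be printed directly.

```r
library(tensorflow)

# Hypothetical embedding matrices: 10 users and 20 movies, 4 dimensions each
user_embedding <- tf$Variable(tf$random$normal(shape(10L, 4L)))
movie_embedding <- tf$Variable(tf$random$normal(shape(20L, 4L)))

# A hypothetical batch of three (user, movie) pairs; indices are 0-based
users <- tf$constant(c(0L, 3L, 7L))
movies <- tf$constant(c(1L, 5L, 19L))

u <- tf$nn$embedding_lookup(user_embedding, users)
m <- tf$nn$embedding_lookup(movie_embedding, movies)

# Eager tensors carry concrete shapes and values, so printing shows both
print(u$shape)
print(u)

# One dot-product score per (user, movie) pair
scores <- tf$reduce_sum(tf$multiply(u, m), axis = 1L)
print(scores)
```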

But the convenience does not end there. In the training loop we showed above, we can obtain losses, model weights, and gradients just by printing them. For example, add a line after the call to tape$gradient to print the gradients for all layers as a list.

```r
gradients <- tape$gradient(loss, model$variables)
print(gradients)
```

Matching the mental model

If you've read Deep Learning with R, you know that it's possible to program less straightforward workflows, such as those required for training GANs or doing neural style transfer, using the Keras functional API. However, graph code does not make it easy to keep track of where you are in the workflow.

Now compare the example from the generating digits with GANs post. Generator and discriminator each get set up as actors in a drama:

```r
generator <- function(name = NULL) {
  keras_model_custom(name = name, function(self) {
    # ...
  })
}

discriminator <- function(name = NULL) {
  keras_model_custom(name = name, function(self) {
    # ...
  })
}
```

Each is equipped with its own loss function and optimizer.

Then, the duel starts. The training loop is just a succession of generator actions, discriminator actions, and backpropagation through both models. No need to worry about freezing or unfreezing weights in the appropriate places.

```r
with(tf$GradientTape() %as% gen_tape, { with(tf$GradientTape() %as% disc_tape, {
  # generator action
  generated_images <- generator(# ...
  # discriminator assessments
  disc_real_output <- discriminator(# ...
  disc_generated_output <- discriminator(# ...
  # generator loss
  gen_loss <- generator_loss(# ...
  # discriminator loss
  disc_loss <- discriminator_loss(# ...
})})

# calculate generator gradients
gradients_of_generator <- gen_tape$gradient(#...
# calculate discriminator gradients
gradients_of_discriminator <- disc_tape$gradient(# ...

# apply generator gradients to model weights
generator_optimizer$apply_gradients(# ...
# apply discriminator gradients to model weights
discriminator_optimizer$apply_gradients(# ...
```

The code is so close to how we mentally picture the situation that hardly any memorization is needed to keep the overall design in mind.
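To see the two-tape pattern run end to end, here is a minimal sketch assuming TensorFlow 2.x via the tensorflow R package. The DCGAN case study uses convolutional Keras custom models and Keras optimizers; here, as our own simplification, both "models" are hypothetical affine functions on 1-D toy data, and updates are plain gradient descent (`sgd_step` is a hypothetical helper). The loop only illustrates control flow; we make no claim that this toy setup learns the target distribution well.

```r
library(tensorflow)

tf$random$set_seed(42L)

# Hypothetical toy generator: an affine map noise -> noise * g_scale + g_shift
g_scale <- tf$Variable(tf$ones(shape(1L)))
g_shift <- tf$Variable(tf$zeros(shape(1L)))
generator <- function(noise) tf$add(tf$multiply(noise, g_scale), g_shift)

# Hypothetical toy discriminator: logistic regression that returns logits
d_weight <- tf$Variable(tf$zeros(shape(1L)))
d_bias <- tf$Variable(tf$zeros(shape(1L)))
discriminator <- function(x) tf$add(tf$multiply(x, d_weight), d_bias)

# Binary cross-entropy computed from logits
bce <- function(labels, logits) {
  tf$reduce_mean(tf$nn$sigmoid_cross_entropy_with_logits(labels = labels, logits = logits))
}
generator_loss <- function(fake) bce(tf$ones_like(fake), fake)
discriminator_loss <- function(real, fake) {
  tf$add(bce(tf$ones_like(real), real), bce(tf$zeros_like(fake), fake))
}

# Plain gradient descent standing in for the two optimizers
sgd_step <- function(vars, grads, lr = 0.05) {
  for (i in seq_along(vars)) vars[[i]]$assign_sub(tf$multiply(lr, grads[[i]]))
}

gen_vars <- list(g_scale, g_shift)
disc_vars <- list(d_weight, d_bias)

train_step <- function() {
  # Hypothetical "real" data from N(4, 1); generator input noise from N(0, 1)
  real <- tf$random$normal(shape(64L, 1L), mean = 4)
  noise <- tf$random$normal(shape(64L, 1L))

  # Both tapes record the same forward pass
  with(tf$GradientTape() %as% gen_tape, { with(tf$GradientTape() %as% disc_tape, {
    generated <- generator(noise)
    disc_real_output <- discriminator(real)
    disc_generated_output <- discriminator(generated)
    gen_loss <- generator_loss(disc_generated_output)
    disc_loss <- discriminator_loss(disc_real_output, disc_generated_output)
  })})

  # Each model gets gradients of its own loss w.r.t. its own variables only,
  # so nothing has to be frozen or unfrozen
  gradients_of_generator <- gen_tape$gradient(gen_loss, gen_vars)
  gradients_of_discriminator <- disc_tape$gradient(disc_loss, disc_vars)

  sgd_step(gen_vars, gradients_of_generator)
  sgd_step(disc_vars, gradients_of_discriminator)
  list(gen_loss = gen_loss, disc_loss = disc_loss)
}

for (step in 1:100) losses <- train_step()
print(losses)
```

Wrapping each step in a function gives every iteration fresh local tapes, losses, and gradients.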

Relatedly, this way of programming lends itself to extensive modularization. This is illustrated by another post on GANs that includes U-Net-like downsampling and upsampling steps.

Here, the downsampling and upsampling layers are each factored out into their own models

```r
downsample <- function(# ...
  keras_model_custom(name = NULL, function(self) { # ...
```

such that they can be composed readably in the generator's call method:

```r
# model fields
self$down1 <- downsample(# ...
self$down2 <- downsample(# ...
# ...
# ...

# call method
function(x, mask = NULL, training = TRUE) {
  x1 <- x %>% self$down1(training = training)
  x2 <- self$down2(x1, training = training)
  # ...
  # ...
```

Wrapping up

Eager execution is still a very recent feature and under development. We are convinced that many interesting use cases will still turn up as this paradigm gets adopted more widely among deep learning practitioners.

However, we already have a list of use cases illustrating the vast options, gains in usability, modularization, and elegance offered by eager execution code.

For quick reference, these cover:

- Image captioning with attention. This post does not re-explain attention in detail; instead, it carries the concept over to spatial attention applied to image regions.

- Generating digits with convolutional generative adversarial networks (DCGANs). This post introduces using two custom models, each with its own loss function and optimizer, and having them go through forward and backward propagation in sync. It is perhaps the most impressive example of how eager execution simplifies coding by better aligning it with our mental model of the situation.

- Image-to-image translation with pix2pix is another application of generative adversarial networks, but uses a more complex architecture based on U-Net-like downsampling and upsampling. It nicely demonstrates how eager execution allows for modular coding, rendering the final program much more readable.

- Neural style transfer. Finally, this post recasts the style transfer problem in eager style, again resulting in readable, concise code.

When diving into these applications, it is a good idea to also refer to the eager execution guide so you don't lose sight of the forest for the trees.

We're excited about the use cases our readers will come up with!