This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the changelog.

Introducing the template for fine-tuning Llama 3.1

Llama 3.1 is a collection of pre-trained and instruction-tuned large language models (LLMs) developed by Meta AI. It is known for its open-source nature and impressive capabilities. These include multilingual dialogue use cases, an extended context length of 128K tokens, advanced tool usage, and enhanced reasoning.

It is available in three model sizes:

- 405 billion parameters: The flagship foundation model, designed to push the boundaries of AI capabilities.

- 70 billion parameters: A high-performance model that supports a wide range of use cases.

- 8 billion parameters: A lightweight, ultra-fast model that retains many of the advanced features of its larger counterparts, making it highly capable.

At Clarifai, we offer the 8 billion parameter version of Llama 3.1. You can fine-tune it with the Llama 3.1 training template within the platform UI. Use it for extended context, instruction following, or applications such as text generation and text classification. We converted the model to the Hugging Face Transformers format for three reasons: better compatibility with our platform and pipelines, easier use, and simpler deployment across different environments.

To get the most out of the Llama 3.1 8B model, we also quantized it using the GPTQ quantization method. Additionally, we used the LoRA (Low-Rank Adaptation) method to achieve efficient and fast fine-tuning of the pre-trained Llama 3.1 8B model.
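The LoRA idea can be sketched with NumPy. This illustrates the general technique, not Clarifai's training code, and the matrix sizes, rank `r`, and `alpha` are illustrative assumptions rather than Llama 3.1's real dimensions.

- The pre-trained weight `W` stays frozen.
- Only two small matrices are trained: `A` (shape r × d_in) and `B` (shape d_out × r).
- The effective weight is `W + (alpha / r) * B @ A`.
- Because `B` starts at zero, the adapted layer initially behaves exactly like the original one.

```python
import numpy as np

def lora_forward(x, W, A, B, alpha):
    """Compute x @ (W + (alpha / r) * B @ A).T without modifying W.

    W: frozen pre-trained weight, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = A.shape[0]
    scaling = alpha / r
    # Frozen base path plus the scaled low-rank update path.
    return x @ W.T + scaling * (x @ A.T) @ B.T

rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 64, 64, 8, 16        # illustrative sizes only
W = rng.standard_normal((d_out, d_in))       # frozen pre-trained weight
A = rng.standard_normal((r, d_in)) * 0.01    # small random initialization
B = np.zeros((d_out, r))                     # zero init: no change at the start
x = rng.standard_normal((4, d_in))           # a batch of 4 input vectors

y = lora_forward(x, W, A, B, alpha)
full_params = W.size                         # parameters in the full matrix
lora_params = A.size + B.size                # parameters LoRA actually trains
print(y.shape, full_params, lora_params)     # (4, 64) 4096 1024
```

Here the adapter trains 1,024 values instead of 4,096 for this one matrix. That saving, repeated across every adapted layer, is what makes LoRA fine-tuning fast and memory-efficient.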

Fine-tuning Llama 3.1 is easy.

- Start by creating your Clarifai app and uploading the data you want to fine-tune on.
- Next, add a new model to your app and select the “Text Generator” model type.
- Choose your uploaded data, customize the fine-tuning parameters, and train the model.

You can also evaluate the model directly in the UI once training is complete.

Follow this guide to fine-tune the Llama 3.1 8B Instruct model with your own data.

Published new models

(Clarifai-hosted models are the ones we host within our Clarifai Cloud. Wrapped models are those hosted externally, but we deploy them on our platform using their third-party API keys.)

- Published Llama-3.1-8b-Instruct, a multilingual, highly capable LLM optimized for extended context, instruction following, and advanced applications.

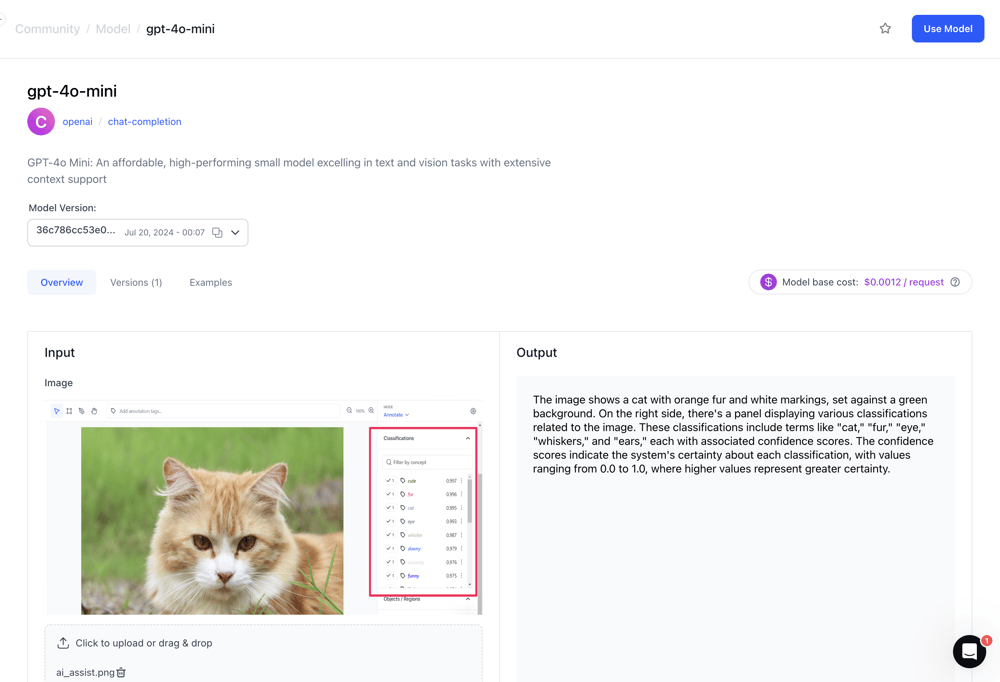

- Published GPT-4o-mini, an affordable, high-performing compact model that excels at text and vision tasks with extensive context support.

- Published Qwen1.5-7B-Chat, an open-source, multilingual LLM with 32K token support. It offers excellent language understanding, alignment with human preferences, and competitive tool usage.

- Published Qwen2-7B-Instruct, a state-of-the-art multilingual language model with 7.07 billion parameters. It excels in language understanding, generation, coding, and mathematics, and supports up to 128,000 tokens.

- Published Whisper-Large-v3, a Transformer-based speech-to-text model that reduces errors by 10–20% compared to Whisper-Large-v2. It was trained on 1 million hours of weakly labeled audio and can be used for translation and transcription tasks.

- Published Llama-3-8B-Instruct-4bit, an instruction-tuned LLM optimized for dialogue use cases. It can outperform many available open-source chat LLMs on common industry benchmarks.

- Published Mistral-Nemo-Instruct, a state-of-the-art 12B multilingual LLM with a 128K token context length. It excels in reasoning, code generation, and global applications.

- Published Phi-3-Mini-4K-Instruct, a 3.8B parameter small language model that offers state-of-the-art performance in reasoning and instruction-following tasks. Thanks to its training on high-quality data, it outperforms larger models.

Python SDK

Added patch operations

- Introduced patch operations for input annotations and concepts.

- Introduced patch operations for apps and datasets.

Improved the RAG SDK

- We enabled the RAG SDK to use environment variables for better security, flexibility and easier configuration management.
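This helper sketches the environment-variable pattern for credentials, so that secrets stay out of source code. As we understand it, the Clarifai SDK reads the personal access token from `CLARIFAI_PAT`. The `CLARIFAI_USER_ID` variable and the `load_clarifai_settings` helper are hypothetical names used only for illustration, not part of the SDK.

```python
import os

def load_clarifai_settings():
    """Hypothetical helper: read credentials from the environment, not from code."""
    pat = os.environ.get("CLARIFAI_PAT")
    if not pat:
        raise RuntimeError("Set the CLARIFAI_PAT environment variable first.")
    # CLARIFAI_USER_ID is a hypothetical variable name, used here for illustration.
    user_id = os.environ.get("CLARIFAI_USER_ID", "")
    return {"pat": pat, "user_id": user_id}
```

To use it, export the token in your shell or deployment environment before running your code. Rotating a key then requires no code change.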

Improved the logging experience

- Improved the logging experience by adding a constant width value to rich logging.

Organization settings and management

Introduced a new organization user role

- This role has access privileges similar to those of an organization Contributor for all apps and scopes. However, it comes with view-only permissions, without create, update, or delete privileges.

Restricted users' ability to add new organizations based on the number of existing organizations and feature access

- If a user has already created one organization and does not have access to the Multiple Organizations feature, the “Add an Organization” button is now disabled. We also display an appropriate tooltip.

- If a user has access to the Multiple Organizations feature but has reached the maximum creation limit of 20 organizations, the “Add an Organization” button is disabled. We also display an appropriate tooltip.
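The two rules above can be written as a small decision function. This is a hypothetical sketch of the logic as described, not Clarifai's UI code. The function name and tooltip strings are invented for illustration, and only the limit of 20 comes from the note.

```python
MAX_ORGANIZATIONS = 20  # creation limit stated in the release note

def add_org_button_state(created_orgs, has_multi_org_feature):
    """Hypothetical: return (enabled, tooltip) for the “Add an Organization” button."""
    if not has_multi_org_feature and created_orgs >= 1:
        # Without the feature, a user may own only one organization.
        return False, "Your plan does not include multiple organizations."
    if has_multi_org_feature and created_orgs >= MAX_ORGANIZATIONS:
        # With the feature, creation stops at the limit.
        return False, f"You have reached the limit of {MAX_ORGANIZATIONS} organizations."
    return True, None
```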

Improved the functionality of the Hyperparameter Sweeps module

- You can now use the module to efficiently train your model on combinations of threshold and hyperparameter values.
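The note does not describe how the module works internally. A plain grid search shows what training on combinations of thresholds and hyperparameters amounts to. The parameter names, values, and `toy_evaluate` are hypothetical stand-ins for a real train-and-validate run.

```python
from itertools import product

def sweep(search_space, evaluate):
    """Evaluate every combination in a grid; return (best_config, best_score)."""
    names = list(search_space)
    best_config, best_score = None, float("-inf")
    for values in product(*(search_space[n] for n in names)):
        config = dict(zip(names, values))
        score = evaluate(config)  # in practice: train, then score on validation data
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

space = {
    "threshold": [0.3, 0.5, 0.7],     # hypothetical values
    "learning_rate": [1e-4, 3e-4],    # hypothetical values
}

def toy_evaluate(config):
    # Hypothetical scoring function that peaks at threshold=0.5, lr=3e-4.
    return -abs(config["threshold"] - 0.5) - abs(config["learning_rate"] - 3e-4)

best, score = sweep(space, toy_evaluate)
print(best)  # {'threshold': 0.5, 'learning_rate': 0.0003}
```

Grid search evaluates all 3 × 2 = 6 combinations here. The cost grows multiplicatively with each added parameter, which is why a managed sweep module is convenient.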

Docs Refresh

Made significant improvements to our documentation site

- Upgraded the site to use Docusaurus version 3.4.

- Other enhancements include aesthetic updates, more intuitive menu-based navigation, and a new comprehensive API reference guide.

Models

Enabled deletion of associated model assets when removing a model annotation

- Now, when deleting a model annotation, the corresponding model assets are also marked as deleted.

Workflow

Improved the functionality of the Face workflow

- You can now use the Face workflow to efficiently generate face landmarks and perform face detection within your applications.

Fixed issues with Python and Node.js SDK code snippets

If you click the “Use Model” button on an individual model's page, the “Call by API / Use in a Workflow” modal appears. You can then integrate the code snippets it displays, available in several programming languages, into your use case.

- Previously, the Python and Node.js SDK code snippets for image-to-text models incorrectly output concepts instead of the expected text. We have fixed this issue, and the output is now correctly rendered as text.

Added support for non-ASCII characters

- Previously, non-ASCII characters were entirely filtered out of the UI when creating concepts. We have fixed this issue, and you can now use non-ASCII characters across all components.

Improved the display of concept relations

- Concept relationships are now displayed next to their corresponding concept names, providing clear and immediate context.