This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

Fine-tuning large language models using the Clarifai platform

Fine-tuning allows you to adapt the foundational text-to-text models to specific tasks or domains, making them more suitable for particular applications. By training on task-specific data, you can improve the model's performance on those tasks.

Fine-tuning lets you take advantage of transfer learning and use knowledge gained from a previously trained text model to facilitate the learning process of a new model for a given problem.

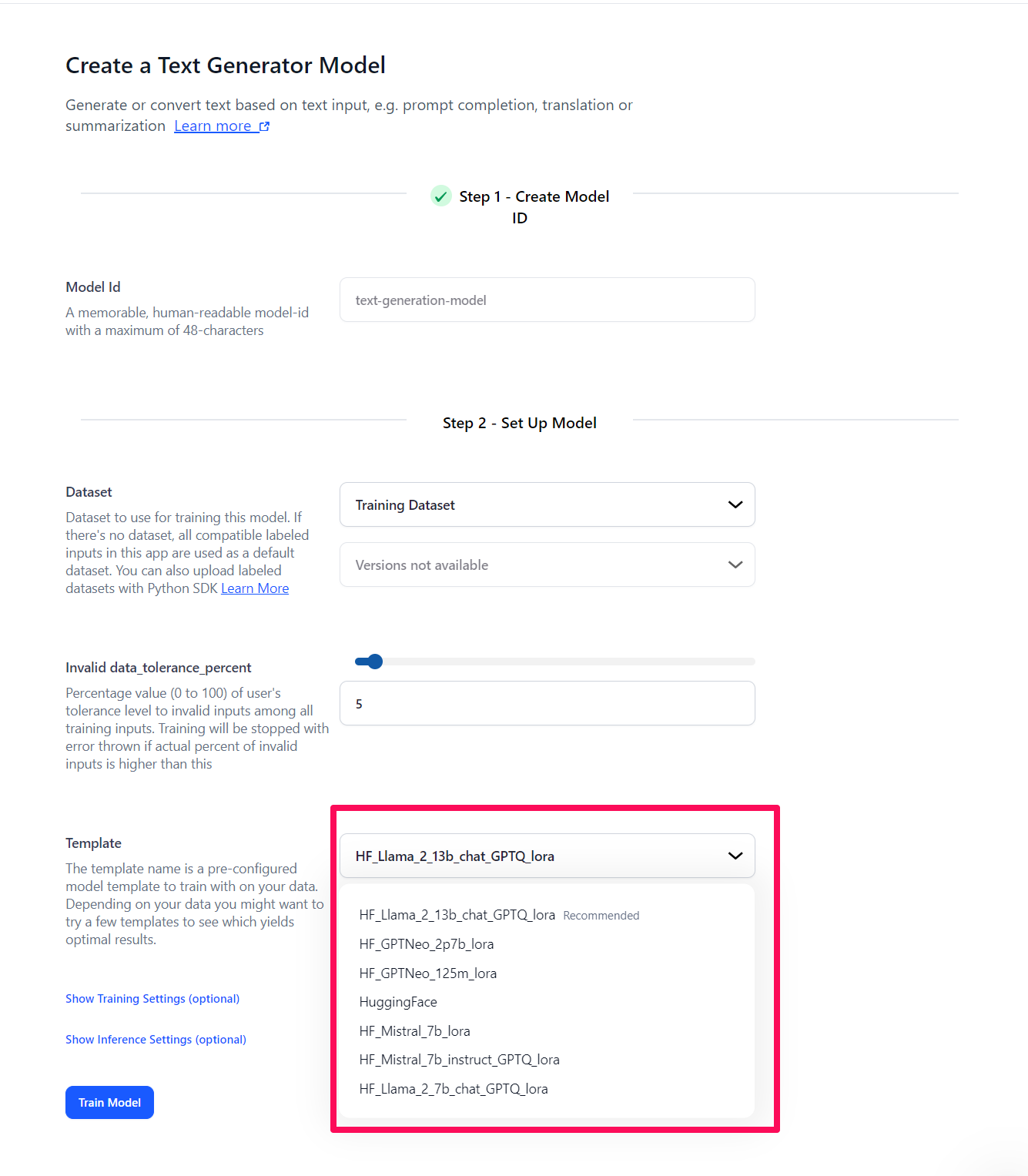

Select the predefined model template you want to use to train on your data. You can choose from the following templates:

- Llama2 7/13B and Mistral models with GPTQ-LoRA, featuring improved support for quantized/mixed-precision training techniques.

- GPT-Neo model, in either the 125-million-parameter version or the 2.7-billion-parameter version.

Optionally, you can configure the training and inference settings to improve the performance of your model. Check out the complete guide on fine-tuning large language models here.

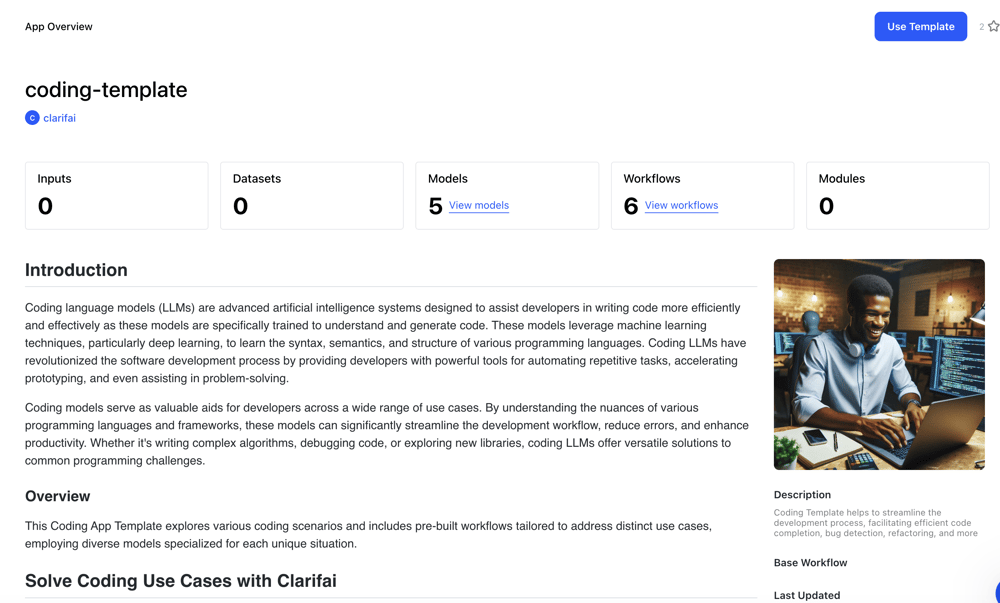

We published the Coding App template, which helps streamline the development process by facilitating efficient code completion, bug detection, refactoring, and more. App templates are pre-built, ready-to-use apps that make app creation faster and more efficient.

The Coding App template explores different coding scenarios and includes pre-built workflows designed to address different coding use cases, using different models for each unique situation.

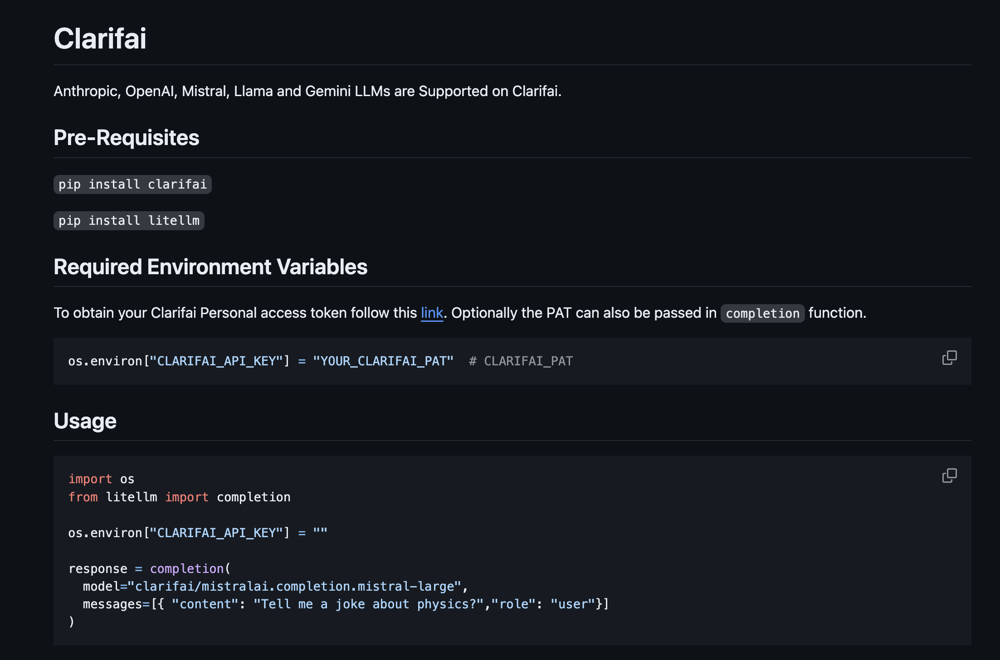

LiteLLM integrated with Clarifai

- LiteLLM is an open-source Python library that provides a unified interface for calling 100+ Large Language Models (LLMs) using a single input/output format. It lets you interact with LLM APIs using the standard OpenAI format. The goal of this integration is to give users more powerful, efficient, and versatile tools for their Natural Language Processing (NLP) tasks. Check its usage here.
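
As a rough illustration of what calling a Clarifai-hosted model through LiteLLM's OpenAI-style interface might look like, here is a minimal sketch. The `clarifai/<user_id>.<app_id>.<model_id>` model string, the `CLARIFAI_API_KEY` environment variable, and the example IDs are assumptions to verify against the LiteLLM and Clarifai documentation; the helper names `clarifai_model_name`, `build_messages`, and `ask` are hypothetical and exist only for this example.

```python
import os


def clarifai_model_name(user_id, app_id, model_id):
    """Build a LiteLLM model string for a Clarifai model.

    Assumption: LiteLLM routes to Clarifai when the model string has the
    form "clarifai/<user_id>.<app_id>.<model_id>".
    """
    return f"clarifai/{user_id}.{app_id}.{model_id}"


def build_messages(prompt):
    """Wrap a plain prompt in the standard OpenAI chat message format."""
    return [{"role": "user", "content": prompt}]


def ask(prompt, model):
    """Send one prompt through LiteLLM and return the reply text."""
    # Imported lazily so the helpers above work without litellm installed.
    from litellm import completion  # pip install litellm

    response = completion(model=model, messages=build_messages(prompt))
    # LiteLLM returns responses in the OpenAI response shape.
    return response.choices[0].message.content


if __name__ == "__main__":
    # Assumption: the Clarifai token is read from CLARIFAI_API_KEY.
    if os.environ.get("CLARIFAI_API_KEY"):
        # Illustrative IDs only; substitute the model you want to call.
        model = clarifai_model_name("mistralai", "completion", "mistral-large")
        print(ask("Summarize what a mixture-of-experts model is.", model))
    else:
        print("Set CLARIFAI_API_KEY to run the live request.")
```

Because the request and response follow the OpenAI format, switching to another provider supported by LiteLLM should, in principle, only require changing the model string.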

Improved deep fine-tuned templates.

We have made significant improvements to various deep fine-tuned templates, increasing the capabilities available for training your models. Updates include:

- MMClassification visual classification template: Updated from version 1.5.0 to 2.1.0, offering improved features and performance for visual classification tasks.

- MMDetection visual detection template: Updated from version 2.24.1 to 3.3.0, providing advanced capabilities and optimizations for visual detection tasks.

- Added support for YOLOX: We introduced support for YOLOX, a state-of-the-art object detection training template, further expanding the tools available for high-performance object detection.

New models published.

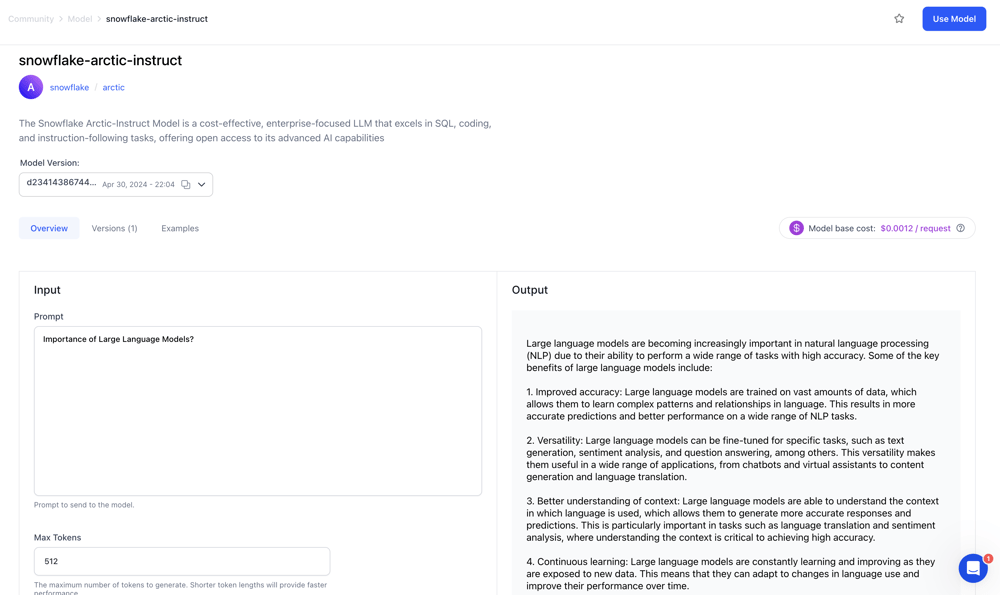

- Wrapped the Snowflake Arctic-Instruct model, a cost-effective, enterprise-focused Large Language Model (LLM) that excels in SQL, coding, and instruction-following tasks and offers open access to its cutting-edge AI capabilities.

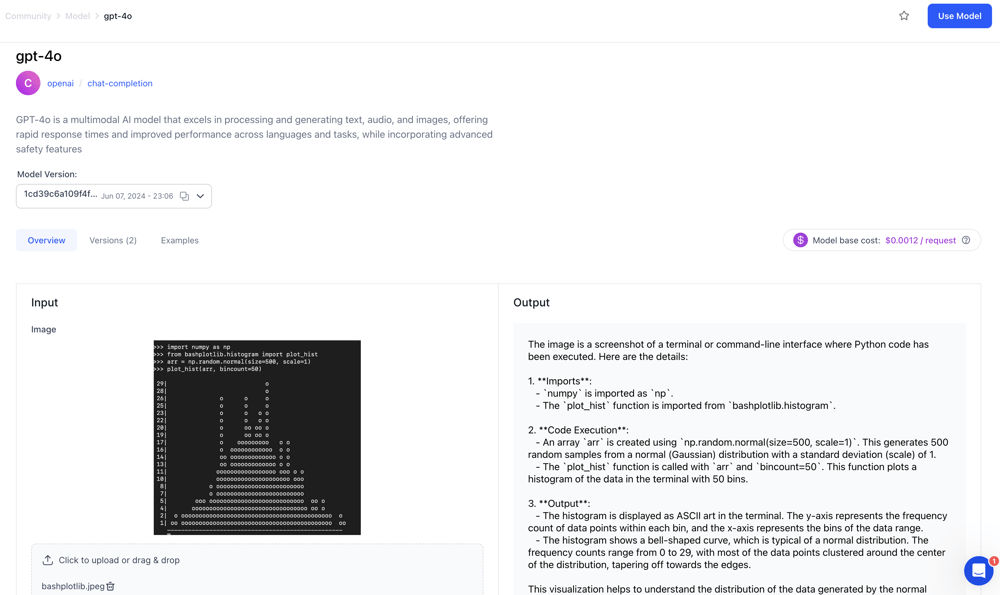

- Wrapped GPT-4o, a multimodal AI model that excels at processing and generating text, audio, and images. It offers faster response times and improved performance across languages and tasks, while incorporating advanced safety features.

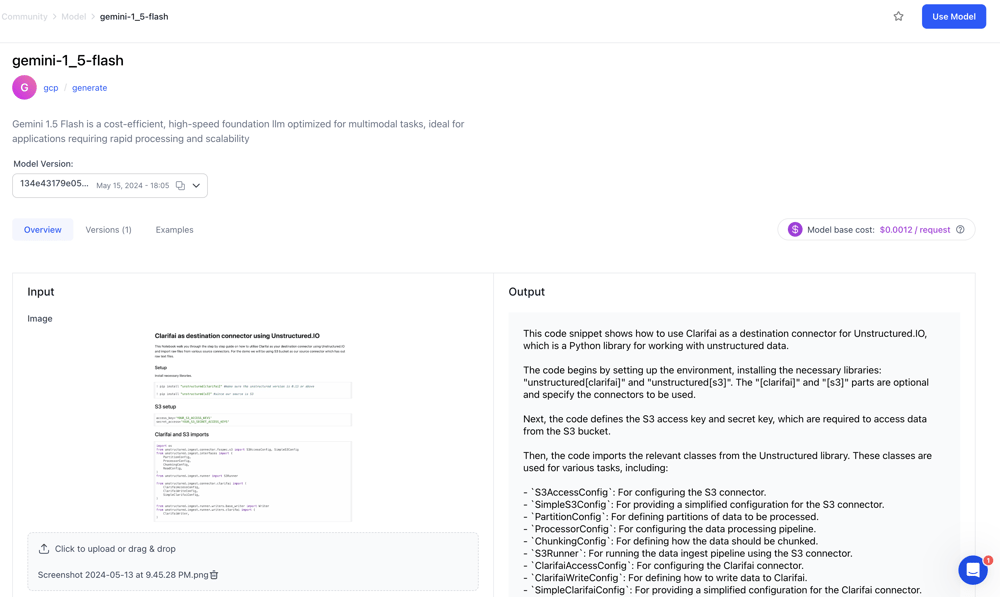

- Wrapped Gemini 1.5 Flash, a cost-effective, high-speed foundational LLM suited to multimodal tasks and ideal for applications requiring fast processing and scalability.

- Clarifai-hosted Qwen-VL-Chat, a high-performance Large Vision Language Model (LVLM) by Alibaba Cloud for text-image dialogue tasks, supporting zero-shot captioning, visual question answering (VQA), referring expression comprehension, and multilingual dialogue.

- Clarifai-hosted CogVLM-chat, a state-of-the-art visual language model that excels at generating context-aware, conversational responses by integrating high-level visual and textual understanding.

- Wrapped WizardLM-2 8x22B, a state-of-the-art open-source LLM that excels at complex tasks such as chat, reasoning, and multilingual understanding, closely competing with leading proprietary models.

- Wrapped Qwen1.5-110B-chat, an LLM with over 100 billion parameters that performs competitively on base language tasks, shows significant improvement in chatbot evaluations, and supports multiple languages.

- Wrapped Mixtral-8x22B-Instruct, the latest Mixture of Experts (MoE) LLM from Mistral AI, which combines eight 22B expert models.

- Wrapped DeepSeek-V2-Chat, a high-performing, cost-effective MoE LLM with 236 billion parameters that excels in diverse tasks such as chat, code generation, and mathematical reasoning.

- Clarifai-hosted MiniCPM-Llama3-V 2.5, a high-performance, efficient 8B-parameter multimodal model that excels in OCR, multilingual support, and multimodal tasks.

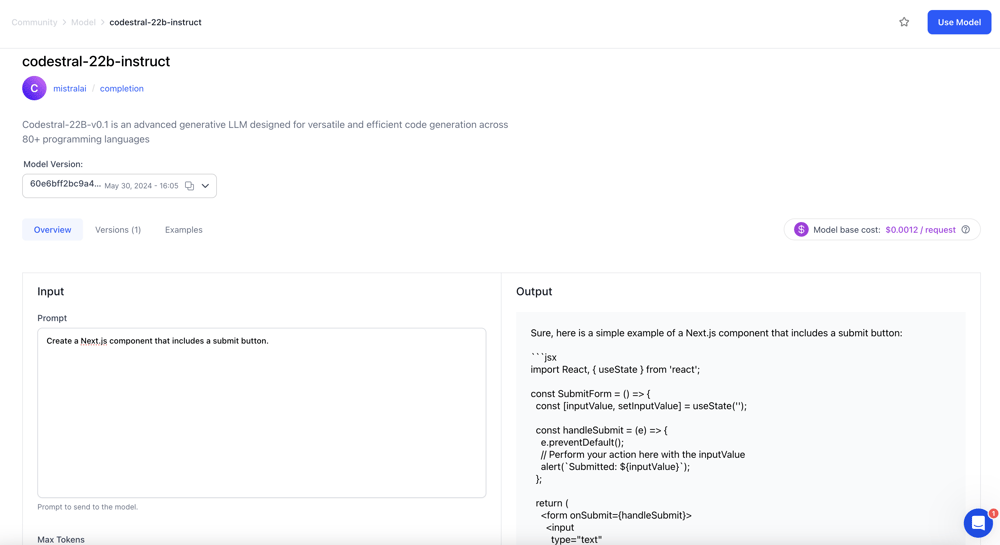

- Wrapped Codestral-22B-v0.1, an advanced generative LLM designed for versatile and efficient code generation in 80+ programming languages.

- Clarifai-hosted Phi-3-Vision-128K-Instruct, a high-performance, cost-effective multimodal model for advanced text and image comprehension tasks.

- Clarifai-hosted OpenChat-3.6-8b, a model fine-tuned with C-RLFT from the high-performance open-source LLM Llama3-8b that provides ChatGPT-level performance in a variety of tasks.

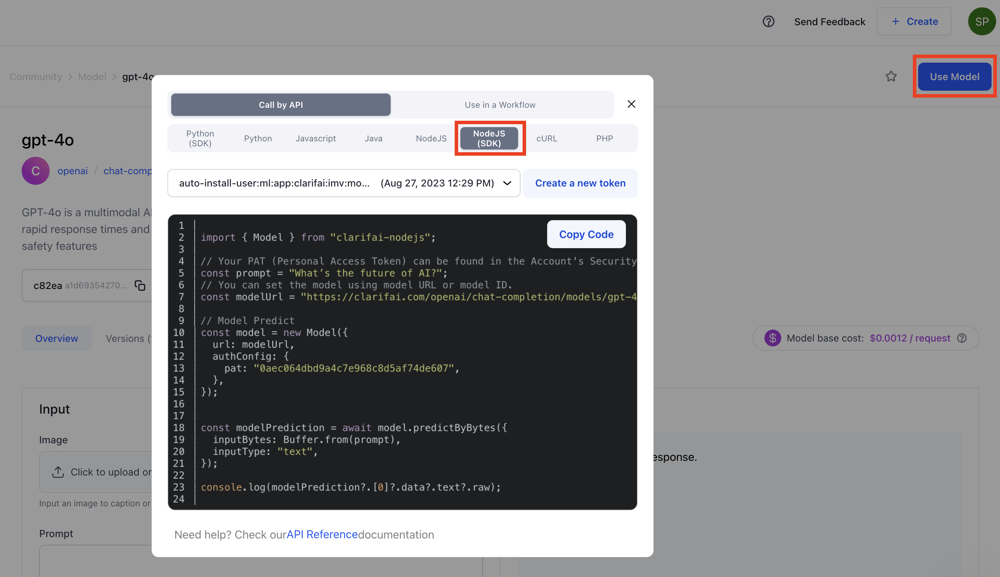

Added TypeScript (Node.js) SDK code snippets to the Use Model / Workflow modal window

If you want to use a model or workflow to make an API call, click the Use Model / Workflow button in the upper-right corner of the individual page of a model or workflow. The modal that pops up contains snippets in various programming languages, which you can copy and use.

- We introduced TypeScript SDK code snippets as a tab. Users can now easily access and copy code snippets from the modal.

Added a feature that redirects users to their previous page after logging in.

- If you are logged out, logging back in now returns you to the page you were on.

Introduced the ability to add users who are not members of the organization to teams

- This enhancement allows for greater flexibility in team formation and collaboration.

Redesigned the user activation form for new Clarifai accounts.

- After signing up, users receive a confirmation email. Clicking the link in the email takes them to the Clarifai platform to complete their profile details.

Apps / Templates

Improved handling of unauthenticated users

If a user is not logged in or does not have access to the app:

- We hide the input block and its values. Therefore, the user cannot see the input details on the app overview page.

- We direct the user to available public resources (e.g., models) without prompting the user to log in.

Added a “Reindex” checkbox to the “Change Base Workflow” section.

Improved the app settings page.

- Removed extra spaces in the tables within the API Keys and Collaborators sections on the app settings page for a cleaner and more streamlined layout.

Expanded the list of workflows available when creating a new application.

- Now you can choose from a wider selection of base workflows to suit your needs.

Improved ability to select resource version

- We've introduced a standardized version selection feature across the platform, now available on both the model-viewer and dataset-viewer pages. This feature allows you to select and view specific versions of your resources using short version numbers and dates for easy identification.

Fixed an issue with the left sidebar

- We fixed an issue where the left sidebar on Input Manager and Input Viewer was not scrollable, which made it difficult to access all of the sidebar content. The left sidebar is now scrollable, allowing you to easily view all sections.