What is Retrieval-Augmented Generation?

Large language models (LLMs) are not always up to date, and they lack domain-specific knowledge, because they are trained for general tasks and cannot answer questions about your own data.

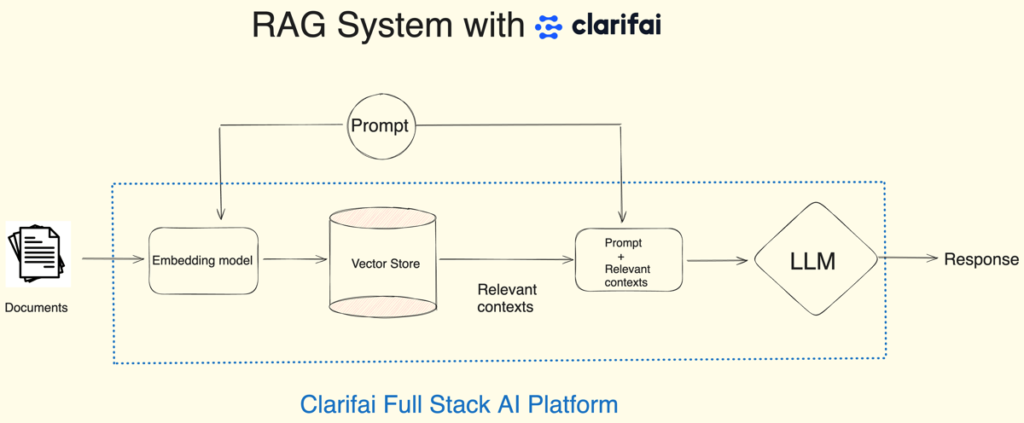

That's where Retrieval-Augmented Generation (RAG) comes in: an architecture that provides LLMs with the most relevant and contextually important data when answering questions.

There are three main components to building a RAG system:

- Embedding models, which embed data into vectors.

- A vector database for storing and retrieving these embeddings, and

- A large language model, which takes context from the vector database to provide an answer.
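To make the first two components concrete, here is a minimal, self-contained sketch. It is not how Clarifai implements them: the bag-of-words `embed` function and the `ToyVectorStore` class are hypothetical stand-ins for a real embedding model and a real vector database, and they only illustrate the idea of embedding text and retrieving the closest match.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (vector, text) pairs and ranks them."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((embed(text), text))

    def search(self, query, top_k=1):
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


store = ToyVectorStore()
store.add("The refund window is 30 days from delivery.")
store.add("Support is available on weekdays from 9 to 5.")

# The best-matching text is what would be handed to the LLM as context.
print(store.search("How long is the refund window?"))
# prints ['The refund window is 30 days from delivery.']
```

In a real system, the text returned by `search` becomes the context that the third component, the LLM, uses to answer the question.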

Clarifai provides all three in a single platform, so you can build RAG applications seamlessly.

How to Build a Retrieval-Augmented Generation System

As part of our “AI in 5” series, where we teach you how to create amazing things in just 5 minutes, this blog shows you how to build a RAG system in just 4 lines of code using Clarifai's Python SDK.

Step 1: Install Clarifai and set your personal access token as an environment variable

First, install the Clarifai Python SDK with pip: `pip install clarifai`.

Next, set your Clarifai Personal Access Token (PAT) as an environment variable so that the SDK can access the LLMs and the vector store. To create a new personal access token, sign up for Clarifai or, if you already have an account, log in to the portal and go to the Security option in Settings. Create a new personal access token by providing a token description and selecting the scopes. Copy the token and set it as an environment variable.
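As a small sketch of this step, the helper below checks that the token is available before any SDK call is made. The variable name `CLARIFAI_PAT` is assumed here to be the one the SDK reads, and `get_pat` is a hypothetical helper, not part of the SDK.

```python
import os


def get_pat(env=os.environ):
    """Return the personal access token, or fail early with a clear message.

    Assumes the token lives in an environment variable named CLARIFAI_PAT.
    """
    pat = env.get("CLARIFAI_PAT", "").strip()
    if not pat:
        raise RuntimeError(
            "CLARIFAI_PAT is not set. Create a token under Settings > Security "
            "and export it before running this code."
        )
    return pat


# In a notebook, you could set the token for the current process only:
# os.environ["CLARIFAI_PAT"] = "YOUR_PAT_HERE"
```

Failing early like this tends to give a clearer error than letting the first API call fail with an authentication problem.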

Once you have installed the Clarifai Python SDK and set your personal access token as an environment variable, 4 lines of code are all you need to create a RAG system. Let's look at them!

Step 2: Configure the RAG system by passing your Clarifai user ID.

First, import the RAG class from the Clarifai Python SDK. Then set up your RAG system by passing your Clarifai user ID.

You can use the setup method and pass the user ID. Since you are already signed up on the platform, you can find your user ID under the Account option in Settings.
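A minimal sketch of this step might look like the following. The import path `clarifai.rag` and the keyword name `user_id` are assumptions based on the description above, and `create_rag_agent` is a hypothetical wrapper added only to validate the input before the network call.

```python
def create_rag_agent(user_id):
    """Set up a RAG agent for the given Clarifai user ID.

    Assumes the SDK exposes `RAG.setup(user_id=...)` under `clarifai.rag`
    and that CLARIFAI_PAT is already set in the environment.
    """
    if not user_id or not user_id.strip():
        raise ValueError("user_id must be a non-empty string")

    # Imported lazily so this file can be loaded without the SDK installed.
    from clarifai.rag import RAG

    return RAG.setup(user_id=user_id.strip())


# Usage (needs network access and a valid token):
# rag_agent = create_rag_agent("YOUR_USER_ID")
```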

Once you pass the user ID, the setup method will create:

- A Clarifai app with “Text” as the base workflow. If you're not familiar with apps, they are the basic building blocks for creating projects on the Clarifai platform. Your data, annotations, models, predictions, and searches all live in apps. Apps act as your vector database: once you upload data to a Clarifai app, it embeds the data and indexes the embeddings based on your base workflow. You can then use these embeddings to query for matches.

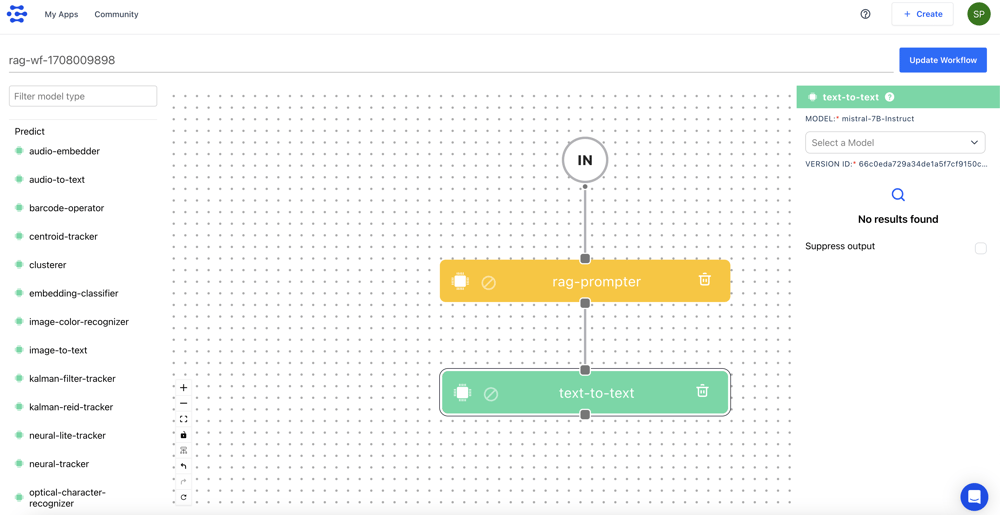

- A RAG prompter workflow. Workflows in Clarifai let you combine multiple models and operators to build powerful multimodal systems for different use cases. This workflow is created within the app created above. Let's look at the RAG prompter workflow and what it does.

The workflow has an input, a RAG prompter model type, and a text-to-text model type. Let's walk through the flow. Whenever a user sends an input prompt, the RAG prompter uses that prompt to find the relevant context in the Clarifai vector store.

The context is then passed along with the prompt to the text-to-text model type, which answers it. By default, this workflow uses the Mistral-7B-Instruct model. Finally, the LLM uses the context to answer the user's question. That is the RAG prompter workflow.
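The flow just described can be sketched in plain Python. This is only an illustration of the idea, not Clarifai's workflow code: `PROMPT_TEMPLATE` is a hypothetical template, and `retrieve` and `llm` are placeholder callables standing in for the vector store lookup and the text-to-text model.

```python
# Hypothetical prompt template; the real RAG prompter may word this differently.
PROMPT_TEMPLATE = (
    "Context information is below:\n"
    "{context}\n"
    "Using the context above, answer the query.\n"
    "Query: {query}\n"
    "Answer: "
)


def rag_prompter(query, retrieve, top_k=2):
    """Step 1: look up context for the query and merge both into one prompt."""
    chunks = retrieve(query)[:top_k]
    return PROMPT_TEMPLATE.format(context="\n".join(chunks), query=query)


def run_workflow(query, retrieve, llm):
    """Step 2: pass the context-enriched prompt to the text-to-text model."""
    return llm(rag_prompter(query, retrieve))


# Placeholder components so the sketch runs without any external service.
def fake_retrieve(query):
    return ["Chunk A about the topic.", "Chunk B about the topic.", "Chunk C."]


def fake_llm(prompt):
    return f"(model reply based on {prompt.count('Chunk')} context chunks)"


print(run_workflow("What is the topic?", fake_retrieve, fake_llm))
```

Keeping retrieval and generation as separate steps is what lets you swap the LLM without touching the stored embeddings.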

You don't need to worry about any of this, because the setup method handles these tasks for you. You just need to specify your user ID.

Other parameters available in the setup method are:

app_url: If you already have a Clarifai app that contains your data, you can pass that app's URL instead of creating an app from scratch using the user ID.

llm_url: As we've seen, the prompter workflow uses the Mistral-7B-Instruct model by default, but there are many open-source and third-party LLMs in the Clarifai community. You can pass the URL of your preferred LLM.

base_workflow: As mentioned, your data is embedded in your Clarifai app based on the base workflow. By default, this is the Text workflow, but other workflows are available. You can specify your preferred workflow.
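One way to keep these options straight is to assemble the keyword arguments before calling the setup method. The sketch below assumes that `user_id` and `app_url` are alternatives (exactly one should be given), which matches the description above but is an assumption about the SDK. `build_setup_kwargs` is a hypothetical helper, and the URLs in the usage comment are placeholders.

```python
def build_setup_kwargs(user_id=None, app_url=None, llm_url=None, base_workflow=None):
    """Collect arguments for the setup method, leaving defaults untouched.

    Assumption: exactly one of user_id (create a new app) or app_url
    (reuse an existing app) should be provided.
    """
    if bool(user_id) == bool(app_url):
        raise ValueError("Pass exactly one of user_id or app_url")

    kwargs = {"user_id": user_id} if user_id else {"app_url": app_url}
    if llm_url:
        kwargs["llm_url"] = llm_url  # otherwise the default LLM is used
    if base_workflow:
        kwargs["base_workflow"] = base_workflow  # otherwise the Text workflow
    return kwargs


# Usage (placeholders, not real URLs):
# from clarifai.rag import RAG
# rag_agent = RAG.setup(**build_setup_kwargs(
#     app_url="https://clarifai.com/<user_id>/<app_id>",
#     llm_url="https://clarifai.com/<user_id>/<app_id>/models/<model_id>",
# ))
```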

Step 3: Upload your documents.

Next, upload your documents so that they are embedded and stored in the Clarifai vector database. You can provide a file path to your document, a folder path containing several documents, or a public URL of the document.

In this example, I am giving the path to a PDF file, which is a recent survey paper on multimodal LLMs. Once you upload a document, it is loaded and parsed into chunks based on the chunk_size and chunk_overlap parameters. By default, chunk_size is set to 1024 and chunk_overlap is set to 200, but you can adjust these parameters.
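To see how chunk_size and chunk_overlap interact, here is a simple character-based sliding window. This is an illustration only: the SDK's own splitter may count tokens or respect sentence boundaries, and `chunk_text` is a hypothetical function written for this post.

```python
def chunk_text(text, chunk_size=1024, chunk_overlap=200):
    """Split text into windows of chunk_size characters.

    Each window starts (chunk_size - chunk_overlap) characters after the
    previous one, so neighbouring chunks share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# prints ['abcd', 'defg', 'ghij']
```

A larger overlap makes it less likely that a sentence relevant to a question is cut in half at a chunk boundary, at the cost of storing more near-duplicate text.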

Once the document is parsed into chunks, the chunks are ingested into the Clarifai app.
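The upload step could be wrapped as shown below. The keyword names `file_path`, `folder_path`, and `url` are assumptions about the SDK's upload method based on the three options described above, and `upload_source` is a hypothetical convenience wrapper that picks the right one.

```python
import os


def upload_source(rag_agent, source, chunk_size=1024, chunk_overlap=200):
    """Upload a file, a folder, or a public URL through the agent's upload method.

    Assumes the method accepts file_path, folder_path, or url, plus the
    chunk_size and chunk_overlap parameters.
    """
    if source.startswith(("http://", "https://")):
        location = {"url": source}
    elif os.path.isdir(source):
        location = {"folder_path": source}
    else:
        location = {"file_path": source}

    return rag_agent.upload(chunk_size=chunk_size, chunk_overlap=chunk_overlap, **location)


# Usage with a local PDF (hypothetical file name) and smaller chunks:
# upload_source(rag_agent, "multimodal_llm_survey.pdf", chunk_size=512, chunk_overlap=100)
```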

Step 4: Chat with your documents.

Finally, chat with your data using the chat method. Here, I am asking it to summarize the PDF file and the research on multimodal large language models.
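A sketch of the chat call is shown below. The message format, a list of dictionaries with `role` and `content` keys and a `"human"` role, is an assumption about what the chat method expects, and `ask` is a hypothetical helper that builds that list for a single question.

```python
def ask(rag_agent, question):
    """Send one question to the agent and return whatever its chat method returns.

    Assumes chat accepts messages=[{"role": "human", "content": ...}].
    """
    if not question.strip():
        raise ValueError("question must not be empty")
    messages = [{"role": "human", "content": question.strip()}]
    return rag_agent.chat(messages=messages)


# Usage:
# reply = ask(rag_agent, "Summarize the survey on multimodal large language models.")
# print(reply)
```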

Conclusion

That is how easy it is to build a RAG system with the Python SDK in 4 lines of code. To summarize: to configure the RAG system, all you need to do is pass your user ID or, if you already have your own Clarifai app, pass that app's URL. You can also pass your preferred LLM and workflow.

Next, upload the documents. You can optionally specify the chunk_size and chunk_overlap parameters to control how the documents are parsed and chunked.

Finally, chat with your documents. A Colab notebook is available if you want to try this yourself.

If you would prefer to watch this tutorial, it is also available as a YouTube video.