It seems like every few months, someone publishes a machine learning paper or demo that makes my jaw drop. This month, it's OpenAI's new image generation model, DALL·E.

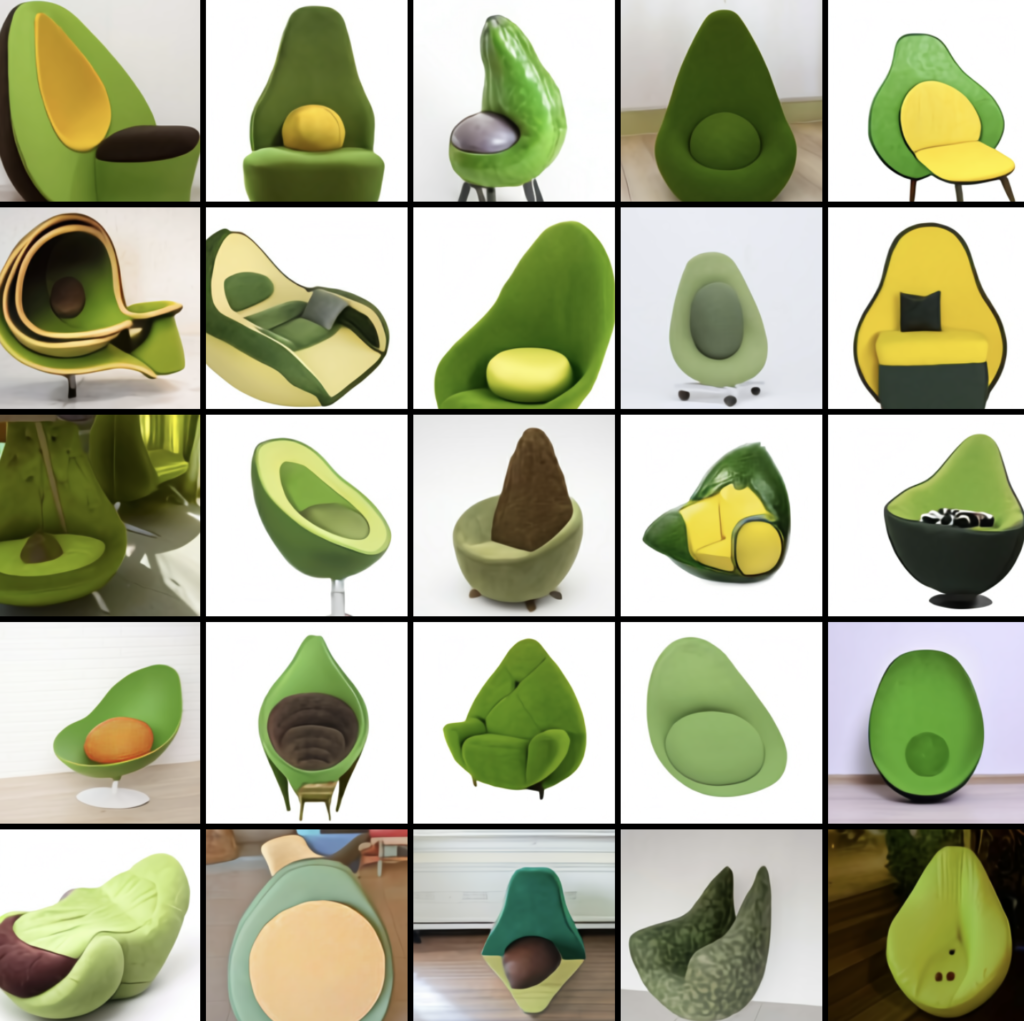

This 12-billion-parameter neural network takes a text caption (e.g., “a chair in the shape of an avocado”) and generates images to match it:

From https://openai.com/blog/dall-e/

I think its images are impressive enough on their own (I'd buy one of those avocado chairs), but even more impressive is DALL·E's ability to understand and render concepts of space, time, and even logic (more on that in a second).

In this post, I'll give you a quick overview of what DALL·E can do, how it works, how it fits in with recent trends in ML, and why it's important. Let's go!

What is DALL·E and what can it do?

In July, OpenAI, the company that created DALL·E, released a similarly giant model called GPT-3 that wowed the world with its ability to generate human-like text, including op-eds, poems, sonnets, and even computer code. DALL·E is a natural extension of GPT-3 that parses text prompts and then responds in pictures rather than words. In one example from OpenAI's blog, the model renders images from the prompt “A living room with two white armchairs and a painting of the Colosseum. The painting is mounted above a modern fireplace”:

From https://openai.com/blog/dall-e/

Pretty smart, right? You can probably already see how this could be useful for designers. Note that DALL·E can generate a large set of images from a single prompt. The images are then ranked by a second OpenAI model, called CLIP, which tries to determine which images match the prompt best.
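To make the generate-then-rank idea concrete, here's a minimal sketch. It assumes each caption and image has already been turned into an embedding vector, and it scores candidates by cosine similarity. The vectors, the image names, and the `rerank` helper are all made up for illustration; this is not OpenAI's code, and the real CLIP model is far more involved.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rerank(caption_embedding, candidates, top_k=2):
    """Sort candidate images by similarity to the caption, best first.

    `candidates` maps a (hypothetical) image name to its embedding.
    """
    scored = [
        (name, cosine_similarity(caption_embedding, embedding))
        for name, embedding in candidates.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Toy, hand-written embeddings; a real system would get these from a model.
caption = [1.0, 0.0, 1.0]
candidates = {
    "image_a": [0.9, 0.1, 0.8],
    "image_b": [0.0, 1.0, 0.0],
    "image_c": [0.5, 0.5, 0.5],
}

best = rerank(caption, candidates)
print([name for name, _ in best])  # ['image_a', 'image_c']
```

The design choice worth noticing is the split: one model proposes lots of candidates, and a separate scorer decides which ones to show.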

How was DALL·E created?

Unfortunately, we don't have a ton of details on this yet because OpenAI has yet to publish a full paper. But at its core, DALL·E uses the same new neural network architecture that's responsible for many recent advances in ML: the Transformer. Transformers, introduced in 2017, are an easy-to-parallelize type of neural network that can be scaled up and trained on huge datasets. They've been particularly revolutionary in natural language processing (they're the basis of models such as BERT, T5, GPT-3, and others), improving the quality of Google Search results, translation, and even protein structure prediction.

Most of these large language models are trained on enormous text datasets (such as all of Wikipedia or crawls of the web). What makes DALL·E unique is that it was trained on sequences that were combinations of words and pixels. We don't yet know what the dataset was (it probably contained images and captions), but I can guarantee you it was probably huge.
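Since the details aren't public, take the following as a purely illustrative sketch of what "a sequence combining words and pixels" could look like: caption tokens followed by image tokens, with the image ids shifted so the two kinds of token never collide. The vocabulary size, the token values, and the `build_sequence` helper are assumptions of mine, not DALL·E's actual format.

```python
def build_sequence(text_tokens, image_tokens, text_vocab_size):
    """Concatenate caption tokens and image tokens into one sequence.

    Image token ids are shifted by `text_vocab_size` so that text and
    image tokens never share an id.
    """
    shifted_image = [token + text_vocab_size for token in image_tokens]
    return list(text_tokens) + shifted_image

TEXT_VOCAB_SIZE = 100          # hypothetical vocabulary size
caption_tokens = [5, 17, 42]   # stand-in for a tokenized caption
pixel_tokens = [0, 3, 3, 1]    # stand-in for a tiny 2x2 image, one token per pixel

sequence = build_sequence(caption_tokens, pixel_tokens, TEXT_VOCAB_SIZE)
print(sequence)  # [5, 17, 42, 100, 103, 103, 101]
```

A model trained to predict the next token in sequences like this one would, given only the caption part, continue by producing image tokens.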

How “smart” is DALL·E?

While these results are impressive, whenever we train a model on a huge dataset, a skeptical machine learning engineer is right to ask whether the results are high quality merely because they've been copied or memorized from the source material.

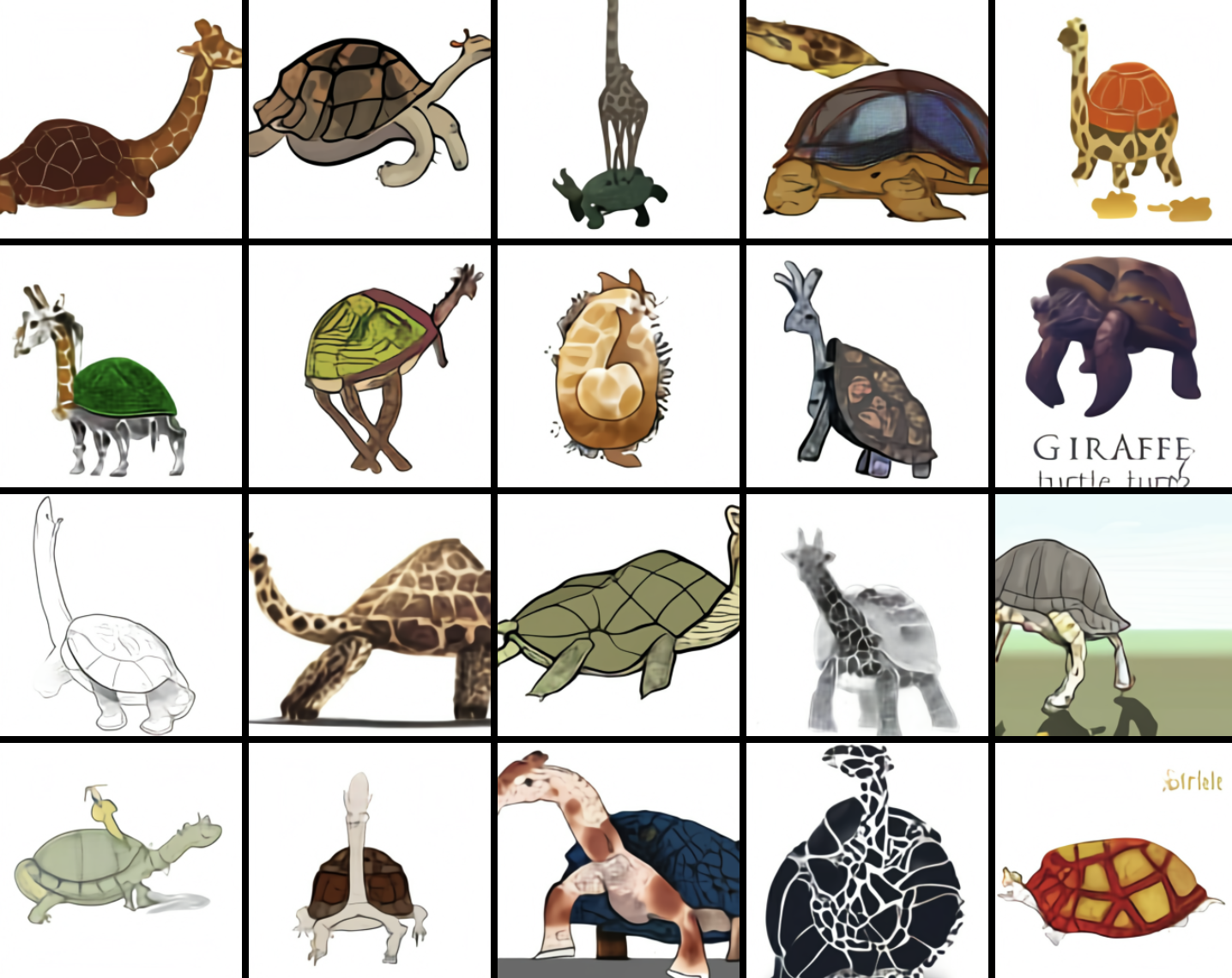

To prove that DALL·E isn't just regurgitating images, the OpenAI authors forced it to render some pretty unusual prompts:

“A professional high-quality illustration of a giraffe turtle chimera.”

From https://openai.com/blog/dall-e/

“A snail made of a harp.”

From https://openai.com/blog/dall-e/

It's hard to imagine that the model encountered many giraffe-turtle hybrids in its training data set, making the results all the more impressive.

What's more, these strange prompts hint at something even more interesting about DALL·E: its ability to perform “zero-shot visual reasoning.”

Zero-Shot Visual Reasoning

Typically in machine learning, we train models by giving them thousands or millions of examples of the task we want them to perform, and hope they pick up on the pattern.

To train a model that identifies dog breeds, for example, we might show a neural network thousands of images of dogs labeled by breed and then test its ability to tag new images of dogs. It's a task of limited scope that seems almost quaint compared to OpenAI's latest achievements.
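Here's that train-then-test loop in miniature. To keep it self-contained, I've swapped the neural network for a much simpler nearest-centroid classifier, and the "images" are made-up (height in cm, weight in kg) pairs, so treat this as a sketch of the workflow rather than a real breed detector.

```python
from collections import defaultdict

def train(examples):
    """Average the feature vectors for each label (a nearest-centroid model)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [total / counts[label] for total in totals]
        for label, totals in sums.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to `features`."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Made-up labeled examples: ([height_cm, weight_kg], breed)
training = [
    ([25.0, 6.0], "pug"), ([30.0, 8.0], "pug"),
    ([60.0, 30.0], "labrador"), ([57.0, 32.0], "labrador"),
]
# New examples the model never saw during training.
held_out = [([28.0, 7.0], "pug"), ([58.0, 29.0], "labrador")]

model = train(training)
correct = sum(predict(model, features) == label for features, label in held_out)
accuracy = correct / len(held_out)
print(accuracy)  # 1.0
```

The model only ever learns the one task its labels describe, which is exactly the limitation the next section contrasts against.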

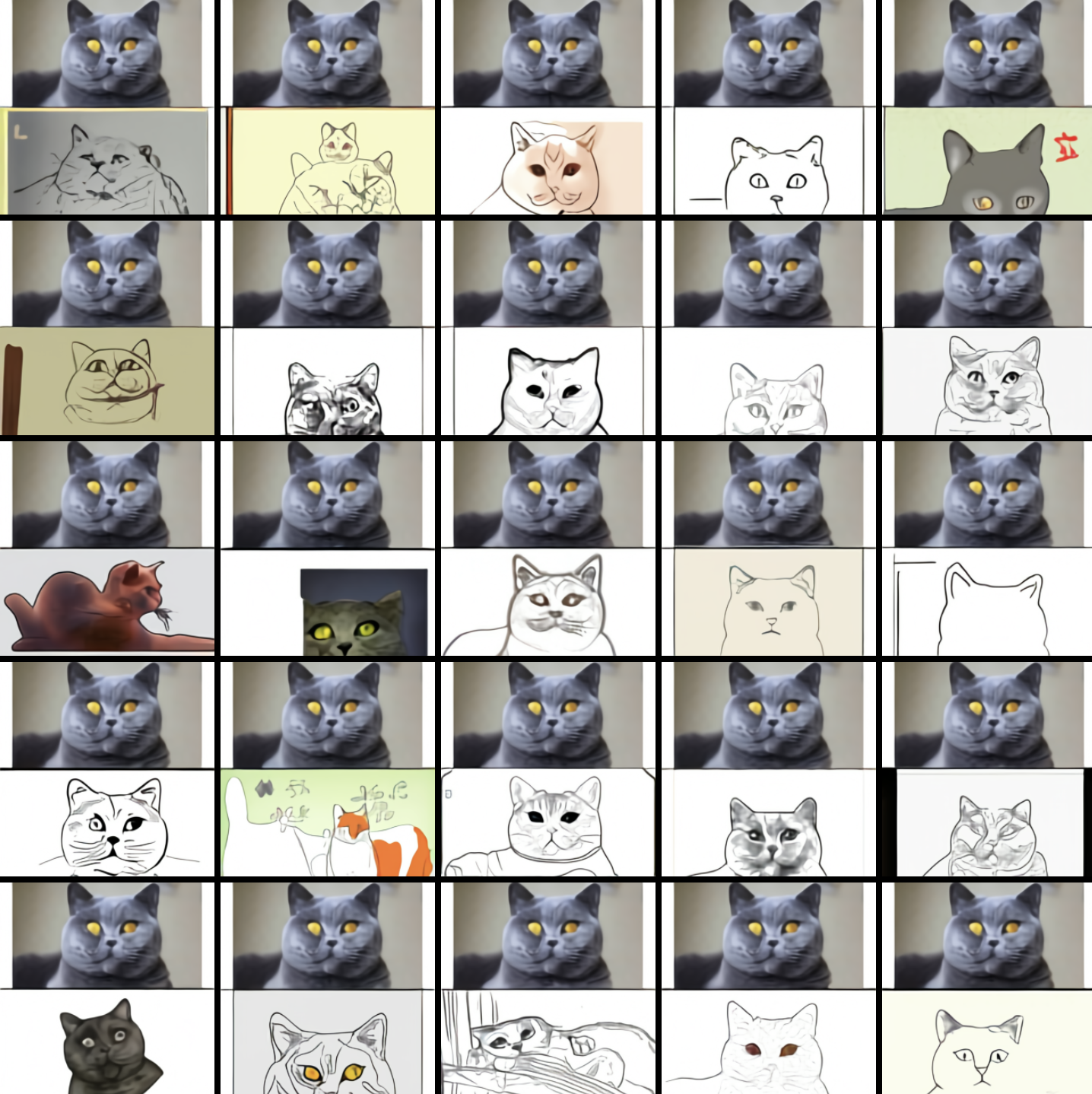

Zero-shot learning, on the other hand, is the ability of a model to perform tasks it wasn't specifically trained to do. For example, DALL·E was trained to generate images from captions. But with the right text prompt, it can also turn images into sketches:

Results from the prompt “the exact same cat on the top as a sketch on the bottom”. From https://openai.com/blog/dall-e/

DALL·E can also render custom text on signs:

Results from the prompt “A storefront with the word 'openai' written on it”. From https://openai.com/blog/dall-e/

In this way, DALL·E can act almost like a Photoshop filter, even though it wasn't specifically designed to behave that way.

The model even displays an “understanding” of visual concepts (e.g., “macroscopic” or “cross-section” images), places (e.g., “a photo of Chinese food”), and time (e.g., “a photo of Alamo Square, San Francisco, from a street at night”; “a photo of a phone from the 20s”). For example, here's what it spat out in response to the prompt “a photo of Chinese food”:

“Photo of Chinese food” from https://openai.com/blog/dall-e/.

In other words, DALL·E can do more than just paint a pretty picture for a caption. It can, in a sense, also answer questions visually.

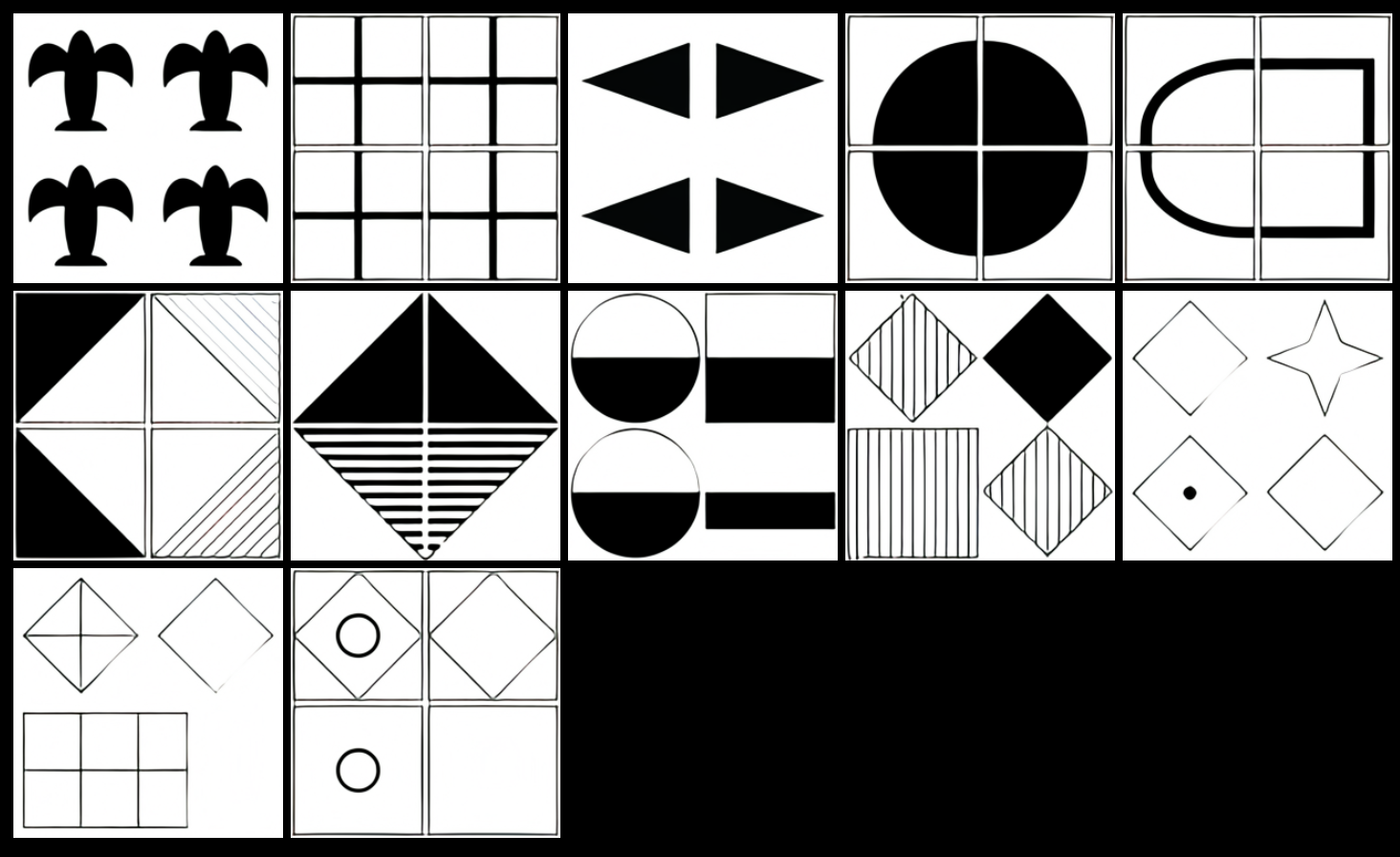

To test DALL·E's visual reasoning ability, the authors gave it a visual IQ test. In the examples below, the model had to complete the bottom right corner of the grid, following the test's hidden pattern.

A screenshot of the visual IQ test OpenAI used to test DALL·E. From https://openai.com/blog/dall-e/

“DALL·E is often able to solve matrices that involve simple patterns or basic geometric reasoning,” the authors write, but it performed better on some problems than others. When the puzzles' colors were inverted, DALL·E did worse, “suggesting its capabilities may be brittle in unexpected ways.”

What does it mean?

What impresses me most about DALL·E is its ability to perform surprisingly well at so many different tasks, including ones the authors didn't even anticipate:

“We found that DALL·E […] is able to perform several kinds of image-to-image translation tasks when prompted in the right way.

We did not anticipate that this capability would emerge, and made no modifications to the neural network or training procedure to encourage it.”

This is surprising, but not entirely unexpected; DALL·E and GPT-3 are two examples of a grand theme in deep learning: that extraordinarily large neural networks trained on unlabeled Internet data (an example of “self-supervised learning”) can be extremely versatile, able to do many things they weren't specifically designed for.

Of course, don't mistake this for general intelligence. It's not hard to trick these types of models into looking pretty dumb. We'll know more when they're openly accessible and we can start playing around with them. But that doesn't mean I can't be excited in the meantime.