Recent advances in the natural language processing capabilities of large language models (LLMs) have generated tremendous excitement about their potential to achieve human-level intelligence. Their ability to produce coherent text and engage in dialogue after training on extensive datasets appears to point to flexible, general-purpose reasoning skills.

However, a growing chorus of voices urges caution against unchecked optimism, highlighting fundamental blind spots that limit the neural approach. LLMs still often make basic logical and mathematical errors that reveal a lack of systematic organization behind their answers. Their knowledge remains intrinsically statistical, without deep semantic structure.

More complex reasoning tasks further expose these limitations. LLMs struggle with causal, counterfactual, and compositional challenges that require going beyond superficial pattern recognition. Unlike humans, who learn abstract schemas and flexibly recombine modular concepts, neural networks memorize correlations between co-occurring terms. The resulting generalization breaks down outside the narrow distribution of the training data.

Khai points out that human cognition uses structured symbolic representations to support systematic compositionality and causal models. We reason by linking modular symbolic concepts according to principles of exact inference: chaining logical dependencies, running mental simulations, and hypothesizing mechanisms that relate variables. The inherently statistical nature of neural networks precludes developing such structural reasoning.

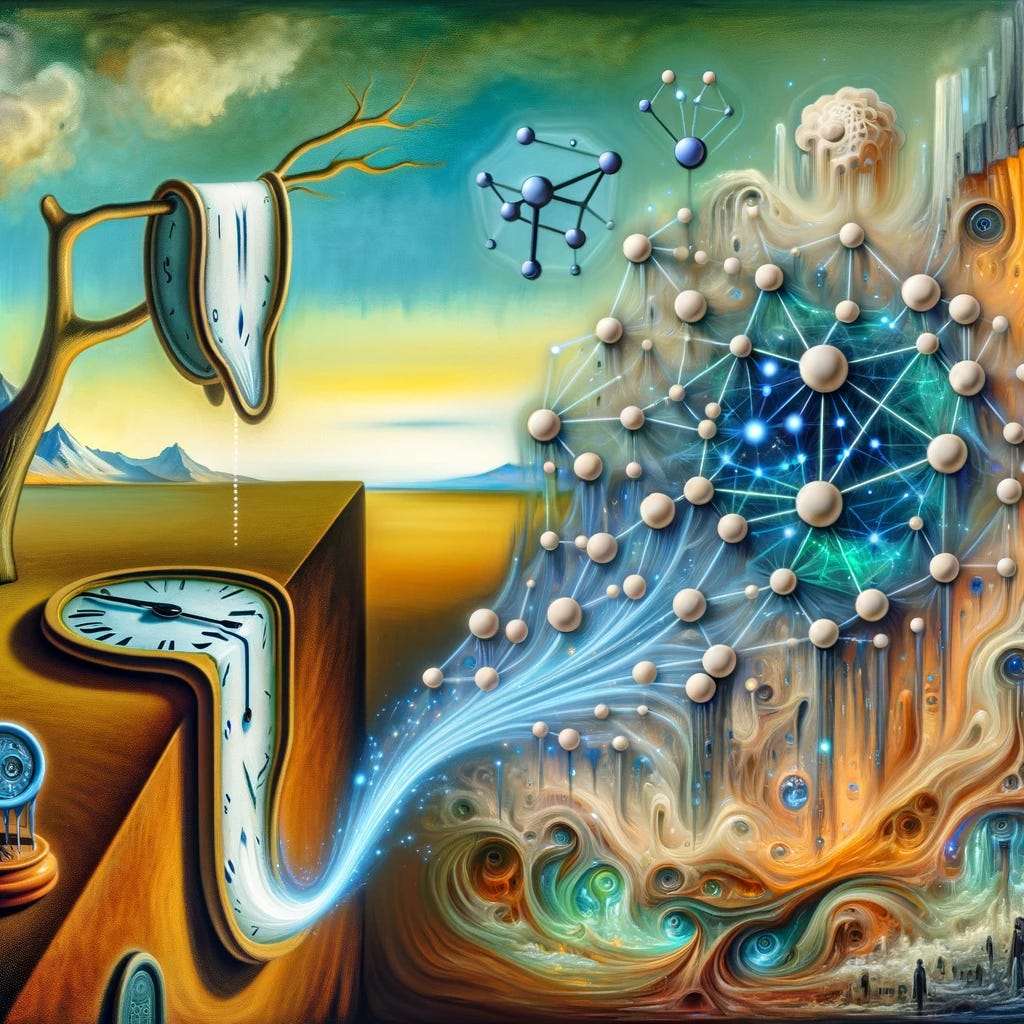

It remains unclear how symbolic-like phenomena emerge in LLMs despite their sub-symbolic substrate. But a clear acknowledgment of this “hybridity gap” is essential. Real progress requires combining complementary strengths, namely the flexibility of neural approaches with structured cognitive representations and causal reasoning techniques, to create coherent reasoning systems.

We first outline a growing body of analyses that expose neural networks’ lack of organization, causal intelligibility, and structural generality, and that highlight how they differ from innate human faculties.

Next, we detail salient aspects of the “reasoning gap,” including struggles with modular skill orchestration, solution dynamics, and response simulation. We show the natural human abilities…