There is a growing need to develop methods capable of efficiently processing and interpreting data from various document formats. This challenge is particularly evident in handling visually rich documents (VrDs) such as business forms, invoices and receipts. These documents, often in PDF or image formats, present a complex interplay of text, layout, and visual elements, requiring sophisticated methods to extract accurate information.

Traditionally, approaches to this problem have relied on two architectural types: transformer-based models inspired by large language models (LLMs) and graph neural networks (GNNs). These architectures play an important role in encoding text, layout, and image features to improve document interpretation. However, they often struggle to represent spatially distant words that are needed to understand complex document sequences. Typical examples are the relationship between a table cell and its header, or text that continues across a line break.

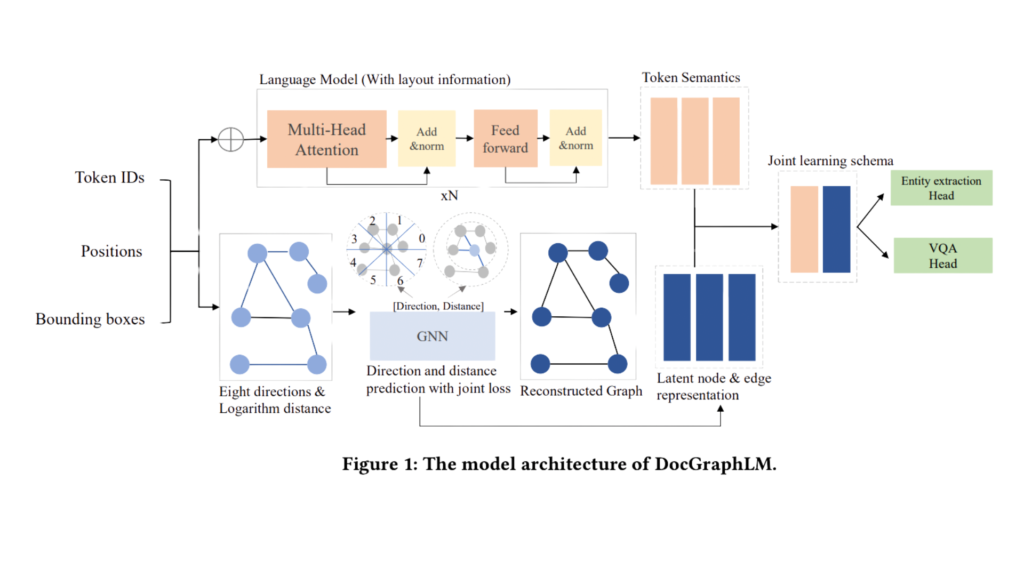

Researchers from JPMorgan AI Research and Dartmouth College (Hanover) have introduced a new framework called DocGraphLM to fill this gap. The framework combines graph semantics with pre-trained language models to overcome the limitations of existing approaches. Its core idea is to pair the strengths of language models with the structural insights provided by GNNs, yielding a more robust document representation. This integration is critical for accurately modeling the complex relationships and structures of visually rich documents.
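To make the idea of combining the two feature sources more concrete, here is a minimal sketch in plain Python. It is not DocGraphLM's actual encoder: it assumes each document segment already has a language-model embedding, that the document graph is given as an adjacency list, that the "GNN" is a single fixed mean-aggregation step, and that fusion is simple concatenation. The names `mean_aggregate` and `fuse` are hypothetical.

```python
# Hypothetical sketch: fuse language-model (LM) segment embeddings with a GNN-style
# neighbourhood summary. Assumptions: LM embeddings are given, the graph is an
# adjacency list, the "GNN" is one fixed mean-aggregation step, fusion is concatenation.
from typing import Dict, List

Vector = List[float]


def mean_aggregate(features: Dict[int, Vector],
                   adjacency: Dict[int, List[int]]) -> Dict[int, Vector]:
    """One round of message passing: average each node with its neighbours."""
    out: Dict[int, Vector] = {}
    for node, vec in features.items():
        group = [vec] + [features[n] for n in adjacency.get(node, [])]
        out[node] = [sum(vals) / len(group) for vals in zip(*group)]
    return out


def fuse(lm_features: Dict[int, Vector],
         adjacency: Dict[int, List[int]]) -> Dict[int, Vector]:
    """Concatenate each node's LM embedding with its aggregated graph embedding."""
    graph_features = mean_aggregate(lm_features, adjacency)
    return {node: lm_features[node] + graph_features[node] for node in lm_features}


# Toy document: node 0 is a table header; nodes 1 and 2 are cells linked to it.
lm = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 3.0]}
edges = {0: [1, 2], 1: [0], 2: [0]}
fused = fuse(lm, edges)
print(fused[1])  # → [0.0, 1.0, 0.5, 0.5]
```

Concatenation keeps the LM features intact while appending a neighbourhood summary, so a table cell's vector also carries information from its linked header. A real model would learn the graph layers rather than use a fixed average.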

Delving deeper into the methodology, DocGraphLM introduces a joint encoder architecture for document representation, along with a novel link prediction approach for reconstructing the document graph. The model predicts both the direction and the distance between nodes in the document graph, using a joint loss function that balances a classification loss (for direction) with a regression loss (for distance). This loss emphasizes restoring near-neighbor relationships while down-weighting distant nodes. The model also applies a logarithmic transformation to normalize distances, so that nodes whose separations fall within the same order of magnitude are treated as semantically equivalent. Together, these choices capture the complex layout of VrDs and address the challenges posed by the spatial distribution of their elements.
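The link-prediction objective described above can be sketched as follows. This is an illustrative approximation, not the paper's exact formulation, and it rests on several assumptions: nodes are represented by bounding-box centres, direction is bucketed into eight 45-degree sectors, distances are log-normalised against an assumed maximum page distance, and near neighbours are up-weighted by `1 - normalised distance`. The helper names and the weighting parameter `lam` are hypothetical.

```python
# Hypothetical sketch of a DocGraphLM-style link-prediction objective.
# Assumptions (not taken from the paper): bounding-box centres as node positions,
# 8 direction sectors, log-normalised distances, (1 - distance) weighting.
import math
from typing import List, Tuple

Point = Tuple[float, float]


def direction_bucket(src: Point, dst: Point, n_buckets: int = 8) -> int:
    """Map the angle from src to dst onto one of n_buckets equal sectors."""
    angle = math.atan2(dst[1] - src[1], dst[0] - src[0]) % (2 * math.pi)
    return int(angle // (2 * math.pi / n_buckets)) % n_buckets


def log_distance(src: Point, dst: Point, max_dist: float) -> float:
    """Log-scale the Euclidean distance into [0, 1]; large gaps get compressed."""
    return math.log1p(math.dist(src, dst)) / math.log1p(max_dist)


def joint_loss(dir_logits: List[float], true_dir: int,
               pred_dist: float, true_dist: float, lam: float = 0.5) -> float:
    """lam * cross-entropy(direction) + (1 - lam) * weighted squared error(distance)."""
    log_z = math.log(sum(math.exp(z) for z in dir_logits))
    ce = log_z - dir_logits[true_dir]
    weight = 1.0 - true_dist  # near neighbours (small distance) count more
    mse = weight * (pred_dist - true_dist) ** 2
    return lam * ce + (1.0 - lam) * mse


# Usage: a header box and a cell box below and to the right of it.
header, cell = (100.0, 50.0), (150.0, 150.0)
true_dir = direction_bucket(header, cell)
true_dist = log_distance(header, cell, max_dist=1000.0)
loss = joint_loss([0.1] * 8, true_dir, pred_dist=0.5, true_dist=true_dist)
print(true_dir, round(true_dist, 3), round(loss, 3))
```

Because of the logarithm, gaps of 100 and 120 pixels, which differ by 20% in raw terms, differ by only about 4% after normalisation, loosely mirroring the idea of treating distances of the same order of magnitude as equivalent.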

DocGraphLM's results are notable. When tested on standard datasets such as FUNSD, CORD, and DocVQA, the model consistently improved performance on information extraction and question answering tasks, outperforming existing models that rely solely on language model features or solely on graph features. Interestingly, adding graph features not only increased the model's accuracy but also accelerated learning during training. This faster convergence suggests that the graph features help the model focus on the most relevant document features, so it learns to extract information more quickly and accurately.

DocGraphLM represents a significant step forward in document understanding. Its approach of combining graph semantics with pre-trained language models addresses the complex challenge of extracting information from visually rich documents. The framework improves both accuracy and learning efficiency, and its ability to interpret complex document structures opens up new possibilities for efficient data extraction and analysis.


Mohammad Athar Ganai, a consulting intern at MarkTechPost, advocates for efficient deep learning, with a focus on sparse training. Pursuing an M.Sc. in Electrical Engineering with a specialization in Software Engineering, he combines advanced technical knowledge with practical applications. His current effort is his paper "Improving Performance in Deep Reinforcement Learning," which demonstrates his commitment to expanding the capabilities of AI. Athar's work stands at the intersection of sparse training in DNNs and deep reinforcement learning.