This post was written by Anusua Trivedi, Senior Data Scientist at Microsoft.

This post builds on our earlier MRC blog post, where we discussed how Machine Reading Comprehension (MRC) can help us “transfer learn” any text. In this post, we introduce the concept of, and the need for, machine reading at scale, and transfer learning on large text corpora.

Introduction

Machine reading to answer questions has become an important test for evaluating how well computer systems understand human language. It is also proving to be an important technology for applications such as search engines and dialog systems. The research community has recently built large-scale datasets on textual sources including:

- Wikipedia (WikiReading, SQuAD, WikiHop).

- News and newsworthy articles (CNN/Daily Mail, NewsQA, RACE).

- Fictional stories (MCTest, CBT, NarrativeQA).

- Common web sources (MS MARCO, TriviaQA, SearchQA).

These new datasets, in turn, have inspired a wider array of new question-answering systems.

In our MRC blog post, we trained and tested different MRC algorithms on these large datasets. Using these pre-trained MRC algorithms, we were able to successfully transfer learn on small texts. However, when we tried to build a QA system for the Gutenberg book corpus (English only) using these pre-trained MRC models, the algorithms failed. MRC usually works on text excerpts or single documents but fails on large text corpora. This leads us to a new concept: Machine Reading at Scale (MRS). Building machines that can perform machine reading comprehension at scale would be of great interest to enterprises.

Machine Reading at Scale (MRS)

Instead of focusing only on short text passages, Danqi Chen et al. tackled a much larger problem: machine reading at scale. To read Wikipedia and answer open-domain questions, they combined a search component based on bigram hashing and TF-IDF matching with a multilayer recurrent neural network model trained to detect answers in Wikipedia paragraphs.
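To make the retrieval side concrete, here is a minimal, standard-library sketch of bigram hashing with TF-IDF ranking. It is not DrQA's code: the DrQA paper describes hashing bigrams into 2^24 bins with an unsigned murmur3 hash, whereas this sketch uses `zlib.crc32` (a stable hash available in the standard library) and a common smoothed IDF. The class name `HashedTfidfRetriever` and the weighting details are illustrative assumptions.

```python
import math
import re
import zlib
from collections import Counter

NUM_BUCKETS = 2 ** 24  # hashed feature space; large enough that collisions are rare


def ngram_buckets(text, num_buckets=NUM_BUCKETS):
    """Count unigram and bigram features, each hashed into a fixed bucket id."""
    tokens = re.findall(r"\w+", text.lower())
    ngrams = tokens + [f"{a} {b}" for a, b in zip(tokens, tokens[1:])]
    # zlib.crc32 is stable across runs (Python's built-in hash() is salted per process).
    return Counter(zlib.crc32(g.encode("utf-8")) % num_buckets for g in ngrams)


class HashedTfidfRetriever:
    """Hypothetical retriever: ranks documents by TF-IDF over hashed n-grams."""

    def __init__(self, docs):
        # docs: mapping of document id -> document text
        self.doc_ids = list(docs)
        counts = [ngram_buckets(docs[d]) for d in self.doc_ids]
        n = len(counts)
        df = Counter(b for c in counts for b in c)  # document frequency per bucket
        self.idf = {b: math.log((1 + n) / (1 + f)) + 1.0 for b, f in df.items()}
        self.vectors = [self._weigh(c) for c in counts]

    def _weigh(self, counts):
        # Log-scaled term frequency times IDF; buckets unseen in the corpus get 0.
        return {b: math.log1p(tf) * self.idf.get(b, 0.0) for b, tf in counts.items()}

    def top_k(self, query, k=3):
        """Return the ids of the k documents with the highest dot-product score."""
        q = self._weigh(ngram_buckets(query))
        scored = [(sum(w * vec.get(b, 0.0) for b, w in q.items()), doc_id)
                  for doc_id, vec in zip(self.doc_ids, self.vectors)]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [doc_id for _, doc_id in scored[:k]]
```

Hashing means the retriever never stores a vocabulary, which is what lets this style of index scale to millions of documents; the price is occasional collisions between unrelated n-grams.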

MRC is about answering a question about a given context paragraph. MRC algorithms typically assume that a short piece of relevant text has already been identified and fed to the model, which is not realistic for building an open-domain QA system.

In contrast, methods based on document retrieval treat search as an integral part of the solution.

MRS strikes a balance between the two approaches. It keeps the challenge of machine comprehension, which requires a deep understanding of text, while also keeping the realistic constraint of searching over a large open resource.

Why is MRS important for enterprises?

The adoption of enterprise chatbots has been increasing rapidly in recent times. To advance these scenarios, research and industry have turned to conversational AI approaches, particularly in use cases such as banking, insurance and telecommunications, which involve large volumes of text logs.

A major challenge for conversational AI is to understand complex sentences of human speech the way humans do. The challenge becomes harder when we need to do this over large amounts of text. MRS can address both of these concerns, because it can answer objective (factoid) questions over a large corpus with high accuracy. Such an approach can be used in real-world applications such as customer service.

In this post, we review the MRS approach as a way to provide automated QA capability in large organizations.

Training MRS – DrQA model

DrQA is a reading comprehension system applied to open-domain question answering. DrQA specifically targets the task of machine reading at scale. In this setting, we are looking for an answer to a question in a potentially very large corpus of unstructured documents (which may not be redundant). Thus, the system must combine the challenges of document retrieval (finding the relevant documents) with those of machine comprehension of text (identifying the answers in those documents).

As the compute environment, we use a Deep Learning Virtual Machine (DLVM) with two NVIDIA Tesla P100 GPUs and the CUDA and cuDNN libraries. The DLVM is a specially configured variant of the Data Science Virtual Machine (DSVM) that makes it easier to use GPU-based VM instances for training deep learning models. It is supported on Windows 2016 and the Ubuntu Data Science Virtual Machine. It shares the same core VM images, and therefore the same rich toolset, as the DSVM, but is configured to make deep learning easier. All experiments were run on a Linux DLVM. We use PyTorch to build the models, and we installed all dependencies in the DLVM environment.

For this work, we forked the Facebook Research DrQA repository on GitHub and trained the DrQA model on the SQuAD dataset. We then use the pre-trained MRS model to evaluate our large Gutenberg corpus using transfer learning techniques.
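The reader is trained on SQuAD, where each answer is given as a character offset into a context paragraph. The sketch below flattens a parsed SQuAD v1.1-style JSON file into training examples. The function names are hypothetical; DrQA's repository has its own preprocessing step, which also tokenizes the text and maps these character offsets to token positions.

```python
import json


def load_squad(path):
    """Parse a SQuAD v1.1-style JSON file (e.g. a local copy of train-v1.1.json)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def iter_squad_examples(squad):
    """Yield (qid, question, context, answer_text, char_start, char_end) tuples."""
    for article in squad["data"]:
        for paragraph in article["paragraphs"]:
            context = paragraph["context"]
            for qa in paragraph["qas"]:
                if not qa["answers"]:
                    continue
                # Training questions carry one answer; keep the first one otherwise.
                answer = qa["answers"][0]
                start = answer["answer_start"]
                end = start + len(answer["text"])  # exclusive character offset
                # Skip any example whose offset does not line up with its text.
                if context[start:end] != answer["text"]:
                    continue
                yield qa["id"], qa["question"], context, answer["text"], start, end
```

For example, `sum(1 for _ in iter_squad_examples(load_squad("train-v1.1.json")))` counts the usable training questions in a local copy of the training file.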

Children's Gutenberg Corpus

We created a Gutenberg corpus of about 36,000 English books. We then created a subset of the Gutenberg corpus consisting of 528 children's books.

Preprocessing the children's Gutenberg dataset:

- Download books using filters (e.g., children, fairy tales).

- Clean the downloaded books.

- Extract the text data from the book content.
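The three preprocessing steps can be sketched in plain Python. This assumes the books are plain-text Project Gutenberg files whose body sits between the usual `*** START OF ... PROJECT GUTENBERG EBOOK ...` and `*** END OF ...` marker lines, and that subject labels come from catalog metadata. The keyword list and function names are illustrative assumptions, not the exact filters used for this corpus.

```python
import re

# Illustrative subject keywords for the children's subset (hypothetical list).
CHILDREN_KEYWORDS = ("children", "juvenile", "fairy tales")

START_RE = re.compile(
    r"^\*\*\*\s*START OF (?:THIS|THE) PROJECT GUTENBERG EBOOK.*$",
    re.IGNORECASE | re.MULTILINE)
END_RE = re.compile(
    r"^\*\*\*\s*END OF (?:THIS|THE) PROJECT GUTENBERG EBOOK.*$",
    re.IGNORECASE | re.MULTILINE)


def is_childrens_book(subjects):
    """Step 1 filter: keep a book if any subject label mentions a keyword."""
    return any(k in s.lower() for s in subjects for k in CHILDREN_KEYWORDS)


def strip_gutenberg_boilerplate(raw):
    """Step 2: normalize line endings and drop the header/footer around the body."""
    text = raw.replace("\r\n", "\n")
    start = START_RE.search(text)
    end = END_RE.search(text)
    body_start = start.end() if start else 0
    body_end = end.start() if end else len(text)
    return text[body_start:body_end]


def extract_paragraphs(body):
    """Step 3: split on blank lines and unwrap hard-wrapped lines."""
    paragraphs = []
    for block in re.split(r"\n\s*\n", body):
        text = " ".join(block.split())  # joins wrapped lines, collapses whitespace
        if text:
            paragraphs.append(text)
    return paragraphs
```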

How do we create a custom corpus for DrQA?

We follow the instructions available here to create a DrQA-compatible document collection from the Gutenberg children's books.
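DrQA's document retriever reads custom corpora as JSON lines, one document per line with an `id` and a `text` field. A minimal sketch of producing such a file from cleaned books follows. Treating each book as one document, and joining its paragraphs with blank lines, are assumptions made for illustration; the helper names are hypothetical.

```python
import json


def to_drqa_jsonl_lines(books):
    """books: mapping of document id -> list of cleaned paragraphs.
    Yields one JSON-encoded document per line."""
    for doc_id, paragraphs in books.items():
        record = {"id": doc_id, "text": "\n\n".join(paragraphs)}
        yield json.dumps(record, ensure_ascii=False) + "\n"


def write_drqa_jsonl(books, path):
    """Write all documents to a single UTF-8 JSON-lines file."""
    with open(path, "w", encoding="utf-8") as f:
        f.writelines(to_drqa_jsonl_lines(books))
```

DrQA's retriever documentation then describes building a SQLite document store and a TF-IDF index from a directory of such files with `scripts/retriever/build_db.py` and `scripts/retriever/build_tfidf.py`; check the repository for the exact arguments.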

To execute the DrQA model:

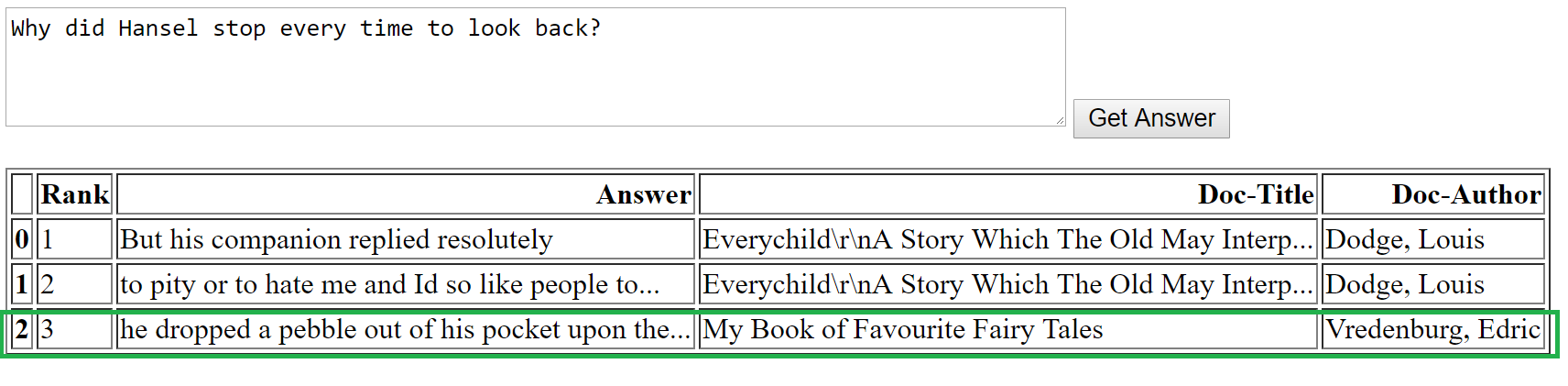

- Enter a query in the UI and click the Search button.

- This calls a demo server (a Flask server running in the backend).

- The demo code starts the DrQA pipeline.

- The components of the DrQA pipeline are explained here.

- The question is tokenized.

- Based on the tokenized question, the document retriever uses bigram hashing + TF-IDF matching to find the best-matching documents.

- We retrieve the top 3 matching documents.

- A document reader (a multilayer RNN) is then invoked to extract answers from the retrieved documents.

- We use a model pre-trained on the SQuAD dataset.

- We perform transfer learning on the children's Gutenberg dataset. You can download the pre-processed Gutenberg Children's Book Corpus for the DrQA model here.

- The model's embedding layer is initialized with pre-trained Stanford GloVe embedding vectors.

- The model returns the most likely answer span from each of the top 3 documents.

- We can significantly speed up inference by running this model data-parallel across multiple GPUs.
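The “most likely answer span” step can be illustrated in isolation. The DrQA paper describes choosing the span from token i to token j, with i ≤ j ≤ i + 15, that maximizes P_start(i) × P_end(j). The sketch below applies that rule to two plain lists of probabilities, which stand in for the reader network's outputs; the function name is hypothetical and the double loop favors clarity over speed.

```python
from typing import Sequence, Tuple


def best_span(p_start: Sequence[float], p_end: Sequence[float],
              max_len: int = 15) -> Tuple[int, int, float]:
    """Return (start, end, score) maximizing p_start[i] * p_end[j]
    subject to i <= j <= i + max_len (token indices, end inclusive)."""
    best = (0, 0, float("-inf"))
    for i, ps in enumerate(p_start):
        # Only consider end positions within max_len tokens of the start.
        for j in range(i, min(i + max_len + 1, len(p_end))):
            score = ps * p_end[j]
            if score > best[2]:
                best = (i, j, score)
    return best
```

For example, `best_span([0.1, 0.6, 0.3], [0.2, 0.1, 0.7])` picks tokens 1 to 2 with a score of about 0.42. The length cap keeps the reader from returning implausibly long answers.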

The pipeline returns a list of the most likely answers from the three best-matching documents.
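The overall flow (retrieve the top documents, read each one, then rank the per-document answers) can be sketched with the retriever and reader passed in as plain functions. The signatures and names here are hypothetical stand-ins, not DrQA's pipeline API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical signatures: retrieve(question, k) -> doc ids;
# read(question, doc_id) -> (answer span, score).
Retrieve = Callable[[str, int], List[str]]
Read = Callable[[str, str], Tuple[str, float]]


def answer_question(question: str, retrieve: Retrieve, read: Read,
                    n_docs: int = 3) -> List[Dict[str, object]]:
    """Return one candidate answer per retrieved document, best score first."""
    candidates = []
    for doc_id in retrieve(question, n_docs):
        span, score = read(question, doc_id)
        candidates.append({"doc_id": doc_id, "span": span, "score": score})
    return sorted(candidates, key=lambda c: c["score"], reverse=True)
```

Keeping the two stages behind simple function boundaries makes it easy to swap in a different retriever or reader, or to change `n_docs`, without touching the rest of the flow.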

We then run the interactive pipeline with this trained DrQA model on the Gutenberg Children's Book Corpus.

For environment setup, please follow the ReadMe.md on GitHub to download the code and install the dependencies. For all code and related details, please refer to our GitHub repository here.

MRS using DLVM

Please follow the similar steps listed here to test the DrQA model on the DLVM.

What we learned from our evaluation

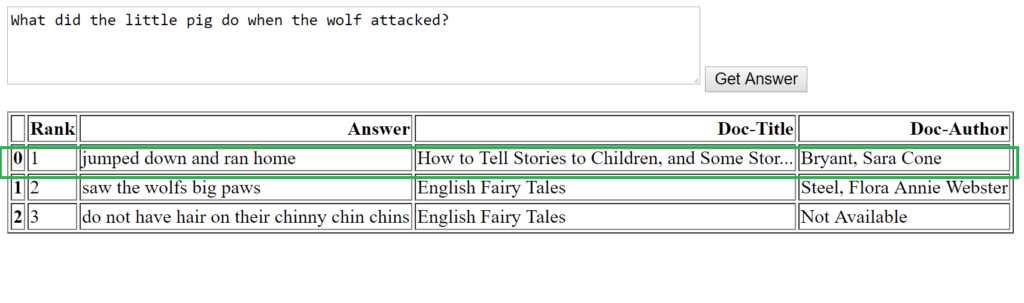

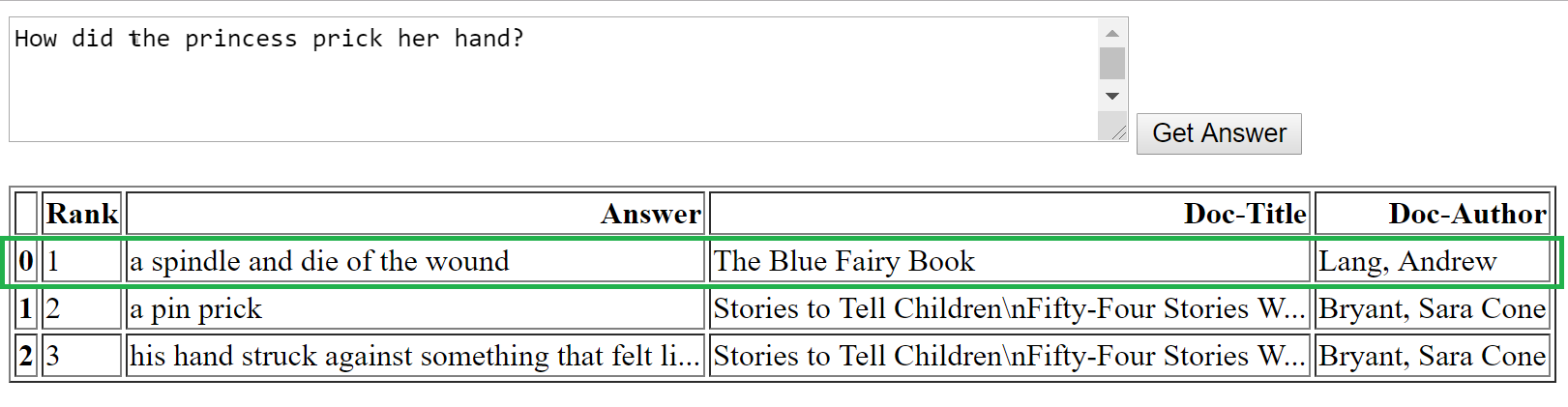

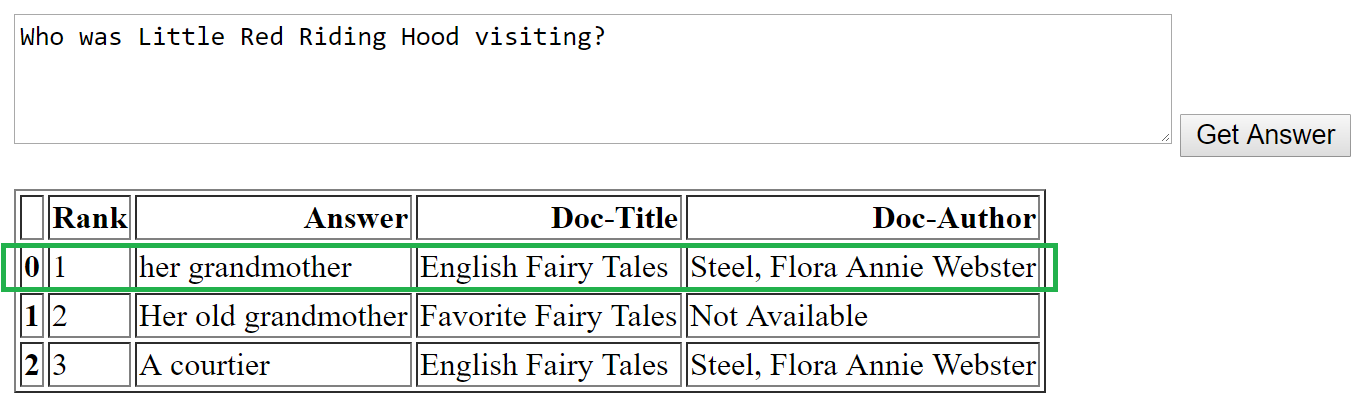

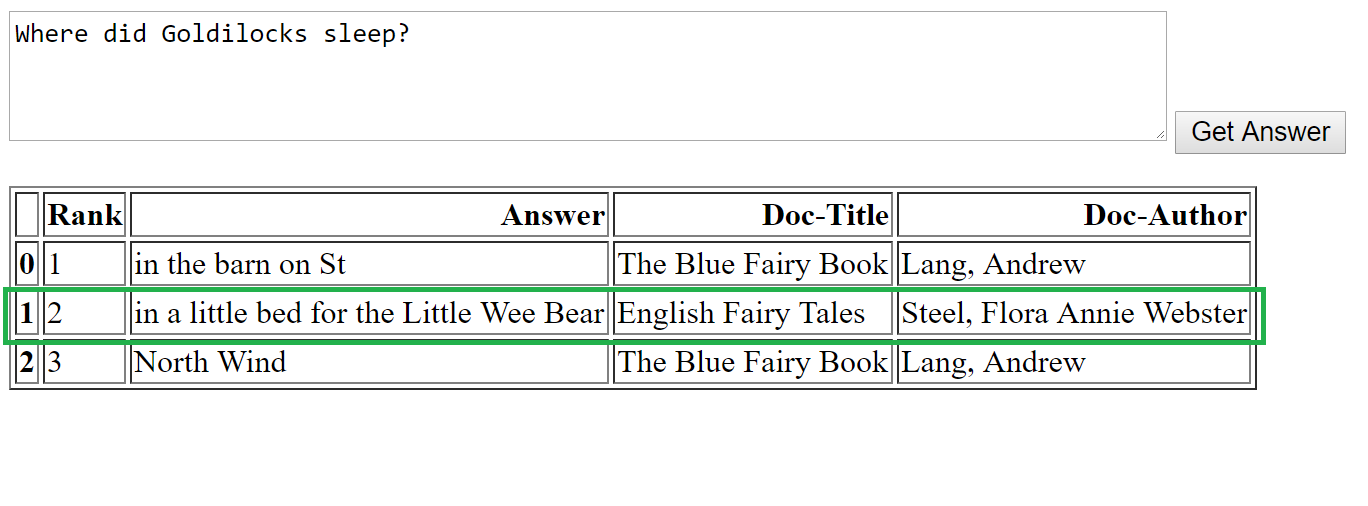

In this post, we investigated the performance of the MRS model on our custom dataset. We tested a transfer learning approach for building a QA system over approximately 528 children's books from the Project Gutenberg corpus, using a pre-trained DrQA model. Our evaluation results are captured in the exhibits in this section and the description that follows. Note that these results are specific to our evaluation scenario; results will differ for other documents or scenarios.

In the examples above, we tried questions starting with what, how, who, where and why, and one important aspect of MRS is worth noting:

- MRS works best for “factoid” questions. Factoid questions are about providing concise facts, for example “Who is the Headmaster of Hogwarts?” or “What is the population of Mars?”. Thus, for the what, who and where questions above, MRS works well.

- For non-factoid questions (e.g., why), MRS does not work very well.

The green box marks the correct answer to each question. As we see here, for factoid questions, the answer selected by the MRS model matches the correct answer. For the non-factoid “why” question, however, the correct answer is ranked third, and it is the only one of the returned answers that makes any sense.
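To compare question types more systematically than by eye, one could record, for each test question, the ranked answers and the expected answer, then compute how often the correct answer is ranked first or appears in the top three. A minimal sketch follows; the names are hypothetical, and the case-insensitive exact match is a simplification (SQuAD's official evaluation script also normalizes punctuation and articles).

```python
import re
from collections import defaultdict

WH_WORDS = {"what", "how", "who", "where", "why", "when", "which"}


def question_type(question):
    """Bucket a question by its first word, e.g. 'who' or 'why'."""
    match = re.match(r"\s*(\w+)", question)
    word = match.group(1).lower() if match else ""
    return word if word in WH_WORDS else "other"


def hit_rates(records, ks=(1, 3)):
    """records: iterable of (question, ranked_answers, gold_answer).
    Returns {question_type: {k: fraction of questions with gold in top k}}."""
    totals = defaultdict(int)
    hits = defaultdict(lambda: defaultdict(int))
    for question, ranked, gold in records:
        qtype = question_type(question)
        totals[qtype] += 1
        ranked_norm = [a.strip().lower() for a in ranked]
        for k in ks:
            if gold.strip().lower() in ranked_norm[:k]:
                hits[qtype][k] += 1
    return {q: {k: hits[q][k] / totals[q] for k in ks} for q in totals}
```

A gap between the top-1 and top-3 rates for a question type is exactly the pattern described for the “why” question: the right answer is retrieved but not ranked first.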

Overall, our evaluation shows that for a typical large document corpus, the DrQA model does a good job of answering factoid questions.

Anusua

@anurive | Email Anusua at antriv@microsoft.com with queries related to this post.