Generative Large Language Models (LLMs) are known for their strong performance across a variety of tasks, including complex natural language processing (NLP), creative writing, question answering, and code generation. Recently, LLMs have been run on accessible local systems, including home PCs with consumer-grade GPUs, for better data privacy, customizable models, and lower inference costs. Local deployments prioritize low latency over high throughput. However, LLMs are difficult to run on consumer-grade GPUs because of their high memory requirements.

These models, which are typically autoregressive transformers, generate text token by token and, for each token, require access to the full model, which can have hundreds of billions of parameters. This limitation is especially noticeable in local deployments because handling individual requests leaves less room for parallel processing. Two existing strategies for dealing with these memory problems are offloading and model compression.
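
To make the memory gap concrete, here is a rough back-of-the-envelope sketch. It assumes a 175-billion-parameter model (the size implied by the name OPT-175B) and a 24 GB consumer GPU such as the RTX 4090, and it counts only the weights, ignoring the KV cache and activations. The helper `weight_memory_gb` is a hypothetical name used purely for illustration.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold the weights, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 175e9     # assumption: parameter count of a 175B model
GPU_MEMORY_GB = 24     # assumption: VRAM of a single RTX 4090

# Compare a few common weight precisions against the GPU's capacity.
for label, bytes_per_param in [("FP16", 2), ("INT8", 1), ("INT4", 0.5)]:
    need = weight_memory_gb(NUM_PARAMS, bytes_per_param)
    ratio = need / GPU_MEMORY_GB
    print(f"{label}: {need:.1f} GB of weights, {ratio:.1f}x the GPU's memory")
```

Even with aggressive compression, the weights alone exceed the GPU's memory several times over, which is why part of the model has to live somewhere else.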

In a recent study, a team of researchers presented PowerInfer, an efficient LLM inference system designed for local deployments using a single consumer-grade GPU. PowerInfer reduces the need for expensive PCIe (Peripheral Component Interconnect Express) data transfers by selecting hot-activated neurons offline and preloading them onto the GPU, and by using online predictors to identify active neurons at runtime.

The basic idea behind PowerInfer's design is to exploit the high locality inherent in LLM inference, which is characterized by a power-law distribution in neuron activation. This distribution means that a small fraction of hot neurons are consistently activated across different inputs, while the majority, the cold neurons, activate only for specific inputs.
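
The effect of a power-law distribution can be illustrated with synthetic numbers. The sketch below is not based on measurements from any real model: it assumes a Zipf-like profile in which the neuron at rank r fires in proportion to 1/r, and compares it with a uniform profile. The function `hot_fraction` is a hypothetical helper.

```python
def hot_fraction(freqs, coverage=0.8):
    """Smallest fraction of neurons (most active first) that accounts
    for `coverage` of all activations."""
    ranked = sorted(freqs, reverse=True)
    target = coverage * sum(ranked)
    running = 0.0
    for count, freq in enumerate(ranked, start=1):
        running += freq
        if running >= target:
            return count / len(ranked)
    return 1.0


NUM_NEURONS = 10_000

# Assumption: synthetic Zipf-like activation counts, rank r fires ~ 1/r.
zipf_freqs = [1.0 / rank for rank in range(1, NUM_NEURONS + 1)]
# Baseline: every neuron fires equally often.
uniform_freqs = [1.0] * NUM_NEURONS

print(f"power-law: {hot_fraction(zipf_freqs):.1%} of neurons cover 80% of activations")
print(f"uniform:   {hot_fraction(uniform_freqs):.1%} of neurons cover 80% of activations")
```

Under the skewed profile, a small minority of neurons covers most activations, whereas the uniform profile needs 80% of the neurons for the same coverage. That skew is what makes keeping only the hot neurons on the GPU worthwhile.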

According to the team, PowerInfer is a GPU-CPU hybrid inference engine built on this insight. It preloads hot-activated neurons onto the GPU for fast access and computes cold-activated neurons on the CPU. Dividing the workload this way greatly reduces the GPU's memory requirements and the amount of data transferred between the CPU and GPU.
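
One simple way to picture the hot/cold split is a greedy placement: rank neurons by how often they activate and fill the GPU's memory budget with the hottest ones. The sketch below is only an illustration under that assumption and is not necessarily how PowerInfer decides placement; `partition_neurons` and all the numbers are hypothetical.

```python
def partition_neurons(activation_freq, bytes_per_neuron, gpu_budget_bytes):
    """Greedy split: the most frequently activated neurons go to the GPU
    until the memory budget is used up; the rest stay on the CPU."""
    order = sorted(range(len(activation_freq)),
                   key=lambda i: activation_freq[i], reverse=True)
    capacity = gpu_budget_bytes // bytes_per_neuron  # neurons that fit on the GPU
    gpu_ids = set(order[:capacity])
    cpu_ids = set(order[capacity:])
    return gpu_ids, cpu_ids


# Hypothetical profile: how often each of five neurons fired during profiling.
freqs = [0.90, 0.10, 0.80, 0.05, 0.02]
gpu_ids, cpu_ids = partition_neurons(freqs, bytes_per_neuron=100, gpu_budget_bytes=250)

# Share of all activations that the GPU-resident neurons account for.
gpu_share = sum(freqs[i] for i in gpu_ids) / sum(freqs)
print(sorted(gpu_ids), sorted(cpu_ids), f"{gpu_share:.0%}")
```

In this toy profile, two of the five neurons fit on the GPU yet account for roughly nine-tenths of all activations, so most of the work stays on the fast device while only 40% of the weights occupy its memory.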

PowerInfer integrates neuron-aware sparse operators and adaptive predictors to further improve performance. Neuron-aware sparse operators work directly on individual neurons, eliminating the need to operate on entire matrices, while adaptive predictors help identify active neurons at runtime. Together, these optimizations improve how efficiently the system exploits activation sparsity.
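
The idea of a neuron-aware operator can be sketched in a few lines. The example assumes a ReLU-style activation, so that an inactive neuron contributes exactly zero and can be skipped without changing the result. The set `predicted_active` stands in for a predictor's output and is supplied by hand here; all function names are hypothetical and this is not PowerInfer's actual operator code.

```python
def relu(value):
    return value if value > 0 else 0.0


def dot(row, x):
    return sum(w * xi for w, xi in zip(row, x))


def dense_layer(weights, x):
    """Baseline: compute every neuron (every row of the weight matrix)."""
    return [relu(dot(row, x)) for row in weights]


def sparse_layer(weights, x, predicted_active):
    """Neuron-aware version: compute only the rows predicted to be active.
    Skipped neurons are assumed to output zero after ReLU."""
    out = [0.0] * len(weights)
    for i in predicted_active:
        out[i] = relu(dot(weights[i], x))
    return out


weights = [[1.0, 0.0], [-1.0, 0.0], [0.0, 2.0], [0.0, -3.0]]
x = [1.0, 1.0]
predicted_active = {0, 2}  # assumption: a perfect predictor for this input

print(dense_layer(weights, x))
print(sparse_layer(weights, x, predicted_active))
```

When the prediction is correct, both versions produce the same output, but the sparse version touches only half of the rows. If the predictor misses an active neuron, that neuron's output is silently treated as zero, which is why predictor accuracy matters for preserving model quality.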

The team evaluated PowerInfer and reported an average generation rate of 13.20 tokens per second and a peak of 29.08 tokens per second. These results were obtained on a single NVIDIA RTX 4090 GPU across multiple LLMs, including the OPT-175B model. This performance is only 18 percent lower than that of a top-tier server-grade A100 GPU, which shows the effectiveness of PowerInfer on mainstream hardware.

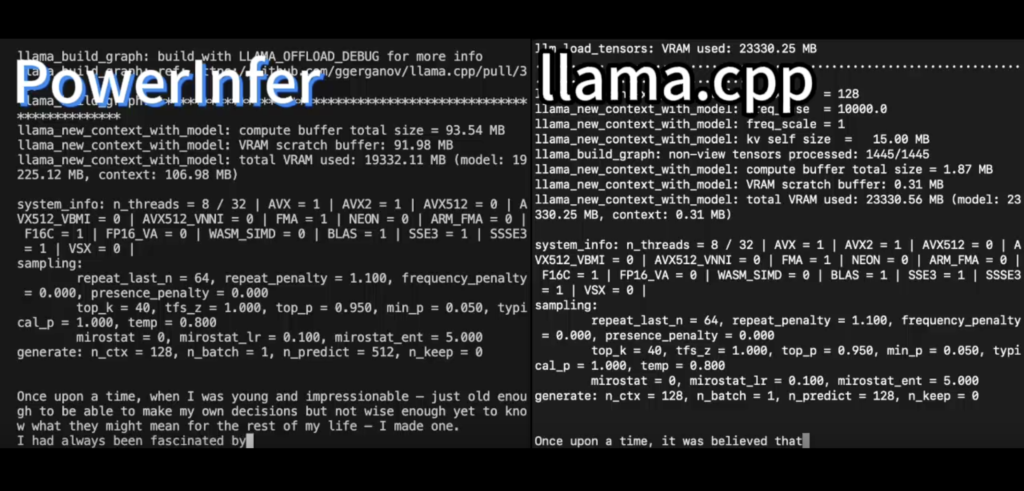

In the evaluation, PowerInfer also ran up to 11.69 times faster than the existing llama.cpp system while maintaining model accuracy. Overall, PowerInfer offers a significant increase in LLM inference speed, demonstrating its potential as a solution for running advanced language models on desktop PCs with limited GPU capabilities.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum and Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a data science enthusiast with good analytical and critical thinking skills, with a keen interest in acquiring new skills, leading teams and managing work systematically.