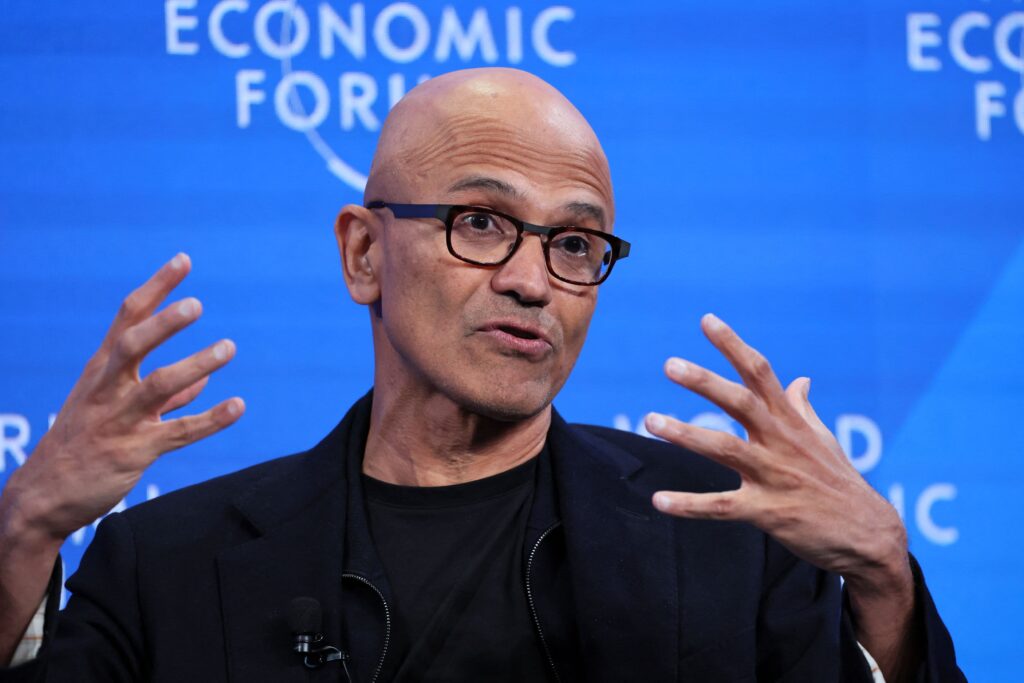

Microsoft Corporation Executive Chairman and CEO Satya Nadella attends a session during the 54th annual meeting of the World Economic Forum on January 16, 2024 in Davos, Switzerland.

Dennis Balibos Reuters

Microsoft CEO Satya Nadella said Tuesday that he sees a global consensus emerging on artificial intelligence and that, while regulatory approaches to the technology may vary from jurisdiction to jurisdiction, countries are talking about AI in similar ways.

Speaking at the World Economic Forum in Davos, Switzerland, Nadella said he felt there was a need for global coordination on AI and to agree on a set of standards and appropriate checkpoints for the technology.

“I think [a global regulatory approach to AI is] very desirable, because I think we are now at a point where these are global challenges that require global standards,” Nadella said in a conversation with WEF Chair Klaus Schwab.

“Otherwise, it will be very difficult to control, it will be difficult to implement, and even on some of the basic research that is needed, it will be difficult to move the needle cleanly,” Nadella added. “But that said, I must say that there seems to be a broad consensus emerging.”

Microsoft is a major player in the race toward AI among major U.S. technology companies. The Redmond, Washington-based technology giant has invested billions in OpenAI, which is behind popular AI chatbot ChatGPT.

The company first invested in OpenAI in 2019, contributing $1 billion in cash. The company then grabbed headlines last year, when it reportedly poured another $10 billion into OpenAI, bringing its total investment to a reported $13 billion.

Microsoft has also integrated some OpenAI technology into its Office, Bing and Windows products, and provides OpenAI with its Azure cloud computing tools.

Countries are pushing for consensus on laws governing AI, in response to concerns that the technology could put millions of people out of work and disrupt elections, among other things.

Last year, at an AI safety summit in the UK, world leaders agreed on a landmark declaration to come together on global standards and frameworks to develop AI safely.

“If I had to sum up the state of play, the way I think we’re all talking about it is that it’s clear that, when it comes to large language models, we have real, hard assessments and red teaming and safety before we launch anything new,” Nadella said. Red teaming is a term that describes testing for AI vulnerabilities.

“And then when it comes to applications, we have to have a risk-based assessment of how to deploy that technology.”

Nadella said he’s not sure whether a global agency to regulate AI is feasible, but added that he sees countries talking in similar terms about applying safeguards to AI.

“If you’re deploying it in healthcare, you should apply healthcare [regulations] to AI; if you are deploying it in financial services, you should define financial risks or safeguards,” he said.

Nadella added: “So I think that, if we take something as simple as that to build some consensus and principles, I think we can come together. So I’m optimistic.”