As computer vision researchers, we believe that every pixel can tell a story. However, writer's block seems to set in when it comes to dealing with large images. Large images are no longer rare: the cameras we carry in our pockets and those orbiting our planet capture images so large and detailed that they stretch our current best models and hardware to their breaking points. Typically, we face a quadratic increase in memory usage as a function of image size.

Today, we make one of two suboptimal choices when handling large images: downsampling or cropping. Both methods lose a significant amount of the information and context contained in the image. We take another look at these approaches and introduce $x$T, a new framework for modeling large images end-to-end on contemporary GPUs while effectively combining global context with local details.

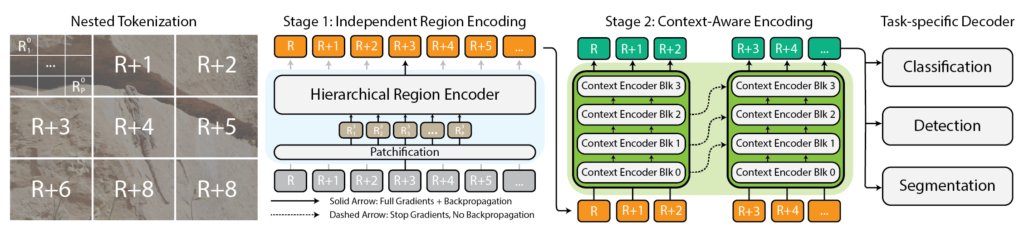

Architecture for the $x$T framework.

Why bother with big images anyway?

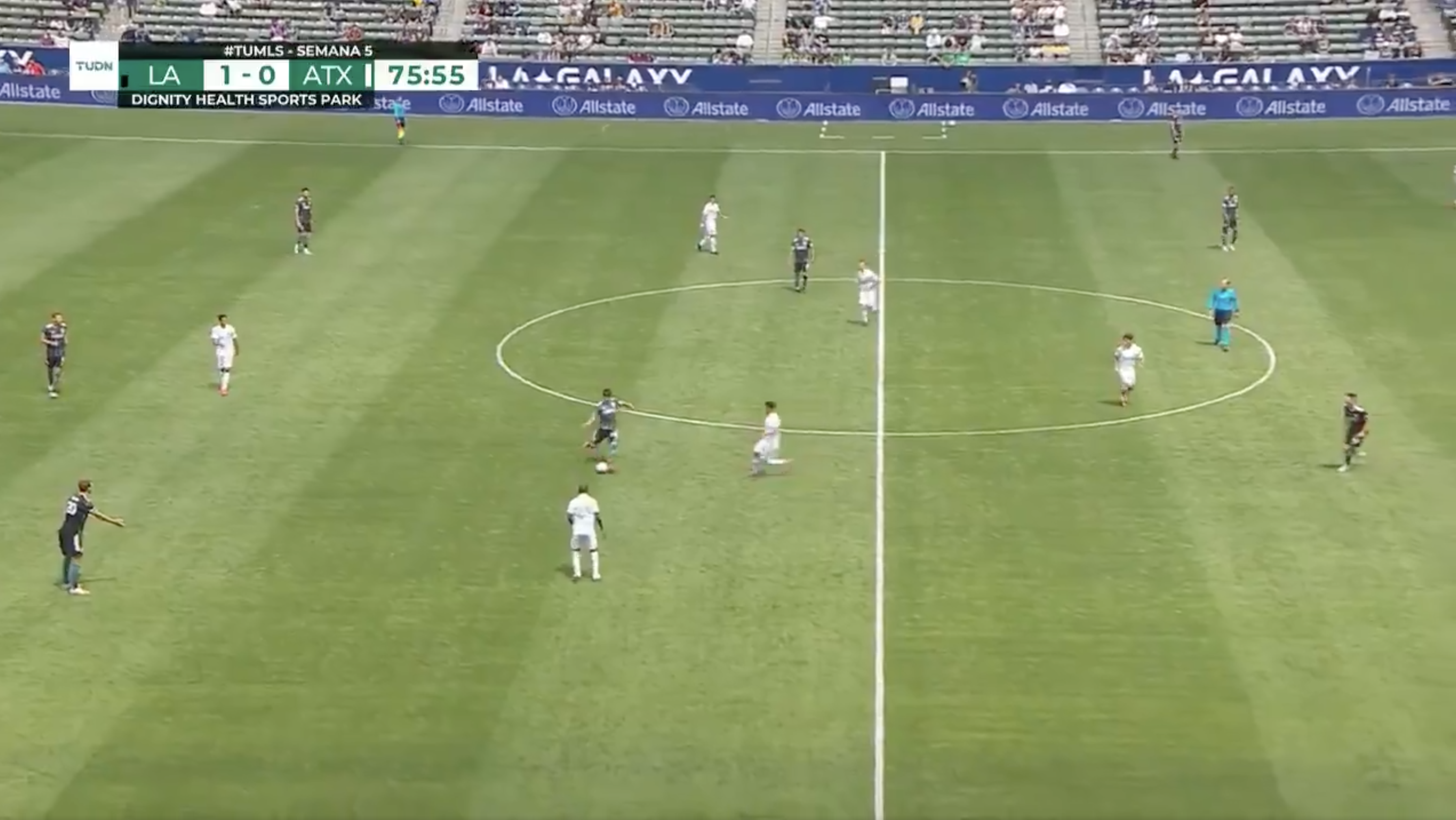

Why bother handling large images anyway? Picture yourself in front of your TV, watching your favorite football team. The field is dotted with players, and the action takes place on only a small part of the screen at a time. Would you be satisfied if you could only see the small area around the ball? Alternatively, would you be satisfied with watching the game in low resolution? Every pixel tells a story, no matter how far apart the pixels are. This is true in all domains, from your TV screen to a pathologist viewing a gigapixel slide to diagnose a small patch of cancer. These images hold a wealth of information. If we can't fully explore that wealth because our tools can't handle the map, what's the point?

The game is fun when you know what's going on.

That is exactly where the frustration is today. The larger the image, the more we need to simultaneously zoom out to see the whole image and zoom in for the finer details, making it a challenge to capture both the forest and the trees at the same time. Most current methods force a choice between losing sight of the forest or missing the trees, and neither option is good.

How $x$T tries to fix it.

Imagine trying to solve a giant jigsaw puzzle. Instead of solving the whole thing at once, which is overwhelming, you start with small parts, take a good look at each piece, and then figure out how they fit into the bigger picture. This is essentially what we do with large images with $x$T.

$x$T takes these huge pictures and hierarchically cuts them into smaller, more digestible chunks. It's not just about making things smaller, though. It's about understanding each piece on its own and then, using some clever techniques, figuring out how these pieces connect to the larger scale. It's like interacting with each part of a picture, learning its story, and then sharing those stories with other parts to get a complete narrative.

Nested tokenization

At the core of $x$T is the concept of nested tokenization. Simply put, tokenization in computer vision is like cutting an image into pieces (tokens) that a model can digest and analyze. However, $x$T takes this a step further by introducing a hierarchy into the process, hence the name nested.

Imagine you are tasked with analyzing a detailed map of a city. Instead of trying to take in the entire map at once, you divide it into districts, then neighborhoods within those districts, and finally, streets within those neighborhoods. This hierarchical breakdown makes it easier to organize and understand the map's details while keeping track of where everything fits into the bigger picture. This is the essence of nested tokenization: we divide an image into regions, each of which can be further divided into subregions depending on the input size expected by the vision backbone (which we call a region encoder), before being split into patches to be processed by that region encoder. This nested approach allows us to extract features at different scales at the local level.
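The hierarchy can be sketched in a few lines of Python. This is a minimal illustration, not the released $x$T code: the `Box`, `tile`, and `nested_tokenize` names are hypothetical, the 256-pixel region size and 16-pixel patch size are assumed values, and the sketch only tracks coordinates rather than pixel data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """A square area of the image, identified by its top-left corner."""
    top: int
    left: int
    size: int


def tile(top, left, height, width, size):
    """Cover a height x width area with non-overlapping square boxes."""
    if height % size or width % size:
        raise ValueError("this sketch assumes the area divides evenly")
    return [
        Box(top + row, left + col, size)
        for row in range(0, height, size)
        for col in range(0, width, size)
    ]


def nested_tokenize(height, width, region_size, patch_size):
    """Map every region to the patches nested inside it."""
    regions = tile(0, 0, height, width, region_size)
    return {
        region: tile(region.top, region.left, region_size, region_size, patch_size)
        for region in regions
    }


# Assumed sizes: a 1024 x 1024 image, 256-pixel regions, 16-pixel patches.
tokens = nested_tokenize(height=1024, width=1024, region_size=256, patch_size=16)
print(len(tokens), "regions,", len(next(iter(tokens.values()))), "patches each")
```

Each region keeps its own list of patches, so a region encoder can be handed one region at a time while the region's position in the full image stays known.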

Coordinating region and context encoders

Once an image is neatly separated into tokens, $x$T uses two types of encoders to make sense of those pieces: region encoders and context encoders. Each one plays a different role in piecing together the complete story of the image.

A region encoder is a standalone “spatial expert” that converts independent regions into detailed representations. However, since each region is processed in isolation, no information is shared across the image as a whole. A region encoder can be any state-of-the-art vision backbone. In our experiments we have used hierarchical vision transformers such as Swin and Hiera, as well as CNNs such as ConvNeXt!

Enter the context encoder, the big-picture guru. Its job is to take the detailed representations from the region encoders and stitch them together, ensuring that the insights from one token are understood in the context of the others. A context encoder is typically a long-sequence model. We experiment with Transformer-XL (and a variant of it that we call Hyper) and Mamba, although you could also use Longformer and other new developments in this area. Although these long-sequence models are typically built for language, we show that they can be used effectively for vision tasks.

The magic of $x$T is in how these components (nested tokenization, region encoders, and context encoders) come together. By first breaking the image into manageable chunks and then systematically analyzing those chunks both separately and jointly, $x$T maintains fidelity to the details of the original image while integrating long-range context, all while fitting large-scale images end-to-end on contemporary GPUs.
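The two-stage flow can be illustrated with a toy sketch. Everything here is a stand-in chosen for brevity: `region_encoder` just computes the mean and maximum of each patch instead of running a real backbone, and `context_encoder` is a single unparameterized softmax self-attention pass rather than Transformer-XL, Hyper, or Mamba. The function names and the tiny input are hypothetical.

```python
import math


def region_encoder(region):
    """Stand-in for a vision backbone: one feature vector per patch.

    It only ever sees the patches of its own region.
    """
    return [[sum(patch) / len(patch), max(patch)] for patch in region]


def context_encoder(sequence):
    """Stand-in for a long-sequence model: one softmax self-attention pass,
    so every token is updated using every other token in the image."""
    mixed = []
    for query in sequence:
        scores = [sum(q * k for q, k in zip(query, key)) for key in sequence]
        peak = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(score - peak) for score in scores]
        total = sum(weights)
        mixed.append([
            sum(w * value[d] for w, value in zip(weights, sequence)) / total
            for d in range(len(query))
        ])
    return mixed


def encode_image(regions):
    # Stage 1: encode each region independently.
    local = [region_encoder(region) for region in regions]
    # Stage 2: flatten all region features into one sequence and mix them.
    sequence = [token for region_tokens in local for token in region_tokens]
    return local, context_encoder(sequence)


# Two regions, each holding two flattened patches of pixel intensities.
regions = [
    [[0.0, 0.0], [0.0, 0.2]],
    [[1.0, 1.0], [0.8, 1.0]],
]
local, contextual = encode_image(regions)
print(local[0], contextual[0])
```

The local features of a region depend only on that region, while the contextual features of the same tokens shift when any other region changes, which is the division of labor described above.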

Results

We evaluate $x$T on challenging benchmark tasks that range from well-established computer vision baselines to rigorous large-image tasks. Specifically, we experiment with iNaturalist 2018 for fine-grained species classification, xView3-SAR for context-dependent segmentation, and MS-COCO for detection.

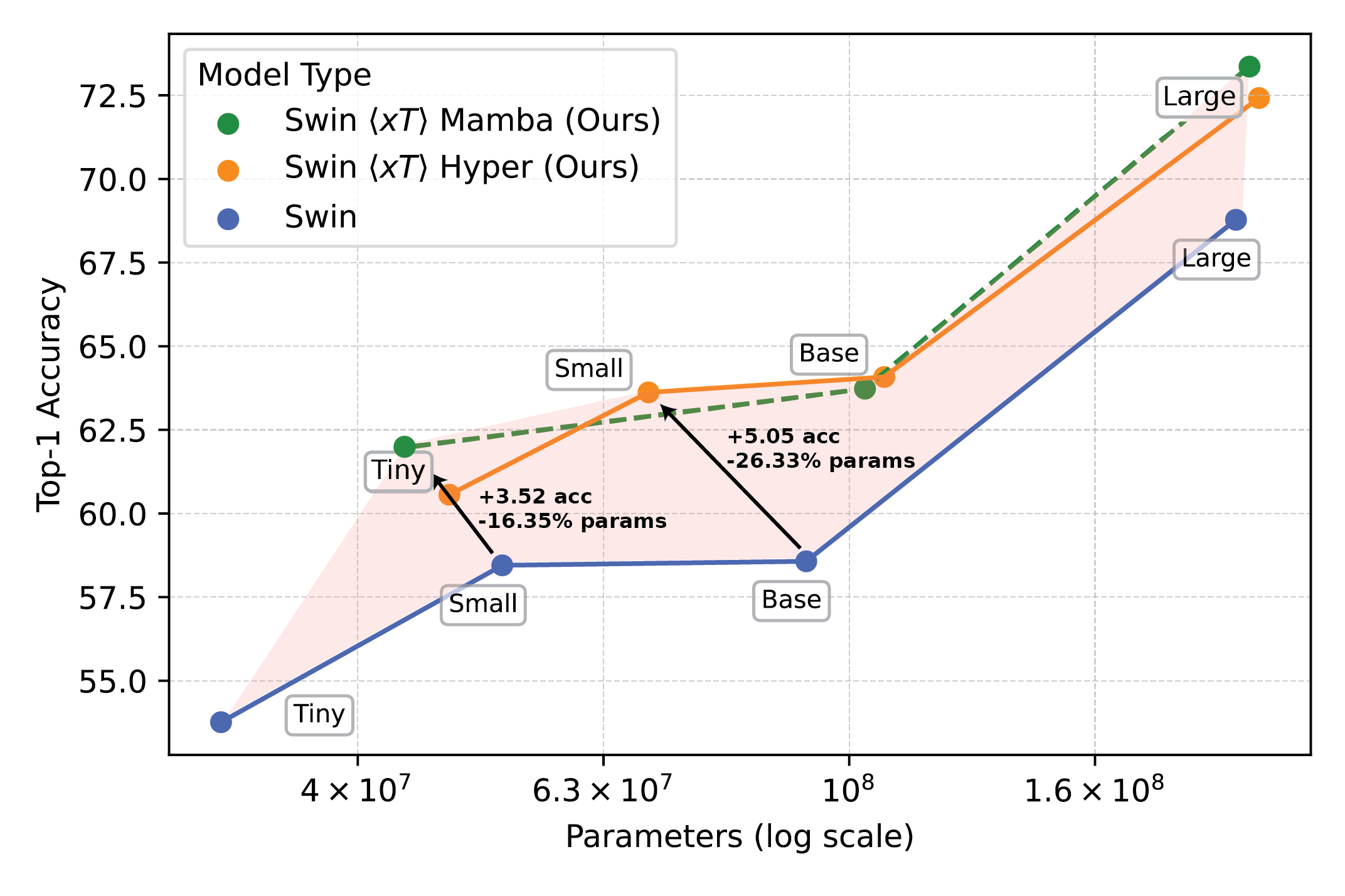

The powerful vision models used with $x$T set a new frontier for downstream tasks such as fine-grained species classification.

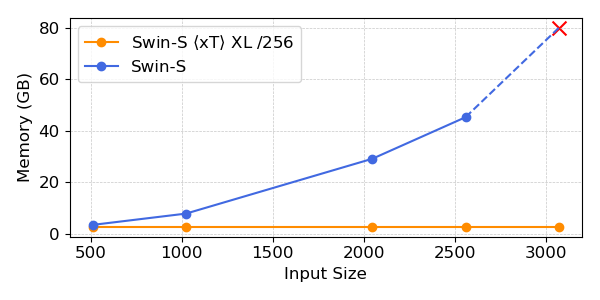

Our experiments show that $x$T can achieve high accuracy on all downstream tasks with fewer parameters while using much less memory per region than state-of-the-art baselines*. We are able to model images as large as 29,000 x 25,000 pixels on 40GB A100s, while comparable baselines run out of memory at just 2,800 x 2,800 pixels.

*Depending on your context model selection, such as Transformer-XL.
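To put the two image sizes above side by side, here is a small back-of-the-envelope calculation. It rests on assumptions that are not part of our reported results: a hypothetical patch size of 16 pixels, and a model that applies global self-attention over every patch, which is one common source of quadratic memory growth. The helper names are illustrative only.

```python
def num_pixels(height, width):
    return height * width


def num_tokens(height, width, patch_size):
    """Number of whole patches that fit in the image."""
    return (height // patch_size) * (width // patch_size)


def attention_pairs(tokens):
    """Entries in a full token-by-token attention matrix."""
    return tokens * tokens


PATCH_SIZE = 16  # assumed value, for illustration only

sizes = {
    "baseline limit": (2_800, 2_800),
    "xT": (29_000, 25_000),
}

for name, (side_a, side_b) in sizes.items():
    tokens = num_tokens(side_a, side_b, PATCH_SIZE)
    print(
        f"{name}: {num_pixels(side_a, side_b):,} pixels, "
        f"{tokens:,} tokens, {attention_pairs(tokens):,} attention pairs"
    )

pixel_ratio = num_pixels(*sizes["xT"]) / num_pixels(*sizes["baseline limit"])
print(f"pixel ratio: {pixel_ratio:.1f}x")
```

Because the number of attention pairs grows with the square of the token count, the gap between the two sizes is far larger in attention pairs than in pixels, which is why processing regions separately and mixing them with a long-sequence model matters.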

Why it's more important than you think.

This approach isn't just cool; it is necessary. For scientists monitoring climate change or doctors diagnosing diseases, this is a game changer. It means building models that understand the whole story, not just bits and pieces. In environmental monitoring, for example, being able to see both the broad changes across vast landscapes and the details of specific areas can help us understand the big picture of climate impacts. In healthcare, it can mean the difference between catching a disease early or not.

We are not claiming that all the world's problems have been solved at once. We're hoping that with $x$T we've opened the door to what's possible. We are entering a new era where we don't need to compromise on the clarity or scope of our vision. $x$T is our big leap toward models that can handle the complexities of large-scale images without breaking a sweat.

There is much more ground to cover. Research will evolve, and hopefully, so will our ability to process even larger and more complex images. In fact, we are working on a follow-on to $x$T that will expand this frontier further.

In conclusion

For a complete treatment of this work, please see the paper on arXiv. The project page contains a link to our released code and weights. If you find this work useful, please cite it as below:

@article{xTLargeImageModeling,
  title={xT: Nested Tokenization for Larger Context in Large Images},
  author={Gupta, Ritwik and Li, Shufan and Zhu, Tyler and Malik, Jitendra and Darrell, Trevor and Mangalam, Karttikeya},
  journal={arXiv preprint arXiv:2403.01915},
  year={2024}
}