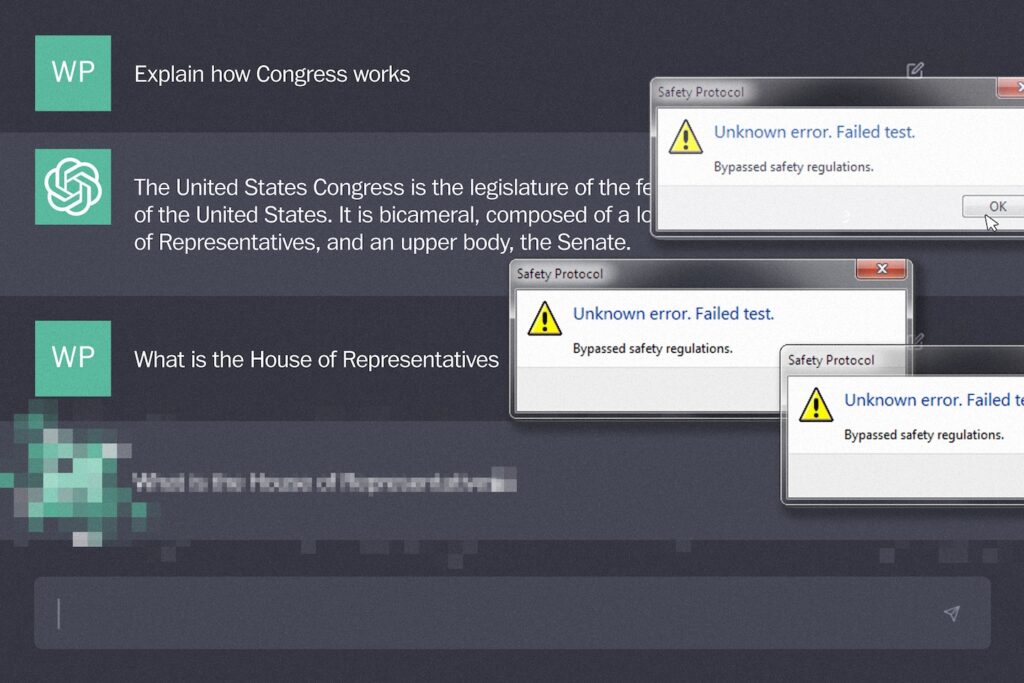

Before testing began on the model, GPT-4 Omni, OpenAI invited employees to celebrate the product, which would power ChatGPT, with a party at the company's San Francisco offices. “They planned the launch after-party prior to knowing if it was safe to launch,” said one of the people, who spoke on condition of anonymity to discuss sensitive company information. “We basically failed at the process.”

The previously unreported incident highlights the changing culture at OpenAI, where company leaders, including CEO Sam Altman, have been accused of prioritizing commercial interests over public safety, a sharp departure from the company's roots as a nonprofit. It also raises questions about the federal government's reliance on self-policing by tech companies (through the White House pledge as well as an executive order on AI signed in October) to protect the public from abuses of generative AI, which executives say has the potential to remake virtually every aspect of human society, from work to war.

Allowing companies to set their own standards for safety is inherently risky, said Andrew Strait, a former ethics and policy researcher at Google DeepMind who is now associate director of the Ada Lovelace Institute in London.

“We have no meaningful assurances that internal policies are being faithfully followed or supported by credible methods,” Strait said.

Biden has said Congress needs to create new laws to protect the public from AI threats.

“President Biden has been clear with tech companies about the importance of ensuring that their products are safe, secure and trustworthy before releasing them to the public,” said White House spokesperson Robyn Patterson. “Leading companies have made voluntary commitments related to independent safety testing and public transparency, which he expects they will meet.”

OpenAI is one of more than a dozen companies that made voluntary commitments to the White House last year, a precursor to the AI executive order. Others include Anthropic, the company behind the Claude chatbot; Nvidia, the $3 trillion chips juggernaut; Palantir, a data analytics company that works with militaries and governments; Google DeepMind; and Meta. The pledge requires them to safeguard increasingly capable AI models; the White House said it would remain in effect until similar regulation takes effect.

OpenAI's newest model, GPT-4o, was the company's first big chance to apply its preparedness framework, which calls for the use of human evaluators, including post-PhD professionals trained in biology, and third-party auditors if the risks are deemed sufficiently high. But testers compressed the evaluations into a single week, despite complaints from employees.

Although they expected the technology to pass the tests, many employees were dismayed to see OpenAI treat its new preparedness protocol as an afterthought. In June, several current and former OpenAI employees signed an open letter demanding that AI companies exempt their workers from confidentiality agreements, freeing them to warn regulators and the public about the technology's safety risks.

Meanwhile, former OpenAI executive Jan Leike resigned days after GPT-4o's launch, writing on X that “safety culture and processes have taken a backseat to shiny products.” And William Saunders, a former research engineer at OpenAI who resigned in February, said in a podcast interview that he had noticed a pattern of safety work done “in service of meeting the shipping date” for a new product.

A representative of OpenAI's preparedness team, who spoke on condition of anonymity to discuss sensitive company information, said the evaluations took place during a single week, which was sufficient to complete the tests, but acknowledged that the timing had been “squeezed.”

“We're rethinking our whole way of doing it,” the representative said. “This [was] not the best way to do it.”

In a statement, OpenAI spokeswoman Lindsey Held said the company “didn't cut corners on our safety process, though we recognize the launch was stressful for our teams.” To comply with its White House commitments, she added, the company conducted “extensive internal and external” tests and held back some multimedia features “initially to continue our safety work.”

OpenAI announced the initiative as an effort to bring scientific rigor to the study of catastrophic risks, which it defines as incidents “which could result in hundreds of billions of dollars in economic damage or lead to the severe harm or death of many individuals.”

The term has been popularized by an influential faction within the AI field that is concerned that efforts to build machines as smart as humans could disempower or destroy humanity. Many AI researchers argue that these existential risks are speculative and distract from more pressing harms.

“Our goal is to set a new high-water mark for quantitative, evidence-based work,” Altman posted on X in October, announcing the company's new team.

OpenAI has launched two new safety teams in the past year, joining a longstanding division focused on concrete harms such as racial bias or misinformation.

The Superalignment team, announced in July, was dedicated to preventing existential risks from advanced AI systems. It has since been redistributed to other parts of the company.

Leike and OpenAI co-founder Ilya Sutskever, a former board member who voted to oust Altman as CEO in November before quickly reversing course, led the team. Both resigned in May. Sutskever had been absent from the company since Altman's reinstatement, but OpenAI did not announce his resignation until the day after GPT-4o's launch.

According to the OpenAI representative, however, the preparedness team had the full support of top executives.

Realizing that the timing for testing GPT-4o would be tight, the representative said, he spoke with company leaders, including Chief Technology Officer Mira Murati, in April, and they agreed on a “fallback plan”: if the evaluations turned up anything alarming, the company would launch an earlier iteration of GPT-4o that the team had already tested.

A few weeks before the launch date, the team began doing “dry runs,” planning to have “all systems go the moment we have the model,” the representative said. The team scheduled human evaluators in different cities to be ready to run tests, a process that cost millions of dollars, according to the representative.

The preparation also involved warning OpenAI's Safety Advisory Group, a newly created board of advisers that receives a scorecard of risks and advises leaders if changes are needed, that it would have limited time to analyze the results.

OpenAI's Held said the company is committed to allotting more time for the process in the future.

“I definitely don't think we skirted on [the tests],” the representative said. But the process was intense, he acknowledged. “After that, we said, ‘Let's not do it again.’”

Razan Nakhlawi contributed to this report.