Introduction

Word embedding is a method used to map words of a vocabulary to dense vectors of real numbers, where semantically similar words are mapped to nearby points. Representing words in this vector space helps algorithms achieve better performance in natural language processing tasks such as syntactic parsing and sentiment analysis by grouping similar words. For example, we expect “cats” and “dogs” to be mapped to nearby points in the embedding space because they are both animals, mammals, pets, etc.

In this tutorial we will implement the skip-gram model created by Mikolov et al. in R using the keras package. The skip-gram model is a flavor of word2vec, a class of computationally efficient predictive models for learning word embeddings from raw text. We won't address the theoretical details of embeddings and the skip-gram model here; if you want more details, you can read the paper by Mikolov et al. Additional details are included in the TensorFlow Vector Representations of Words tutorial, as well as in the Deep Learning with R notebook about embeddings.

There are other ways to create vector representations of words. For example, GloVe embeddings are implemented in the text2vec package by Dmitriy Selivanov. There's also a tidy approach described in Julia Silge's blog post Word Vectors with Tidy Data Principles.

Obtaining data

We will use the Amazon Fine Foods Reviews dataset. This dataset consists of reviews of fine foods from Amazon. The data span a period of more than 10 years, including all ~500,000 reviews up to October 2012. Reviews include product and user information, ratings, and narrative text.

The data (~116MB) can be downloaded by running:

download.file("https://snap.stanford.edu/data/finefoods.txt.gz", "finefoods.txt.gz")

Now we will load the plain text reviews into R.
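The loading code is not shown in this section. As a minimal base-R sketch, assuming each review's text sits on its own line starting with "review/text: " (the layout of the SNAP file), the hypothetical helper extract_review_texts() below keeps just those lines and strips the prefix:

```r
# Hypothetical helper: keep only the review text lines and drop their prefix.
# Assumes the SNAP layout, where each review's text line starts with "review/text: ".
extract_review_texts <- function(lines) {
  prefix <- "review/text: "
  texts <- lines[startsWith(lines, prefix)]
  substring(texts, nchar(prefix) + 1)
}

# Possible usage on the downloaded file (reading ~500,000 reviews takes a while):
# reviews <- extract_review_texts(readLines(gzfile("finefoods.txt.gz")))
```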

Let's take a look at some of the reviews in the dataset.

head(reviews, 2)

[1] "I have bought several of the Vitality canned dog food products ...
[2] "Product arrived labeled as Jumbo Salted Peanuts...the peanuts ...

Pre-processing

We'll start with some text pre-processing using a keras text_tokenizer(). The tokenizer will be responsible for transforming each review into a sequence of integer tokens (which will later be used as input to the skip-gram model).

library(keras)
tokenizer <- text_tokenizer(num_words = 20000)
tokenizer %>% fit_text_tokenizer(reviews)

Note that the tokenizer object is modified in place by the call to fit_text_tokenizer(). Each of the most common words, up to the num_words = 20,000 limit, is assigned an integer token; rarer words get no token of their own and are left out of the integer sequences.
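To make this concrete, here is a simplified, hypothetical base-R stand-in for what a fitted tokenizer does; it is not the Keras implementation (Keras applies its own character filters and tie-breaking). Words are ranked by frequency, the most frequent word gets index 1, and words whose index is not below num_words get no usable token (here they are simply dropped):

```r
# Simplified, hypothetical stand-in for a fitted tokenizer (not the Keras code):
# lowercase, split on anything that is not a letter, digit or apostrophe,
# and rank words by frequency so the most common word gets index 1.
split_words <- function(texts) {
  lapply(strsplit(tolower(texts), "[^a-z0-9']+"), function(ws) ws[nzchar(ws)])
}

fit_simple_tokenizer <- function(texts, num_words) {
  counts <- sort(table(unlist(split_words(texts))), decreasing = TRUE)
  word_index <- seq_along(counts)
  names(word_index) <- names(counts)
  list(word_index = word_index, num_words = num_words)
}

# Map each text to integer ids; words without an id below num_words are dropped
texts_to_ids <- function(tok, texts) {
  lapply(split_words(texts), function(ws) {
    ids <- unname(tok$word_index[ws])
    ids[!is.na(ids) & ids < tok$num_words]
  })
}

tok <- fit_simple_tokenizer(c("the cat and the dog", "the dog barks"), num_words = 3)
texts_to_ids(tok, "The dog saw the cat")  # list(c(1L, 2L, 1L)): "saw" and "cat" are dropped
```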

Skip-gram model

In the skip-gram model we will use each word as input to a log-linear classifier with a projection layer, then predict the words within a certain range before and after that word. It would be computationally expensive to output a probability distribution over the whole vocabulary for each target word we input into the model. Instead, we are going to use negative sampling: we sample some words that don't appear in the context and train a binary classifier to predict whether the context word we pass in actually comes from the context or not.

In more practical terms, for the skip-gram model we will input a 1d integer vector of target word tokens and a 1d integer vector of sampled context word tokens. The model should predict 1 if the sampled word actually appeared in the context and 0 if it did not.
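The pairs the model consumes can be illustrated with a small, hypothetical base-R function, make_skipgrams() (a sketch, not the Keras skipgrams() implementation, and without its shuffling). Every word within window_size positions of a target forms a positive pair labeled 1; then, for each positive pair, about negative_samples random vocabulary ids are paired with a target and labeled 0. As usual with negative sampling, a random "negative" word can occasionally be a true context word; that noise is accepted.

```r
# Hypothetical illustration of skip-gram pairs with negative sampling
# (a sketch, not the Keras skipgrams() implementation). Assumes window_size >= 1.
make_skipgrams <- function(seq_ids, vocabulary_size, window_size, negative_samples = 1) {
  seq_ids <- seq_ids[seq_ids > 0]                 # id 0 means "no word"
  n <- length(seq_ids)
  offsets <- c(-(window_size:1), 1:window_size)   # neighbours left and right
  i <- rep(seq_len(n), each = length(offsets))    # position of each target
  j <- i + rep(offsets, times = n)                # position of each context
  keep <- j >= 1 & j <= n                         # stay inside the sequence
  targets <- seq_ids[i[keep]]
  contexts <- seq_ids[j[keep]]

  # Negative pairs: a target taken from the positive pairs plus a random id
  # in 1..(vocabulary_size - 1); these pairs are labeled 0.
  n_pos <- length(targets)
  n_neg <- as.integer(floor(n_pos * negative_samples))
  picks <- if (n_neg > 0) sample.int(n_pos, n_neg, replace = TRUE) else integer(0)
  neg_targets <- targets[picks]
  neg_contexts <- if (n_neg > 0) sample.int(vocabulary_size - 1, n_neg, replace = TRUE) else integer(0)

  data.frame(
    target  = c(targets, neg_targets),
    context = c(contexts, neg_contexts),
    label   = c(rep(1L, n_pos), rep(0L, n_neg))
  )
}

set.seed(1)
make_skipgrams(c(5L, 7L, 9L), vocabulary_size = 10, window_size = 1)
```

With a window of 1, the sequence 5, 7, 9 yields the four positive pairs (5, 7), (7, 5), (7, 9) and (9, 7), followed by four randomly drawn negative pairs.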

Now we will define a generator function to yield batches for model training.

library(keras)
library(reticulate)
library(purrr)

skipgrams_generator <- function(text, tokenizer, window_size, negative_samples) {
  # Iterate over the reviews (in random order) as integer sequences
  gen <- texts_to_sequences_generator(tokenizer, sample(text))
  function() {
    # Turn the next review into (target, context) pairs with 1/0 labels
    skip <- generator_next(gen) %>%
      skipgrams(
        vocabulary_size = tokenizer$num_words,
        window_size = window_size,
        negative_samples = negative_samples
      )
    # Split the pairs into a column of targets and a column of contexts
    x <- transpose(skip$couples) %>% map(. %>% unlist %>% as.matrix(ncol = 1))
    y <- skip$labels %>% as.matrix(ncol = 1)
    list(x, y)
  }
}

A generator function is a function that returns a different value each time it is called (generator functions are often used to provide streaming or dynamic data for training models). Our generator function will receive a vector of texts, a tokenizer, and the arguments for the skip-gram (the size of the window around each target word we examine and how many negative samples we want to draw for each target word).
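Because R functions are closures, a generator can be as simple as a function that updates a counter in its enclosing environment with <<-. The toy below uses a hypothetical helper, make_batch_generator(), unrelated to Keras, that cycles through a list of batches:

```r
# Toy generator: a closure that remembers its position between calls
make_batch_generator <- function(batches) {
  i <- 0
  function() {
    i <<- i %% length(batches) + 1   # advance, wrapping back to the start
    batches[[i]]
  }
}

next_batch <- make_batch_generator(list("batch 1", "batch 2", "batch 3"))
next_batch()  # "batch 1"
next_batch()  # "batch 2"
```

skipgrams_generator() follows the same pattern: the outer function sets up state (the shuffled sequence generator), and the returned inner function produces a new batch on every call.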

Now let's start defining the keras model. We will use the Keras functional API.

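embedding_size <- 128  # Dimension of the embedding vector.
skip_window <- 5       # How many words to consider left and right.
num_sampled <- 1       # Number of negative examples to sample for each word.

We will first write placeholders for the inputs using the layer_input() function.

input_target <- layer_input(shape = 1)
input_context <- layer_input(shape = 1)

Now let's define the embedding matrix. The embedding is a matrix with dimensions (vocabulary, embedding_size) that acts as a lookup table for the word vectors. A single embedding layer is shared by both inputs, and layer_flatten() turns each looked-up (1, embedding_size) array into a plain vector.

embedding <- layer_embedding(
  input_dim = tokenizer$num_words + 1,
  output_dim = embedding_size,
  input_length = 1,
  name = "embedding"
)

target_vector <- input_target %>%
  embedding() %>%
  layer_flatten()

context_vector <- input_context %>%
  embedding() %>%
  layer_flatten()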
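Because the embedding is a lookup table, applying it to a token id amounts to selecting a row. The toy base-R illustration below uses random values and a hypothetical lookup() helper; it also computes the parameter count for the real layer, with one row per token id from 0 to 20,000, which matches the 2,560,128 parameters the model summary reports for the embedding layer.

```r
num_words <- 20000
embedding_size <- 128
# One row per token id 0..num_words, one column per embedding dimension
embedding_params <- (num_words + 1) * embedding_size
embedding_params  # 2560128

# Toy lookup table for ids 0..5 with 3 dimensions (random values)
set.seed(42)
toy_embedding <- matrix(rnorm(6 * 3), nrow = 6, ncol = 3)
lookup <- function(ids, emb) emb[ids + 1, , drop = FALSE]  # id k -> row k + 1
lookup(c(2L, 0L), toy_embedding)  # rows 3 and 1 of toy_embedding
```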

The next step is to define how target_vector will be related to context_vector in order to make our network output 1 when the context word actually appeared in the context and 0 otherwise. We want target_vector to be similar to context_vector if they appeared in the same context. A common measure of similarity is the cosine similarity. Given two vectors $A$ and $B$, the cosine similarity is defined by the Euclidean dot product of $A$ and $B$ normalized by their magnitudes:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|}$$

As we don't need the similarity to be normalized inside the network, we will only calculate the dot product and then output a dense layer with sigmoid activation.
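The difference between the two scores can be written out in base R for a single pair. In this sketch the helper names are hypothetical, and w and b0 stand for the dense layer's single weight and bias (the 2 parameters listed for dense_1 in the model summary):

```r
# Cosine similarity: dot product divided by the product of the norms
cosine_similarity <- function(a, b) sum(a * b) / (sqrt(sum(a^2)) * sqrt(sum(b^2)))

sigmoid <- function(z) 1 / (1 + exp(-z))

# Network-style score: raw dot product -> one-unit dense layer -> sigmoid
pair_score <- function(target_vec, context_vec, w = 1, b0 = 0) {
  sigmoid(w * sum(target_vec * context_vec) + b0)
}

cosine_similarity(c(1, 2), c(2, 4))  # 1: same direction
pair_score(c(1, 2), c(2, 4))         # sigmoid(10), close to 1
pair_score(c(1, 0), c(0, 1))         # sigmoid(0) = 0.5
```

The unnormalized dot product lets vector length influence the score, which the learned weight and bias can rescale; cosine similarity ignores length entirely.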

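dot_product <- layer_dot(list(target_vector, context_vector), axes = 1)
output <- layer_dense(dot_product, units = 1, activation = "sigmoid")

Now we will create the model and compile it.

model <- keras_model(list(input_target, input_context), output)
model %>% compile(loss = "binary_crossentropy", optimizer = "adam")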

We can see the full definition of the model by calling summary():

summary(model)

_________________________________________________________________________________________
Layer (type)                  Output Shape          Param #     Connected to
=========================================================================================
input_1 (InputLayer)          (None, 1)             0
_________________________________________________________________________________________
input_2 (InputLayer)          (None, 1)             0
_________________________________________________________________________________________
embedding (Embedding)         (None, 1, 128)        2560128     input_1[0][0]
                                                                input_2[0][0]
_________________________________________________________________________________________
flatten_1 (Flatten)           (None, 128)           0           embedding[0][0]
_________________________________________________________________________________________
flatten_2 (Flatten)           (None, 128)           0           embedding[1][0]
_________________________________________________________________________________________
dot_1 (Dot)                   (None, 1)             0           flatten_1[0][0]
                                                                flatten_2[0][0]
_________________________________________________________________________________________
dense_1 (Dense)               (None, 1)             2           dot_1[0][0]
=========================================================================================
Total params: 2,560,130
Trainable params: 2,560,130
Non-trainable params: 0
_________________________________________________________________________________________

Model training

We will fit the model using the fit_generator() function. We need to specify the number of training steps as well as the number of epochs we want to train. We will train for 100,000 steps per epoch, for 5 epochs. This is quite slow (~1000 seconds per epoch on a modern GPU). Note that you may also get reasonable results with just one epoch of training.

model %>%
  fit_generator(
    skipgrams_generator(reviews, tokenizer, skip_window, num_sampled),
    steps_per_epoch = 100000, epochs = 5
  )

Epoch 1/1
100000/100000 [==============================] - 1092s - loss: 0.3749
Epoch 2/5
100000/100000 [==============================] - 1094s - loss: 0.3548
Epoch 3/5
100000/100000 [==============================] - 1053s - loss: 0.3630
Epoch 4/5
100000/100000 [==============================] - 1020s - loss: 0.3737
Epoch 5/5
100000/100000 [==============================] - 1017s - loss: 0.3823

Now we can extract the embedding matrix from the model using the get_weights() function. We will also add row.names to our embedding matrix so we can easily find where each word is.

embedding_matrix <- get_weights(model)[[1]]
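One possible way to attach those row names, sketched in base R: the hypothetical helper label_embedding_rows() below assumes that tokenizer$word_index arrives in R as a named list mapping each word to its integer index (reticulate converts the Python dictionary that way) and that row 1 of the matrix corresponds to token 0, which it labels "UNK" (a label chosen here for illustration).

```r
# Hypothetical helper: name each embedding row after its word.
label_embedding_rows <- function(embedding_matrix, word_index) {
  ids <- unlist(word_index)                  # named integer vector: word -> id
  ids <- ids[ids < nrow(embedding_matrix)]   # keep ids that have a row
  labels <- rep(NA_character_, nrow(embedding_matrix))
  labels[1] <- "UNK"                         # row 1 holds id 0 (no word)
  labels[ids + 1] <- names(ids)              # id k lives in row k + 1
  rownames(embedding_matrix) <- labels
  embedding_matrix
}

# Possible usage with the trained model:
# embedding_matrix <- label_embedding_rows(embedding_matrix, tokenizer$word_index)
```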

Understanding embeddings

Now we can find words that are close to each other in the embedding. We will use cosine similarity, the normalized form of the dot product that the network used to score word pairs during training.
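The find_similar_words() helper called below is not defined in this section. One possible base-R version, an assumption rather than the tutorial's own code, compares one word's row with every row of a matrix whose row names are words and returns the n highest cosine similarities:

```r
# Hypothetical helper: top-n rows by cosine similarity to the row named `word`.
# Assumes the rows of embedding_matrix are named by word.
find_similar_words <- function(word, embedding_matrix, n = 5) {
  target <- embedding_matrix[word, ]
  # Dot product of every row with the target, divided by both norms
  dots <- as.vector(embedding_matrix %*% target)
  norms <- sqrt(rowSums(embedding_matrix^2)) * sqrt(sum(target^2))
  similarities <- dots / norms
  names(similarities) <- rownames(embedding_matrix)
  head(sort(similarities, decreasing = TRUE), n)
}

toy <- rbind(cat = c(1, 0), dog = c(0.9, 0.1), car = c(0, 1))
find_similar_words("cat", toy, n = 2)  # cat first (similarity 1), then dog
```

Because the query word is itself a row of the matrix, it always comes first with a similarity of 1, as in the results below.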

find_similar_words("2", embedding_matrix) 2 4 3 two 6

1.0000000 0.9830254 0.9777042 0.9765668 0.9722549 find_similar_words("little", embedding_matrix) little bit few small treat

1.0000000 0.9501037 0.9478287 0.9309829 0.9286966 find_similar_words("delicious", embedding_matrix)delicious tasty wonderful amazing yummy

1.0000000 0.9632145 0.9619508 0.9617954 0.9529505 find_similar_words("cats", embedding_matrix) cats dogs kids cat dog

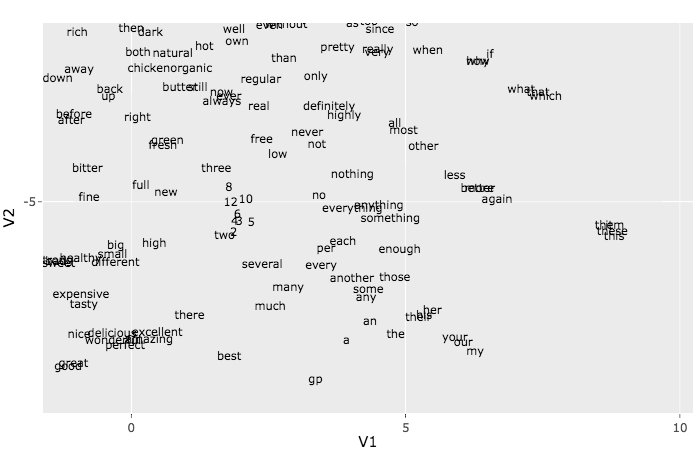

1.0000000 0.9844937 0.9743756 0.9676026 0.9624494 gave t-SNE Algorithms can be used to embed Due to time constraints we will only use it with the first 500 words. To understand more about t-SNE See how-to article How to use t-SNE effectively.

This plot may look like a mess, but if you zoom into the smaller groups you will see some nice patterns. For example, try to find a group of web-related words such as http, href, etc. Another group that may be easier to pick out is the group of pronouns: she, he, her, etc.