This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

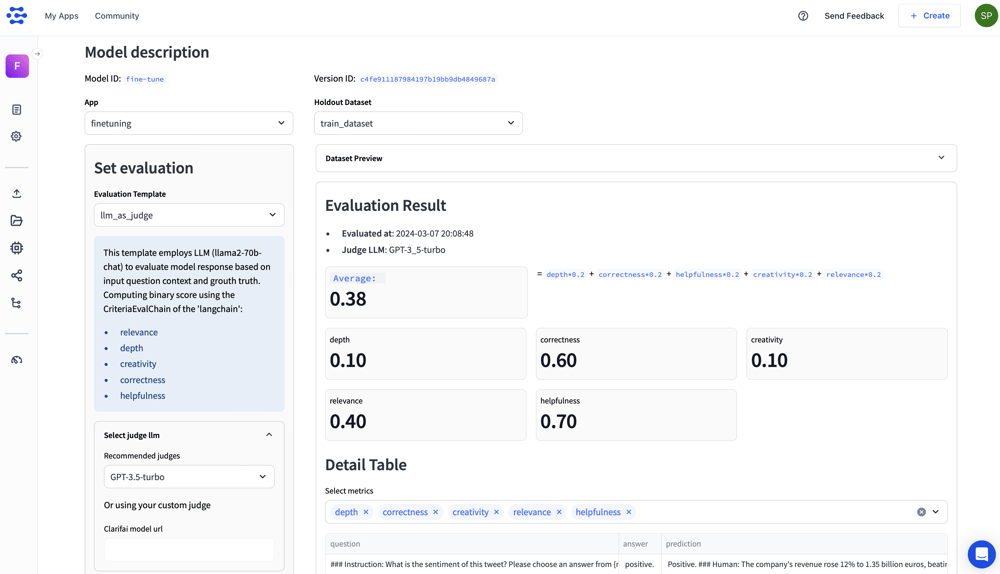

Introduced a module for evaluating large language models (LLMs). [Developer Preview]

Fine-tuning Large Language Models (LLMs) is a powerful strategy that allows you to take a pre-trained language model and further train it on a specific dataset or task to adapt it to that specific domain or application.

After specializing a model for a particular task, it is important to evaluate its performance and assess its effectiveness in real-world scenarios. By running an LLM evaluation, you can gauge how well the model has adapted to the target task or domain.

After fine-tuning your LLMs using the Clarifai platform, you can use the LLM evaluation module to evaluate their performance against standard benchmarks as well as custom criteria, gaining deep insight into their strengths and weaknesses.

Follow the documentation, which is a step-by-step guide on how to fine-tune and evaluate your LLMs.

Here are some key features of the module:

- Explore 100+ tasks covering diverse use cases like RAG, classification, casual interaction, content summarization, and more. Each use case provides the flexibility to choose from relevant evaluation classes such as helpfulness, relevance, accuracy, depth and creativity. You can further enhance customization by assigning user-defined weights to each class.

- Define weights on each evaluation class to create custom weighted scoring functions. This lets you measure business-specific metrics and save them for consistent use. For example, for an evaluation related to RAG, you might want to give zero weight to creativity and more weight to accuracy, helpfulness, and relevance.

- Save the best-performing prompt-model combination as a workflow for future reference with one click.
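To make the weighted scoring idea concrete, here is a minimal sketch in plain Python. It is not the module's actual implementation: the `weighted_score` function, the score values, and the weights are all hypothetical, and the sketch assumes that per-class scores are combined as a weighted average.

```python
from typing import Mapping


def weighted_score(class_scores: Mapping[str, float],
                   weights: Mapping[str, float]) -> float:
    """Combine per-class scores into one number using a weighted average.

    Hypothetical helper for illustration; classes without a weight count as 0.
    """
    total_weight = sum(weights.get(name, 0.0) for name in class_scores)
    if total_weight <= 0:
        raise ValueError("at least one evaluation class needs a positive weight")
    weighted_sum = sum(score * weights.get(name, 0.0)
                       for name, score in class_scores.items())
    return weighted_sum / total_weight


# Assumed RAG-style weighting: creativity is ignored, accuracy counts double.
rag_weights = {"accuracy": 2.0, "helpfulness": 1.0, "relevance": 1.0, "creativity": 0.0}
# Made-up scores for a single prompt-model combination.
scores = {"accuracy": 0.9, "helpfulness": 0.8, "relevance": 0.7, "creativity": 0.2}

rag_score = weighted_score(scores, rag_weights)
print(round(rag_score, 3))
```

With a zero weight, the creativity score has no effect on the result, which matches the RAG example above.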

New models released

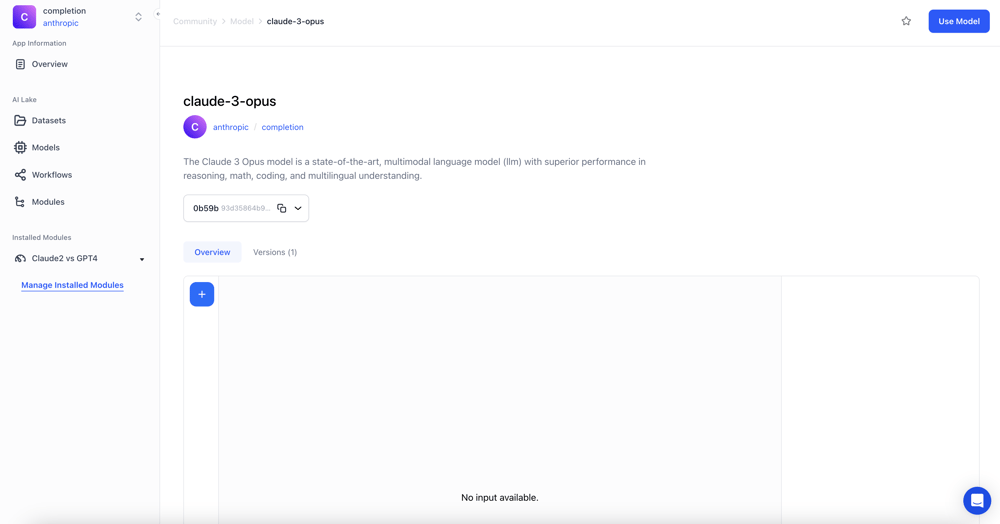

- Wrapped Claude 3 Opus, a state-of-the-art, multimodal large language model (LLM) with high performance in reasoning, mathematics, coding, and multilingual understanding.

- Wrapped Claude 3 Sonnet, a multimodal LLM that balances skills and speed, excelling in reasoning, multilingual tasks, and visual interpretation.

- Clarifai-hosted Gemma-2b-it, part of Google DeepMind's lightweight Gemma family of LLMs. Leveraging a training dataset of 6 trillion tokens, it offers exceptional AI performance on diverse tasks with a focus on safety and responsible output.

- Clarifai-hosted Gemma-7b-it, an instruction fine-tuned LLM. It is a lightweight, open model from Google DeepMind that offers state-of-the-art performance for natural language processing tasks, trained on diverse datasets with strict safety and bias mitigation measures.

- Wrapped Google Gemini Pro Vision, which was built from the ground up to be multimodal (text, images, videos) and to scale across a wide range of tasks.

- Wrapped Qwen1.5-72B-chat, which leads in language understanding, generation, and alignment, sets new standards in conversational AI and multilingual capabilities, and improves on models such as GPT-4, GPT-3.5, Mixtral-8x7B, and Llama2-70B on many benchmarks.

- Wrapped DeepSeek-Coder-33B-Instruct, a SOTA 33 billion parameter code generation model, fine-tuned on 2 billion tokens of instruction data, offering high performance in code completion and infilling tasks across more than 80 programming languages.

- Clarifai-hosted DeciLM-7B-Instruct, a state-of-the-art, efficient, and highly accurate 7 billion parameter LLM that sets new standards in AI text generation.

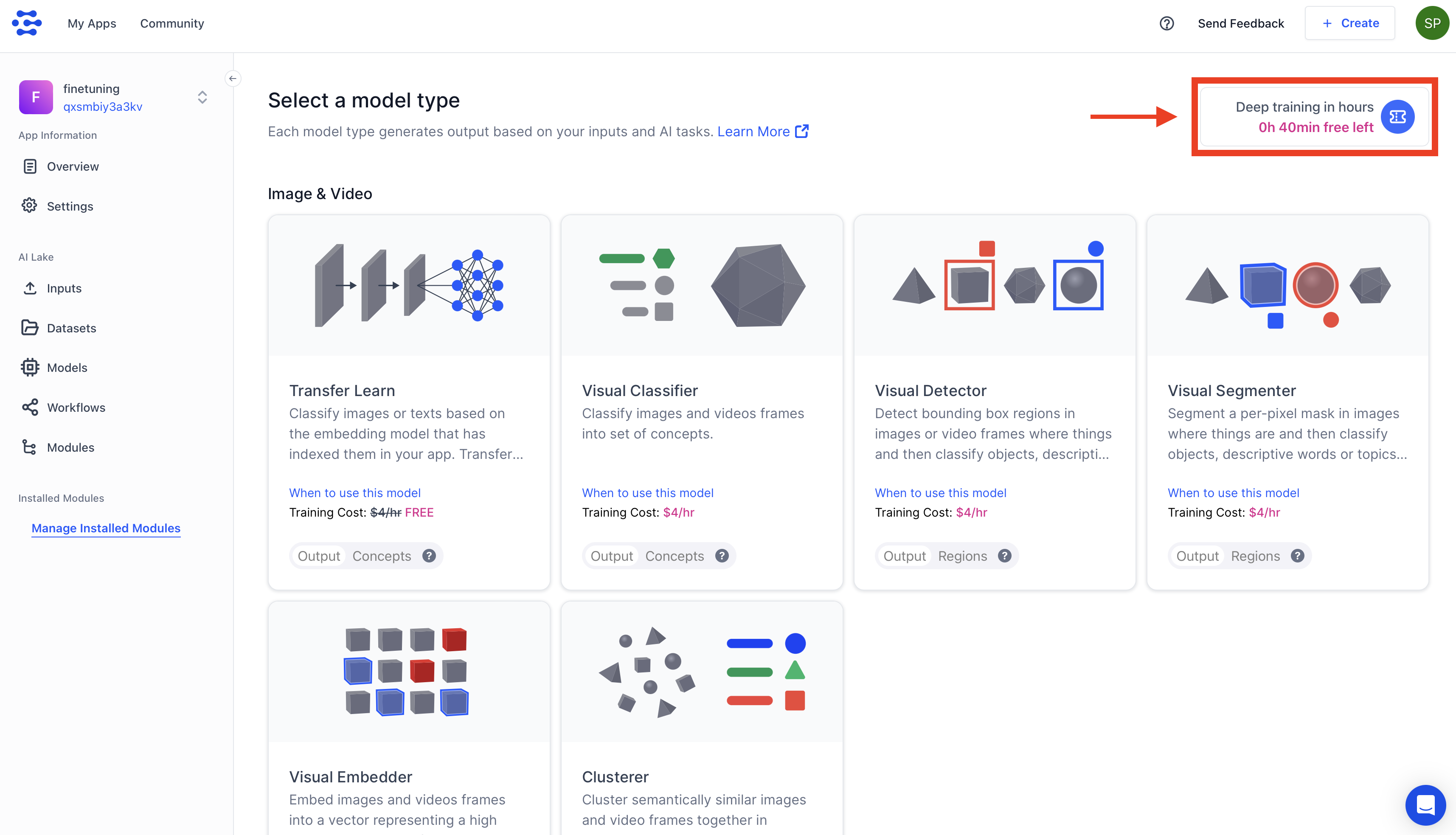

Added a notification for remaining time for free deep training.

- Added a notification in the upper right corner of the Select a model type page that shows how many hours are left to deep train your models for free.

Made enhancements to the Python SDK.

- Updated and cleaned up the requirements.txt file for the SDK.

- Fixed an issue where a failed training job caused a bug when loading a model in the Clarifai-Python client library, and where concepts were duplicated when their IDs did not match.

Made enhancements to the RAG (Retrieval Augmented Generation) feature.

- Improved the RAG SDK's `upload()` function to accept the `dataset_id` parameter.

- Enabled custom workflow names to be defined in the RAG SDK's `setup()` function.

- Fixed scope errors related to the `user` and `now_ts` variables in the RAG SDK by correcting the placement of their definitions, which were previously inside an `if` statement.

- Added support for chunk sequence numbers in metadata when uploading chunked documents via the RAG SDK.
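As a rough illustration of why chunk sequence numbers in metadata are useful, the sketch below splits a document into fixed-size chunks and tags each one with its position. It does not use the RAG SDK: the helper names and the `source` and `chunk_sequence` metadata keys are assumptions made for this example, not the SDK's actual field names.

```python
from typing import Any, Dict, List


def chunk_with_sequence(text: str, chunk_size: int, source: str) -> List[Dict[str, Any]]:
    """Split text into fixed-size chunks, recording each chunk's order in its metadata."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks = []
    for seq, start in enumerate(range(0, len(text), chunk_size)):
        chunks.append({
            "text": text[start:start + chunk_size],
            # Hypothetical metadata keys, chosen for illustration only.
            "metadata": {"source": source, "chunk_sequence": seq},
        })
    return chunks


def reassemble(chunks: List[Dict[str, Any]]) -> str:
    """Restore the original text by ordering chunks on their sequence number."""
    ordered = sorted(chunks, key=lambda c: c["metadata"]["chunk_sequence"])
    return "".join(c["text"] for c in ordered)


doc_chunks = chunk_with_sequence("abcdefghij", chunk_size=4, source="doc.txt")
print([c["metadata"]["chunk_sequence"] for c in doc_chunks])
```

Because every chunk carries its own position, retrieved chunks can be put back in document order even if they come back out of order.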

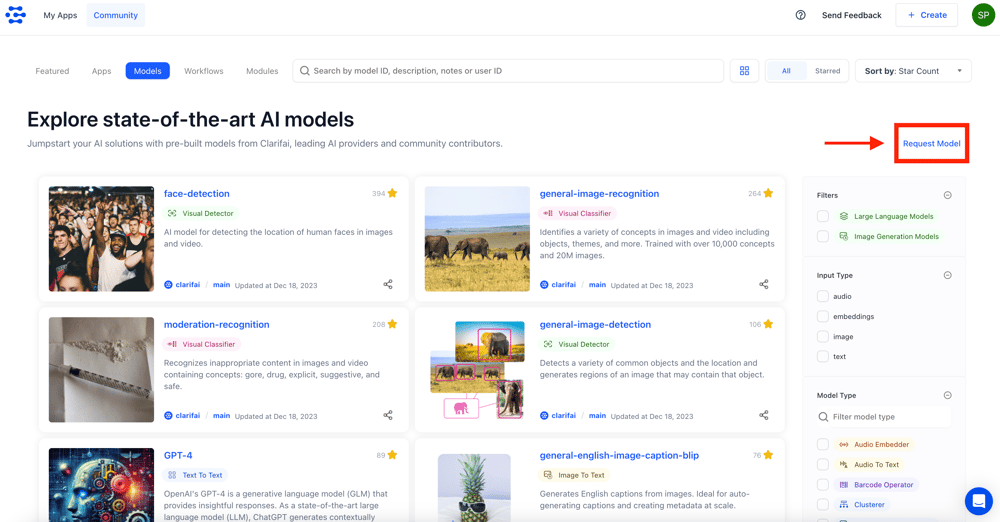

Feedback form added.

- Added feedback form links to header and listing pages for models, workflows, and modules. This enables registered users to provide general feedback or request specific models.

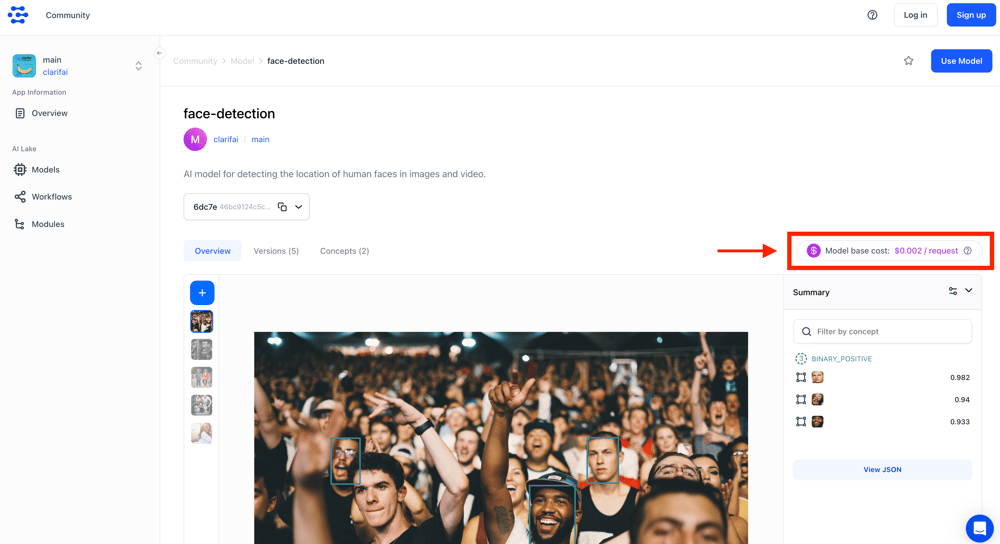

Added a display of cost per request.

- Model and workflow pages now show the cost per request for both logged-in and non-logged-in users.

Implemented progressive image loading.

- Progressive image loading initially displays low-resolution versions of images, gradually replacing them with higher-resolution versions as they become available. This addresses page load time issues while preserving image sharpness.

Spaces in IDs replaced with dashes.

- When updating user, app, or other resource IDs, spaces will be replaced with dashes.
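The replacement rule can be sketched in a few lines of Python. The `normalize_resource_id` name is hypothetical, and the sketch assumes a one-for-one replacement of each space with a dash; how the platform treats consecutive spaces or other whitespace is not stated here.

```python
def normalize_resource_id(raw_id: str) -> str:
    """Replace every space in an ID with a dash (assumed one-for-one replacement)."""
    return raw_id.replace(" ", "-")


example_id = normalize_resource_id("my first app")
print(example_id)
```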

Updated links

- Updated the text and link to the Slack community in the navbar's info popover to 'Join our Discord channel.' Likewise, updated the similar link at the bottom of the landing page to redirect to Discord.

- Removed the “Where is the legacy portal?” text.

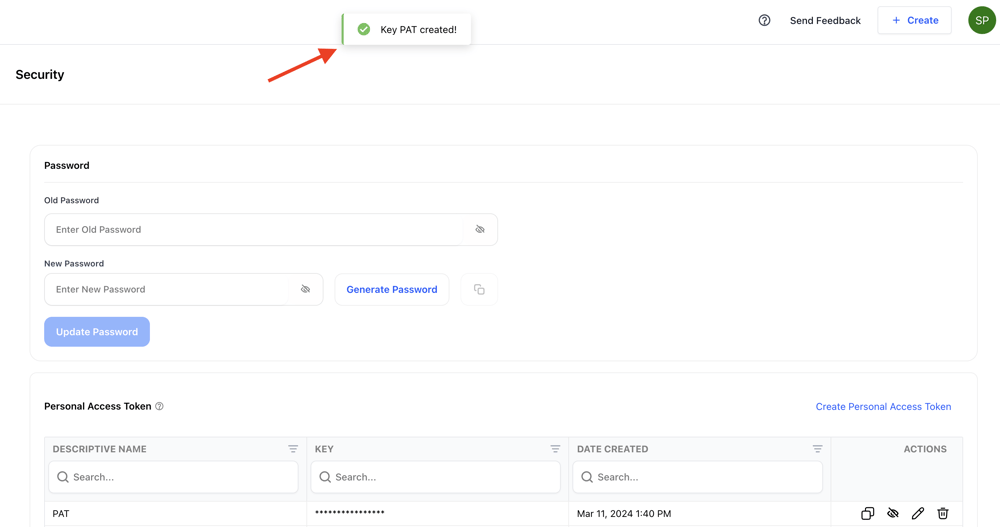

Display name in PAT toast notification.

- We've updated the account security page to display the PAT name instead of the letters PAT in the toast notification.

Improved mobile onboarding flow

- Made minor updates to mobile onboarding.

Improved sidebar appearance

- Improved the appearance of the sidebar when collapsed in mobile view.

Added the option to edit the scopes of a collaborator.

- You can now edit and customize the scopes associated with a collaborator's role on the app settings page.

Enabled deletion of associated model assets when removing a model annotation.

- Now, when deleting a model annotation, the corresponding model assets are also marked as deleted.

Better model selection

- Improved the model selection drop-down list in the workflow builder.