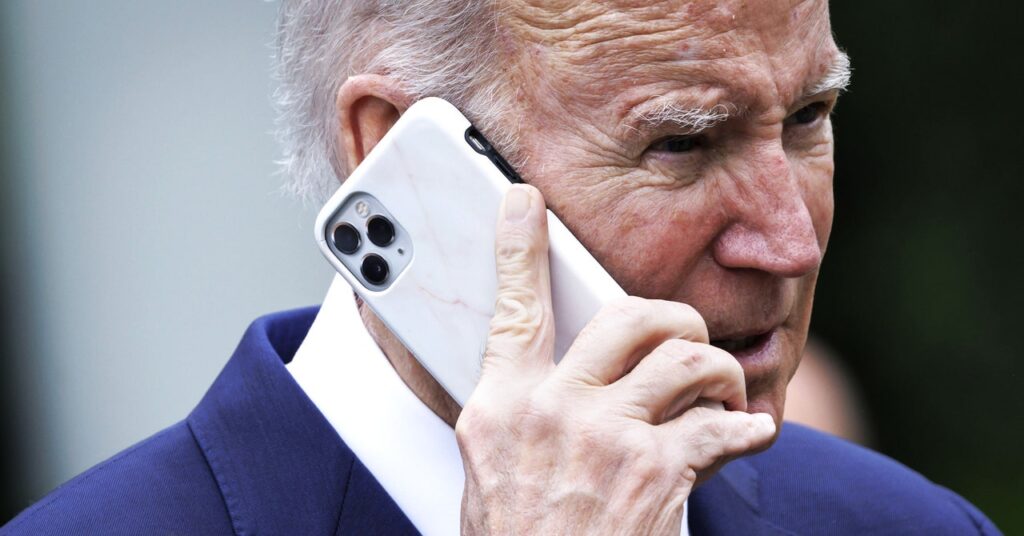

Last week, some voters in New Hampshire received an AI-generated robocall impersonating President Joe Biden asking them not to vote in the state’s primary election. It’s unclear who was responsible for the call, but two separate teams of audio experts tell WIRED that it was likely created using technology from voice-cloning startup ElevenLabs.

ElevenLabs markets its AI tools for uses such as audiobooks and video games. It recently achieved “unicorn” status by raising $80 million at a $1.1 billion valuation in a new funding round backed by venture firm Andreessen Horowitz. Anyone can sign up for the company’s paid service and clone a voice from an audio sample. The company’s privacy policy says it’s best to get someone’s permission before cloning their voice, but that cloning without permission can be fine for a variety of non-commercial purposes, including “political speech contributing to public debates.” ElevenLabs did not respond to multiple requests for comment.

Pindrop, a security company that makes tools to identify synthetic audio, claimed in a blog post on Thursday that its analysis of audio from the call pointed to ElevenLabs’ technology or to “systems using similar components.” The Pindrop research team checked patterns in the audio clip against more than 120 different voice synthesis engines looking for a match, but they weren’t expecting to find one, because identifying the source of AI-generated audio can be difficult. Pindrop CEO Vijay Balasubramanian says the results were surprisingly clear. “It came back well north of 99 percent that it was ElevenLabs,” he says.

The Pindrop team worked on a 39-second clip of one of the AI-generated robocalls. It also sought to confirm its findings by analyzing audio samples created using ElevenLabs’ technology, as well as samples made with another voice synthesis tool, to check its method.

ElevenLabs offers its own AI speech detector on its website that can tell whether an audio clip was created using the company’s technology. When Pindrop ran its sample of the suspect robocall through the system, it came back as 84 percent likely to have been generated with ElevenLabs tools. WIRED independently got the same result when checking Pindrop’s audio sample with ElevenLabs’ detector.

Hany Farid, a digital forensics expert at the UC Berkeley School of Information, was initially skeptical of claims that the Biden robocall came from ElevenLabs. “When you listen to audio of a voice cloned with ElevenLabs, it’s really good,” he says. “The version of the Biden call that I heard was not particularly good, but the cadence was really funky. It just didn’t sound like the quality I would have expected from ElevenLabs.”

But when Farid had his team at Berkeley conduct their own independent analysis of the audio sample obtained by Pindrop, he came to the same conclusion. “Our model says with high confidence that it’s AI-generated and likely ElevenLabs,” he says.

This is not the first time researchers have suspected that ElevenLabs tools were used for political propaganda. Last September, NewsGuard, a company that tracks online misinformation, claimed that TikTok accounts sharing conspiracy theories using AI-generated voices, including a clone of Barack Obama’s voice, used technology from ElevenLabs. “More than 99 percent of users on our platform are creating interesting, innovative, useful content,” ElevenLabs said in an emailed statement to *The New York Times* at the time, “but we recognize that instances of misuse exist, and we are continually developing and rolling out safeguards to prevent them.”