Introduction

In this tutorial we will build a deep learning model to classify words. We will use tfdatasets to handle data IO and preprocessing, and Keras to build and train the model.

We will use the Speech Commands dataset, which consists of 65,000 one-second audio files of people saying 30 different words. Each file contains a single spoken English word. The dataset was released by Google under a Creative Commons (CC) license.

Our model is a Keras port of the TensorFlow tutorial on Simple Audio Recognition, which in turn was inspired by Convolutional Neural Networks for Small-footprint Keyword Spotting. There are other approaches to the speech recognition task, like recurrent neural networks, dilated (atrous) convolutions, or Learning from Between-class Examples for Deep Sound Recognition.

The model we will implement here is not the state of the art for audio recognition systems, which are far more complex, but it is relatively simple and fast to train. In addition, we show how to use tfdatasets efficiently to preprocess and serve the data.

Audio representation

Many deep learning models are end-to-end, i.e., we let the model learn useful representations directly from the raw data. However, audio data grows very fast: 16,000 samples per second, with a very rich structure at many time scales. To avoid dealing with raw waveform data, researchers usually apply some kind of feature engineering.

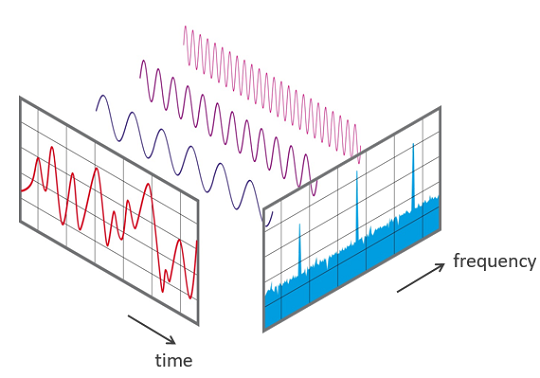

Every sound wave can be represented by its spectrum, which can be computed digitally using the Fast Fourier Transform (FFT).
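Here is a small illustration in base R. It is not part of the tutorial's pipeline, and the 440 Hz tone is an arbitrary, hypothetical test signal. The FFT of one second of a pure tone sampled at 16,000 Hz peaks at the tone's frequency.

```r
# Base R sketch: recover the frequency of a pure tone with the FFT.
sample_rate <- 16000                       # samples per second, as in the dataset
t <- (0:(sample_rate - 1)) / sample_rate   # one second of time stamps
x <- sin(2 * pi * 440 * t)                 # hypothetical 440 Hz test tone

spectrum <- Mod(fft(x))                    # magnitude of the complex FFT output
half <- spectrum[1:(length(x) / 2)]        # keep the non-negative frequencies only
# With N = sample_rate samples, bin k (0-based) corresponds to k * sample_rate / N Hz
peak_hz <- (which.max(half) - 1) * sample_rate / length(x)
peak_hz
```

The tone completes a whole number of cycles in the one-second window, so its energy lands in a single bin. Real speech spreads its energy over many bins and changes over time, which is why the next step splits the signal into chunks.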

A common way to represent audio data is to break it into small chunks, which usually overlap. For each chunk we use the FFT to calculate the magnitude of the frequency spectrum. The spectra are then combined, side by side, to form what we call a spectrogram.
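The chunk-then-FFT procedure can be sketched in base R as below. This is for intuition only; the pipeline later uses a TensorFlow op.

The sketch makes a few assumptions:
- 480-sample chunks with a 160-sample stride, which correspond to the 30 ms / 10 ms settings used later.
- Each chunk is zero-padded to the next power of 2.
- A Hann window is applied to each chunk. This is a common choice, assumed here.

The function name `spectrogram_sketch` is hypothetical.

```r
# Base R sketch of a power spectrogram: overlapping chunks, Hann window, FFT per chunk.
spectrogram_sketch <- function(x, window_size = 480L, stride = 160L) {
  fft_size <- 2^ceiling(log2(window_size))   # first power of 2 >= window size (512)
  n_bins <- fft_size / 2 + 1                 # non-redundant bins for a real signal
  starts <- seq(1, length(x) - window_size + 1, by = stride)
  hann <- 0.5 - 0.5 * cos(2 * pi * (0:(window_size - 1)) / window_size)
  out <- matrix(0, nrow = length(starts), ncol = n_bins)
  for (i in seq_along(starts)) {
    chunk <- x[starts[i]:(starts[i] + window_size - 1)] * hann
    padded <- c(chunk, rep(0, fft_size - window_size))  # zero-pad to fft_size
    out[i, ] <- Mod(fft(padded))[1:n_bins]^2            # squared magnitude per bin
  }
  out  # rows = time chunks, columns = frequency bins
}

tone <- sin(2 * pi * 440 * (0:15999) / 16000)  # hypothetical 1 s, 440 Hz tone
s <- spectrogram_sketch(tone)
dim(s)  # 98 chunks x 257 frequency bins
```

Each row of `s` is the spectrum of one chunk. With 512-point FFTs the bins are 31.25 Hz apart, so the 440 Hz tone shows up in the bin at 437.5 Hz, the closest one.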

It is also common for speech recognition systems to further transform the spectrum and compute the Mel-frequency cepstral coefficients (MFCCs). This transformation takes into account the fact that the human ear cannot discern the difference between two closely spaced frequencies, and smartly creates bins on the frequency axis. A great tutorial on MFCCs can be found here.
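MFCCs are built on the mel scale, which is often written as m = 2595 log10(1 + f/700). That constant set is one common convention; other variants exist, and the choice is an assumption of this base R sketch. Nothing in the tutorial's pipeline uses this code.

The sketch shows the "smart binning" idea: band centers spaced evenly in mel become progressively wider apart in Hz.

```r
# Common mel-scale convention (assumed here; other variants exist).
hz_to_mel <- function(f) 2595 * log10(1 + f / 700)
mel_to_hz <- function(m) 700 * (10^(m / 2595) - 1)

# A 100 Hz step is a bigger perceptual step at low frequencies than at high ones.
low_step  <- hz_to_mel(200) - hz_to_mel(100)
high_step <- hz_to_mel(7100) - hz_to_mel(7000)

# 10 band centers spaced evenly in mel between 0 and 8000 Hz (Nyquist for 16 kHz audio).
centers_hz <- mel_to_hz(seq(hz_to_mel(0), hz_to_mel(8000), length.out = 10))
diff(centers_hz)  # gaps between neighbouring centers grow with frequency
```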

After this procedure, we have an image for each audio sample and can use convolutional neural networks, the standard architecture type in image recognition models.

Downloading

First, let's download the data into a directory in our project. You can download it either from this link (~1GB) or from R with:

```r
dir.create("data", showWarnings = FALSE)

# the archive is ~1GB: raise R's default 60-second download timeout
options(timeout = max(600, getOption("timeout")))

download.file(
  url = "http://download.tensorflow.org/data/speech_commands_v0.01.tar.gz",
  destfile = "data/speech_commands_v0.01.tar.gz"
)

untar("data/speech_commands_v0.01.tar.gz", exdir = "data/speech_commands_v0.01")
```

Inside the data directory we will have a folder called speech_commands_v0.01. The WAV audio files inside this directory are organized in sub-folders named after the labels. For example, all one-second audio files of people saying the word “bed” are inside the bed directory. There are 30 of these directories, plus a special one called _background_noise_, which contains various noise patterns that can be mixed in to simulate background noise.

Importing

In this step we will list all the audio .wav files in a tibble with 3 columns:

- fname: the file name;
- class: the label for each audio file;
- class_id: a unique zero-based integer for each class, used to one-hot encode the classes.

This will be useful in the next step, when we create a generator using the tfdatasets package.
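The import code itself is not reproduced in this text. One way to build such a table in base R is sketched below. The sketch:
- assumes the directory layout described above, with one sub-folder per label;
- uses `list.files()`, which may differ from the helper the original used;
- introduces a hypothetical helper, `build_index`;
- runs on a tiny, hypothetical folder tree created in a temporary directory.

```r
# Sketch: index .wav files by their parent folder name (assumed layout: <root>/<label>/<file>.wav).
build_index <- function(root) {
  files <- list.files(root, pattern = "\\.wav$", recursive = TRUE, full.names = TRUE)
  label <- basename(dirname(files))
  keep <- label != "_background_noise_"        # noise clips are not a word class
  files <- files[keep]
  label <- label[keep]
  data.frame(
    fname = files,
    class = label,
    class_id = as.integer(factor(label)) - 1L,  # zero-based ids, alphabetical order
    stringsAsFactors = FALSE
  )
}

# Hypothetical demo tree: two word folders plus a background-noise folder.
root <- file.path(tempdir(), "speech_commands_demo")
for (d in c("bed", "yes", "_background_noise_")) {
  dir.create(file.path(root, d), recursive = TRUE, showWarnings = FALSE)
}
invisible(file.create(
  file.path(root, "bed", "a.wav"),
  file.path(root, "yes", "b.wav"),
  file.path(root, "_background_noise_", "noise.wav")
))

df <- build_index(root)
df[, c("class", "class_id")]
```

On the real data, the same call would be `build_index("data/speech_commands_v0.01")`, which should yield class ids 0 to 29.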

The generator

We will now create our Dataset, which, in the context of tfdatasets, adds operations to the TensorFlow graph to read and preprocess the data. Since they are TensorFlow ops, they are executed in C++ and in parallel with model training.

The generator we will create will be responsible for reading the audio files from disk, creating the spectrogram for each one, and yielding the outputs.

Let's start by creating a dataset from slices of the data.frame with the audio file names and classes we just created, using tensor_slices_dataset().

Now, let's define the parameters for spectrogram creation. We need to define window_size_ms, which is the size in milliseconds of each chunk we will split the audio wave into, and window_stride_ms, the distance between the centers of adjacent chunks:

```r
window_size_ms <- 30
window_stride_ms <- 10
```

Now we will convert the window size and stride from milliseconds to samples. We are assuming that our audio files have 16,000 samples per second (1000 ms).

We will also compute other quantities that will be useful for spectrogram creation, like the number of chunks and the FFT size, i.e., the number of bins on the frequency axis. The function we are going to use to compute the spectrogram doesn't allow us to change the FFT size; instead, by default it uses the first power of 2 greater than the window size.

```r
window_size <- as.integer(16000*window_size_ms/1000)
stride <- as.integer(16000*window_stride_ms/1000)

# number of frequency bins (FFT size / 2 + 1) and number of chunks in a 1 s clip,
# computed the same way as inside data_generator() below
fft_size <- as.integer(2^trunc(log(window_size, 2)) + 1)
n_chunks <- length(seq(window_size/2, 16000 - window_size/2, stride))
```
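With the default settings, these formulas give concrete numbers:
- The chunk count is the length of the sequence of chunk centers, which runs from window_size/2 to 16000 minus window_size/2 in steps of stride.
- 257 is the number of non-redundant bins of a 512-point FFT, 512 being the first power of 2 above 480.

Both match the 98 × 257 spectrograms that appear in the batch output later.

$$
\begin{aligned}
\text{window\_size} &= 16000 \cdot \tfrac{30}{1000} = 480 \text{ samples}, \qquad
\text{stride} = 16000 \cdot \tfrac{10}{1000} = 160 \text{ samples},\\
n_{\text{chunks}} &= \left\lfloor \frac{(16000 - 240) - 240}{160} \right\rfloor + 1 = \frac{15520}{160} + 1 = 98,\\
\text{fft\_size} &= 2^{\lfloor \log_2 480 \rfloor} + 1 = 2^{8} + 1 = 257 = \frac{512}{2} + 1.
\end{aligned}
$$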

Now we will use dataset_map, which allows us to specify a preprocessing function for each observation (row) of our dataset. It is in this step that we read the raw audio file from disk and create its spectrogram and the one-hot encoded response vector.
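Two of the per-observation steps are simple elementwise transforms: log compression of the spectrogram and one-hot encoding of the zero-based class id. Here is a base R sketch of both, for intuition only; the pipeline uses `tf$log` and `tf$one_hot`. The helper names are hypothetical.

```r
# log(|s| + 0.01): compresses the large dynamic range of spectrogram power values;
# the 0.01 offset (the same as in the pipeline) keeps log() finite when s is 0.
log_compress <- function(s, offset = 0.01) log(abs(s) + offset)

# One-hot encoding of zero-based class ids, analogous to tf$one_hot(class_id, depth).
one_hot <- function(class_id, depth) {
  m <- matrix(0, nrow = length(class_id), ncol = depth)
  m[cbind(seq_along(class_id), class_id + 1L)] <- 1  # shift to R's 1-based columns
  m
}

one_hot(c(0L, 2L), depth = 3L)
log_compress(c(0, 1e4))
```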

```r
library(tensorflow)
library(tfdatasets)

# shortcut to the TensorFlow audio ops module
# (TensorFlow 1.x API: tf$contrib was removed in TensorFlow 2)
audio_ops <- tf$contrib$framework$python$ops$audio_ops

ds <- ds %>%
  dataset_map(function(obs) {

    # a good way to debug when building tfdatasets pipelines is to use a print
    # statement like this:
    # print(str(obs))

    # decoding wav files
    audio_binary <- tf$read_file(tf$reshape(obs$fname, shape = list()))
    wav <- audio_ops$decode_wav(audio_binary, desired_channels = 1)

    # create the spectrogram
    spectrogram <- audio_ops$audio_spectrogram(
      wav$audio,
      window_size = window_size,
      stride = stride,
      magnitude_squared = TRUE
    )

    # normalization: log-compress the power values (the offset avoids log(0))
    spectrogram <- tf$log(tf$abs(spectrogram) + 0.01)

    # moving channels to last dim
    spectrogram <- tf$transpose(spectrogram, perm = c(1L, 2L, 0L))

    # transform the class_id into a one-hot encoded vector
    response <- tf$one_hot(obs$class_id, 30L)

    list(spectrogram, response)
  })
```

Now we will specify how we want batches of observations to be produced from the dataset. We use dataset_shuffle because we want to shuffle the observations; otherwise they would follow the order of the df object. Then we use dataset_repeat to tell TensorFlow that we want to keep taking observations from the dataset even after all of them have been used. Most importantly, we use dataset_padded_batch to specify that we want batches of size 32 that are padded, i.e., if some observation has a different size, we pad it with zeros. The padded shape is passed to dataset_padded_batch through the padded_shapes argument. We use NULL for dimensions that only need to be padded up to the largest size in the batch. The channel dimension and the 30-element response always have the same size, so in practice they are not padded.
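Padding can be illustrated with a base R sketch. Observations with fewer rows (here, fewer time chunks) are filled with zeros up to a common size and then stacked along a new first, batch dimension. The helper `pad_batch` is hypothetical and unrelated to the tfdatasets API.

```r
# Hypothetical helper: zero-pad matrices (rows = time chunks) to n_rows and stack them.
pad_batch <- function(mats, n_rows) {
  n_cols <- ncol(mats[[1]])
  stopifnot(all(vapply(mats, nrow, integer(1)) <= n_rows))
  out <- array(0, dim = c(length(mats), n_rows, n_cols))  # zeros = padding value
  for (i in seq_along(mats)) {
    m <- mats[[i]]
    out[i, seq_len(nrow(m)), ] <- m  # copy the real rows; the rest stay zero
  }
  out
}

# A short clip (2 chunks) and a full one (4 chunks), each with 3 frequency bins.
mats <- list(matrix(1, nrow = 2, ncol = 3), matrix(2, nrow = 4, ncol = 3))
batch <- pad_batch(mats, n_rows = 4)
dim(batch)
```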

This is our dataset specification, but we would need to rewrite all the code for the validation data. It is good practice to wrap it into a function of the data and of other important parameters, like window_size_ms and window_stride_ms. Below, we define a function called data_generator that creates the generator depending on those inputs.

```r
data_generator <- function(df, batch_size, shuffle = TRUE,
                           window_size_ms = 30, window_stride_ms = 10) {

  # window and stride in samples, number of frequency bins, and number of chunks
  window_size <- as.integer(16000*window_size_ms/1000)
  stride <- as.integer(16000*window_stride_ms/1000)
  fft_size <- as.integer(2^trunc(log(window_size, 2)) + 1)
  n_chunks <- length(seq(window_size/2, 16000 - window_size/2, stride))

  ds <- tensor_slices_dataset(df)

  if (shuffle)
    ds <- ds %>% dataset_shuffle(buffer_size = 100)

  ds <- ds %>%
    dataset_map(function(obs) {

      # decoding wav files (audio_ops is the shortcut defined earlier)
      audio_binary <- tf$read_file(tf$reshape(obs$fname, shape = list()))
      wav <- audio_ops$decode_wav(audio_binary, desired_channels = 1)

      # create the spectrogram
      spectrogram <- audio_ops$audio_spectrogram(
        wav$audio,
        window_size = window_size,
        stride = stride,
        magnitude_squared = TRUE
      )

      # log-compress and move channels to the last dim
      spectrogram <- tf$log(tf$abs(spectrogram) + 0.01)
      spectrogram <- tf$transpose(spectrogram, perm = c(1L, 2L, 0L))

      # transform the class_id into a one-hot encoded vector
      response <- tf$one_hot(obs$class_id, 30L)

      list(spectrogram, response)
    }) %>%
    dataset_repeat()

  # pad each batch: spectrograms to (n_chunks, fft_size, channels), responses as-is
  ds <- ds %>%
    dataset_padded_batch(batch_size, list(shape(n_chunks, fft_size, NULL), shape(NULL)))

  ds
}
```

Now we can define the training and validation data generators. Executing this won't actually compute any spectrogram or read any file. It only defines, in the TensorFlow graph, how the data should be read and preprocessed.
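The calls that create `ds_train` and `ds_validation` are not shown in this text. Neither is the `id_train` index used later for predictions. A sketch of the split follows, assuming a random 70/30 split. The proportion, the seed, and the helper name `split_ids` are all our assumptions.

```r
# Hypothetical helper: row indices for a random training split.
split_ids <- function(n, train_frac = 0.7, seed = 1) {
  set.seed(seed)                           # reproducible split
  sample(n, size = round(train_frac * n))  # training rows; the rest go to validation
}

id_train <- split_ids(100)                 # e.g. a hypothetical 100-row table
length(id_train)                           # training rows
length(setdiff(1:100, id_train))           # validation rows
```

With the real table, the generators would then be created along the lines of `ds_train <- data_generator(df[id_train, ], batch_size = 32)` and `ds_validation <- data_generator(df[-id_train, ], batch_size = 32, shuffle = FALSE)`. Turning shuffling off for validation keeps the predictions aligned with the rows of `df[-id_train, ]`, which the prediction section relies on.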

To actually get a batch from the generator, we can create a TensorFlow session and ask it to run the generator. For example:

```r
sess <- tf$Session()
batch <- next_batch(ds_train)
str(sess$run(batch))
```

```
List of 2
 $ : num [1:32, 1:98, 1:257, 1] -4.6 -4.6 -4.61 -4.6 -4.6 ...
 $ : num [1:32, 1:30] 0 0 0 0 0 0 0 0 0 0 ...
```

Each time you run sess$run(batch), you should see a different batch of observations.

Model definition

Now that we know how we will feed our data we can focus on defining the model. A spectrogram can be treated like an image, so architectures commonly used in image recognition tasks should also work well with spectrograms.

We will build a convolutional neural network similar to the one we built here for the MNIST dataset.

The input size is defined by the number of chunks and the FFT size. As explained earlier, they can be obtained from the window_size_ms and window_stride_ms used to generate the spectrogram.

We will now define our model using the Keras Sequential API:

```r
library(keras)

model <- keras_model_sequential()
model %>%
  layer_conv_2d(input_shape = c(n_chunks, fft_size, 1),
                filters = 32, kernel_size = c(3,3), activation = 'relu') %>%
  layer_max_pooling_2d(pool_size = c(2, 2)) %>%
  layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>%
  layer_max_pooling_2d(pool_size = c(2, 2)) %>%
  layer_conv_2d(filters = 128, kernel_size = c(3,3), activation = 'relu') %>%
  layer_max_pooling_2d(pool_size = c(2, 2)) %>%
  layer_conv_2d(filters = 256, kernel_size = c(3,3), activation = 'relu') %>%
  layer_max_pooling_2d(pool_size = c(2, 2)) %>%
  layer_dropout(rate = 0.25) %>%
  layer_flatten() %>%
  layer_dense(units = 128, activation = 'relu') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 30, activation = 'softmax')
```

We used 4 convolutional layers, combined with max pooling layers, to extract features from the spectrogram images, and 2 dense layers on top. Our network is relatively simple compared to more advanced architectures like ResNet or DenseNet, which perform very well on image recognition tasks.
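As a sanity check on the architecture, this base R sketch traces the feature-map size through the convolution and pooling stack. It assumes Keras defaults: 'valid' padding and stride 1 for the convolutions, and a pooling stride equal to the pool size. It also assumes the 98 × 257 input computed from the 30 ms / 10 ms settings. The helper `trace_shapes` is hypothetical.

```r
# Trace (height, width) through n_blocks x [3x3 conv ('valid') -> 2x2 max pool].
trace_shapes <- function(h, w, n_blocks = 4L, kernel = 3L, pool = 2L) {
  for (i in seq_len(n_blocks)) {
    h <- h - kernel + 1L; w <- w - kernel + 1L  # 'valid' conv shrinks by kernel - 1
    h <- h %/% pool;      w <- w %/% pool       # pooling floors the division
    cat(sprintf("after block %d: %d x %d\n", i, h, w))
  }
  c(h, w)
}

final <- trace_shapes(98L, 257L)
prod(final) * 256  # flattened length fed to the first dense layer (256 filters)
```

The flattened vector is large, which matters for the first dense layer's size.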

Now we compile our model. We will use categorical cross-entropy as the loss function and use the Adadelta optimizer. Here we also specify that we will look at the accuracy metric during training.
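For a one-hot target, categorical cross-entropy reduces to minus the log of the probability the model assigns to the true class. Below is a base R sketch of the per-observation loss. The clipping constant 1e-7 is an assumption, used only to avoid log(0).

```r
# Categorical cross-entropy for one-hot targets, one value per row (observation).
categorical_crossentropy <- function(y_true, y_pred, eps = 1e-7) {
  y_pred <- pmin(pmax(y_pred, eps), 1 - eps)  # clip probabilities away from 0 and 1
  -rowSums(y_true * log(y_pred))
}

y_true <- rbind(c(0, 1, 0), c(1, 0, 0))       # true classes 1 and 0 (zero-based)
y_pred <- rbind(c(0.25, 0.5, 0.25), c(0.9, 0.05, 0.05))
loss <- categorical_crossentropy(y_true, y_pred)
loss
```

In the R keras package, the corresponding setup would be along the lines of `model %>% compile(loss = "categorical_crossentropy", optimizer = optimizer_adadelta(), metrics = "accuracy")`. The tutorial's exact call is not shown here.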

Model fitting

Now we will fit our model. In Keras we can use TensorFlow datasets as inputs to the fit_generator function, and that is what we do here.

```
Epoch 1/10
1415/1415 [==============================] - 87s 62ms/step - loss: 2.0225 - acc: 0.4184 - val_loss: 0.7855 - val_acc: 0.7907
Epoch 2/10
1415/1415 [==============================] - 75s 53ms/step - loss: 0.8781 - acc: 0.7432 - val_loss: 0.4522 - val_acc: 0.8704
Epoch 3/10
1415/1415 [==============================] - 75s 53ms/step - loss: 0.6196 - acc: 0.8190 - val_loss: 0.3513 - val_acc: 0.9006
Epoch 4/10
1415/1415 [==============================] - 75s 53ms/step - loss: 0.4958 - acc: 0.8543 - val_loss: 0.3130 - val_acc: 0.9117
Epoch 5/10
1415/1415 [==============================] - 75s 53ms/step - loss: 0.4282 - acc: 0.8754 - val_loss: 0.2866 - val_acc: 0.9213
Epoch 6/10
1415/1415 [==============================] - 76s 53ms/step - loss: 0.3852 - acc: 0.8885 - val_loss: 0.2732 - val_acc: 0.9252
Epoch 7/10
1415/1415 [==============================] - 75s 53ms/step - loss: 0.3566 - acc: 0.8991 - val_loss: 0.2700 - val_acc: 0.9269
Epoch 8/10
1415/1415 [==============================] - 76s 54ms/step - loss: 0.3364 - acc: 0.9045 - val_loss: 0.2573 - val_acc: 0.9284
Epoch 9/10
1415/1415 [==============================] - 76s 53ms/step - loss: 0.3220 - acc: 0.9087 - val_loss: 0.2537 - val_acc: 0.9323
Epoch 10/10
1415/1415 [==============================] - 76s 54ms/step - loss: 0.2997 - acc: 0.9150 - val_loss: 0.2582 - val_acc: 0.9323
```

The model reaches 93.23% accuracy on the validation set after 10 epochs. Let's see how to make predictions and take a look at the confusion matrix.

Making predictions

We can use the predict_generator function to make predictions on a new dataset. Let's make predictions for our validation dataset. The predict_generator function needs a steps argument, which is the number of times the generator will be called.

We can calculate the number of steps from the batch size and the size of the validation dataset.

```r
df_validation <- df[-id_train,]
# enough batches of 32 to cover every validation observation once
n_steps <- as.integer(ceiling(nrow(df_validation)/32))
```

Then we can use the predict_generator function:

```r
predictions <- predict_generator(
  model,
  ds_validation,
  steps = n_steps
)
str(predictions)
```

```
num [1:19424, 1:30] 1.22e-13 7.30e-19 5.29e-10 6.66e-22 1.12e-17 ...
```

This outputs a matrix with 30 columns, one for each word, and n_steps*batch_size rows. Note that the generator starts repeating the dataset at the end in order to fill the last batch.
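Because the validation generator repeats, the last batch is filled with observations from the start of the data. The prediction matrix can therefore have more rows than `df_validation`. A base R sketch of the bookkeeping follows. It assumes a hypothetical 100-row validation set and an unshuffled generator, so the wrapped rows are the first observations again.

```r
batch_size <- 32
n_val <- 100                                # hypothetical validation size
n_steps <- ceiling(n_val / batch_size)      # batches needed to see every row once
n_rows <- n_steps * batch_size              # rows the generator yields
extra <- n_rows - n_val                     # wrapped-around rows to discard

# Which observation each predicted row belongs to: the tail repeats the first rows.
row_ids <- rep_len(seq_len(n_val), n_rows)
kept <- head(row_ids, n_val)                # keep only the first n_val predictions
```

This is why the predicted classes are truncated with `head(classes, nrow(df_validation))` before being compared with the true labels.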

For example, we can obtain the predicted class by taking the column with the highest probability:

```r
classes <- apply(predictions, 1, which.max) - 1
```

A nice way to visualize the confusion matrix is to create an alluvial diagram:

```r
library(dplyr)
library(alluvial)

x <- df_validation %>%
  mutate(pred_class_id = head(classes, nrow(df_validation))) %>%
  left_join(
    df_validation %>% distinct(class_id, class) %>% rename(pred_class = class),
    by = c("pred_class_id" = "class_id")
  ) %>%
  mutate(correct = pred_class == class) %>%
  count(pred_class, class, correct)

alluvial(
  x %>% select(class, pred_class),
  freq = x$n,
  col = ifelse(x$correct, "lightblue", "red"),
  border = ifelse(x$correct, "lightblue", "red"),
  alpha = 0.6,
  hide = x$n < 20
)
```

We can see from the diagram that the most relevant mistake our model makes is classifying “tree” as “three”. Other common errors are classifying “go” as “no” and “up” as “off”. At 93% accuracy for 30 classes, and considering these errors, we can say that this model is pretty reasonable.
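The alluvial diagram is one view of the confusion matrix; a plain contingency table works too. Below is a base R sketch on hypothetical probabilities and class names, not the tutorial's results. It goes from a probability matrix to predicted classes, then to the table and the accuracy.

```r
# Hypothetical predicted probabilities for 4 observations and 3 classes.
probs <- rbind(
  c(0.7, 0.2, 0.1),
  c(0.1, 0.8, 0.1),
  c(0.2, 0.5, 0.3),
  c(0.1, 0.1, 0.8)
)
true_class <- c(0L, 1L, 2L, 2L)                  # zero-based, like class_id
pred_class <- apply(probs, 1, which.max) - 1L    # most likely column, zero-based

labels <- c("yes", "no", "up")                   # hypothetical class names
conf <- table(
  true = factor(labels[true_class + 1L], levels = labels),
  pred = factor(labels[pred_class + 1L], levels = labels)
)
conf                                             # rows = true, columns = predicted
accuracy <- sum(diag(conf)) / sum(conf)          # correct predictions on the diagonal
```

The off-diagonal cells are the confusions: here one "up" observation is predicted as "no". In the real table, the “tree” row would show its count under “three”.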

The saved model occupies 25 MB of disk space, which is reasonable for a desktop but may not be for small devices. We could train a smaller model, with fewer layers, and see how much the performance decreases.
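To see where the disk usage comes from, we can count the model's parameters by hand. This base R sketch makes three assumptions:
- the layer sizes are those defined in the model above;
- the input is 98 × 257 × 1, so with Keras' default 'valid' padding the flattened feature map has 4 × 14 × 256 values;
- weights are 4-byte float32 values.

The helper names are hypothetical.

```r
# Conv layer: kernel_h * kernel_w * in_channels * filters weights, plus one bias per filter.
conv_params  <- function(k, c_in, c_out) k * k * c_in * c_out + c_out
# Dense layer: n_in * n_out weights, plus one bias per unit.
dense_params <- function(n_in, n_out) n_in * n_out + n_out

params <- c(
  conv1  = conv_params(3, 1, 32),
  conv2  = conv_params(3, 32, 64),
  conv3  = conv_params(3, 64, 128),
  conv4  = conv_params(3, 128, 256),
  dense1 = dense_params(4 * 14 * 256, 128),  # flattened feature map -> 128 units
  dense2 = dense_params(128, 30)             # 30 output classes
)
total <- sum(params)
total             # total trainable parameters
total * 4 / 1e6   # approximate size of the weights alone, in MB (float32)
```

Most of the weights sit in the first dense layer. Shrinking the feature map before flattening, or using fewer dense units, would therefore reduce the size the most. The weights alone come to roughly 9 MB. A saved Keras model file can be larger, because it may also store the optimizer state and the model configuration.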

It is also common in speech recognition tasks to add some form of data augmentation by adding background noise to the spoken audio, which makes it more useful for real applications where other unrelated sounds are common in the environment.
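Here is a minimal base R sketch of this kind of augmentation. It takes a random one-second slice of a longer noise recording, such as the clips in _background_noise_, and adds it to the speech signal, scaled to a chosen signal-to-noise ratio (SNR). Setting the noise level through an SNR is our assumption; it is one common approach. The signals are synthetic stand-ins, and the helper `mix_noise` is hypothetical.

```r
# Mix a random slice of `noise` into `signal` at a target SNR in dB (hypothetical helper).
mix_noise <- function(signal, noise, snr_db) {
  start <- sample(length(noise) - length(signal) + 1, 1)  # random offset into the noise clip
  slice <- noise[start:(start + length(signal) - 1)]
  p_signal <- mean(signal^2)
  p_noise <- mean(slice^2)
  # choose scale so that p_signal / (scale^2 * p_noise) = 10^(snr_db / 10)
  scale <- sqrt(p_signal / (p_noise * 10^(snr_db / 10)))
  list(mixed = signal + scale * slice, noise = scale * slice)
}

set.seed(42)
speech <- sin(2 * pi * 300 * (0:15999) / 16000)  # stand-in for a 1 s utterance
noise  <- rnorm(16000 * 5)                        # stand-in for a 5 s noise clip
out <- mix_noise(speech, noise, snr_db = 10)
achieved <- 10 * log10(mean(speech^2) / mean(out$noise^2))
achieved                                          # SNR of the mix, in dB
```

Drawing a new offset and SNR for each training example would expose the model to many different noise conditions.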

The complete code to reproduce this tutorial is available here.