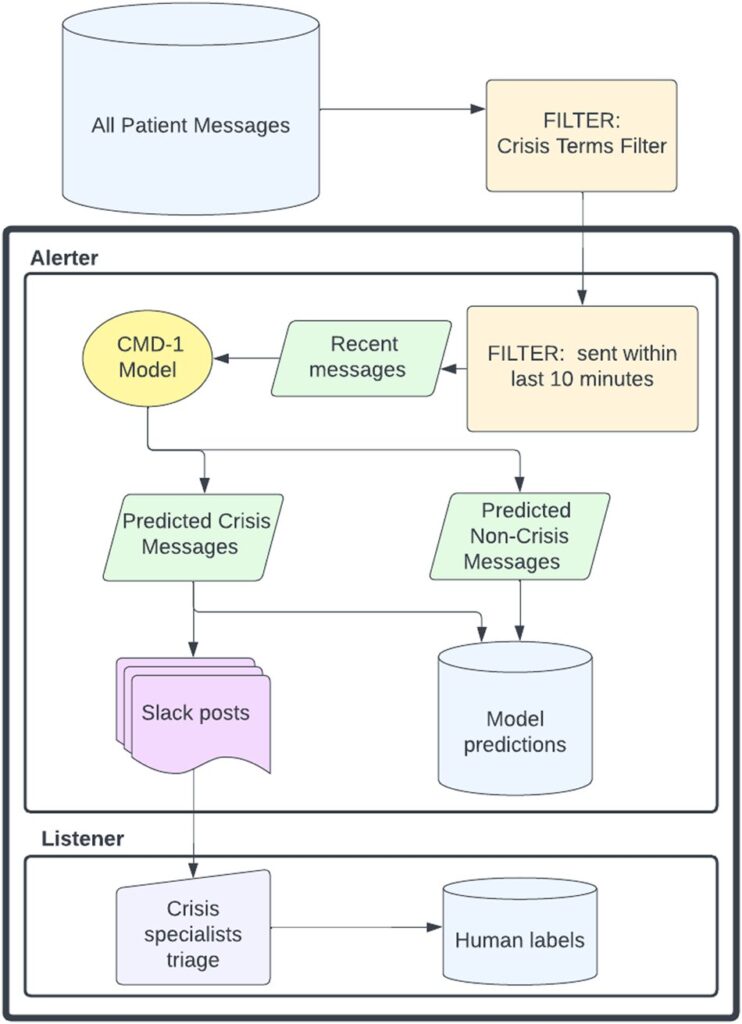

Diagram showing the flow of patient messages through the CMD-1 system in a model deployment. Credit: NPJ Digital Medicine (2023). DOI: 10.1038/s41746-023-00951-3

Mental health needs are increasing. Today one in five Americans lives with a mental health condition, and suicide rates have increased by more than 30% in the past two decades. Organizations like the National Alliance on Mental Illness (NAMI), which provides free support for those experiencing crisis, saw a 60 percent increase in help-seekers between 2019 and 2021.

To cope with this increase, organizations and healthcare providers are turning to digital tools. Technology platforms such as crisis hotlines, text lines, and online chat lines provide support from trained staff for patients in crisis. Yet dropped call rates for such organizations are as high as 25%. Furthermore, most of these services are siloed from callers’ physicians.

One of the primary factors contributing to this high attrition rate is that patient demand far exceeds the number of available responders. In 2020, the National Suicide Prevention Lifeline reported a response rate of only 30% for chat and 56% for text messages, leaving many patients in crisis without help. In addition, these systems use a standard queuing method for incoming messages: patients are served on a first-come, first-served basis, regardless of how urgent their need is.

What if these platforms could distinguish between urgent and non-urgent messages, thereby improving efficiency in handling crisis cases?
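The difference between the two queuing policies can be illustrated with a short Python sketch. The `Message` fields, the `is_crisis` flag and the sample texts are hypothetical; in practice the flag would have to come from a classifier, and the article does not describe any platform's queuing code.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    arrival: int     # order in which the message reached the queue
    text: str
    is_crisis: bool  # hypothetical urgency flag, e.g. supplied by a classifier


def fifo_order(messages: List[Message]) -> List[str]:
    """First-come, first-served: serve strictly by arrival order."""
    return [m.text for m in sorted(messages, key=lambda m: m.arrival)]


def triage_order(messages: List[Message]) -> List[str]:
    """Urgency-first: crisis messages jump the line; ties keep arrival order."""
    heap = [(0 if m.is_crisis else 1, m.arrival, m.text) for m in messages]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


inbox = [
    Message(1, "Can I move my appointment to Friday?", False),
    Message(2, "I need a refill on my prescription", False),
    Message(3, "I have been thinking about hurting myself", True),
]

print(fifo_order(inbox))    # the crisis message is served last
print(triage_order(inbox))  # the crisis message is served first
```

Sorting on the tuple (priority, arrival) keeps the queue fair among messages of equal urgency while letting crisis messages move ahead.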

That’s exactly what Akshay Swaminathan and Ivan Lopez—Stanford medical students—set out to do with their team of interdisciplinary collaborators, including clinical experts and operational leaders at Cerebral, a national online mental health company, where Swaminathan leads Data Science.

The research team includes Jonathan Chen, a Stanford HAI affiliate and assistant professor of medicine in the Stanford Center for Biomedical Informatics Research, and Olivier Gevaert, Stanford associate professor of medicine and of biomedical data science. The authors published their work in NPJ Digital Medicine.

Using natural language processing, the team developed a machine learning (ML) system called Crisis Message Detector 1 (CMD-1) that can identify and automatically triage crisis messages, reducing patient wait times from more than 10 hours to less than 10 minutes.

“For suicidal clients, wait times were too long. Our research shows that data science and ML can be successfully integrated into clinical workflows, leading to dramatic improvements in identifying patients at risk and automating really manual tasks,” says Swaminathan.

Their results highlight the value of CMD-1 in scenarios where speed is critical. “CMD-1 increases the efficiency of crisis response teams, allowing them to handle more cases. With faster triage, urgent cases can be prioritized and resources can be allocated more efficiently,” Lopez says.

Empowering crisis professionals

The data the team used to build CMD-1 came from Cerebral, which receives thousands of patient messages daily through its chat system. These messages range from routine topics such as appointment scheduling and medication refills to messages from patients in emergency situations.

Starting with a random sample of 200,000 messages, they labeled patient messages as “crisis” or “non-crisis” using a filter based on factors such as key crisis words and whether the patient had reported a crisis within the previous week. Crisis messages warranting further attention included expressions of suicidal or homicidal ideation, domestic violence, or non-suicidal self-injury (self-harm).
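A labeling filter of this kind can be sketched in a few lines of Python. This is not the study's filter: the keyword list, the seven-day window check and the `crisis_reports` lookup are hypothetical stand-ins for the factors the article mentions. Simple keyword rules both over- and under-flag, so labels produced this way are best treated as a noisy first pass.

```python
from datetime import datetime, timedelta
from typing import Dict

# Hypothetical keyword list; the study's actual crisis terms are not given in the article.
CRISIS_KEYWORDS = ("suicide", "suicidal", "kill myself", "hurt myself", "self-harm", "i need help")
RECENT_WINDOW = timedelta(days=7)  # "within the previous week"


def label_message(text: str, patient_id: str, sent_at: datetime,
                  crisis_reports: Dict[str, datetime]) -> str:
    """Label a message 'crisis' if it contains a crisis keyword or the patient
    reported a crisis in the week before it was sent; otherwise 'non-crisis'."""
    lowered = text.lower()
    if any(keyword in lowered for keyword in CRISIS_KEYWORDS):
        return "crisis"  # vague pleas like "I need help" are deliberately included
    last_report = crisis_reports.get(patient_id)
    if last_report is not None and timedelta(0) <= sent_at - last_report <= RECENT_WINDOW:
        return "crisis"
    return "non-crisis"


# Hypothetical record: patient "p2" reported a crisis on 1 May 2023.
reports = {"p2": datetime(2023, 5, 1)}
print(label_message("I need help", "p1", datetime(2023, 5, 3), reports))        # crisis
print(label_message("Can I reschedule?", "p2", datetime(2023, 5, 3), reports))  # crisis
print(label_message("Can I reschedule?", "p3", datetime(2023, 5, 3), reports))  # non-crisis
```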

“For messages that are vague, like ‘I need help,’ we erred on the side of calling it a crisis. From that sentence alone, you don’t know whether the patient needs help making an appointment or needs help getting out of bed,” says Swaminathan.

The team was also steadfast in ensuring that their approach would complement, not replace, human review. CMD-1 surfaces crisis messages and sends them to human reviewers through a Slack interface as part of a dedicated crisis response workflow. Any true crisis messages that the model fails to surface (false negatives) are still reviewed by humans as part of the routine chat support workflow.
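The human-in-the-loop routing can be sketched as follows. The scoring function, the threshold and the in-memory queues are hypothetical; the deque standing in for the crisis queue is only a placeholder for the Slack-based review, and no Slack API is used. The sketch assumes that flagged messages are copied to the fast queue while every message also stays in the routine queue, so a false negative is delayed rather than lost.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Tuple


def route(
    messages: Iterable[str],
    crisis_probability: Callable[[str], float],
    threshold: float,
) -> Tuple[Deque[str], Deque[str]]:
    """Send high-scoring messages to a fast crisis queue; keep every message
    in the routine support queue so that a human eventually reads it."""
    crisis_queue: Deque[str] = deque()
    routine_queue: Deque[str] = deque()
    for msg in messages:
        if crisis_probability(msg) >= threshold:
            crisis_queue.append(msg)  # surfaced immediately for crisis review
        routine_queue.append(msg)     # safety net for missed crises (false negatives)
    return crisis_queue, routine_queue


def toy_scorer(text: str) -> float:
    """Hypothetical stand-in for a trained model such as CMD-1."""
    return 0.9 if "hurting myself" in text.lower() else 0.1


crisis, routine = route(
    ["Can I get a refill?", "I keep thinking about hurting myself"],
    toy_scorer,
    threshold=0.5,
)
print(list(crisis))   # ['I keep thinking about hurting myself']
print(list(routine))  # ['Can I get a refill?', 'I keep thinking about hurting myself']
```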

As Lopez says, “This approach is critical to minimizing the risk of false negatives as much as possible. Ultimately, the human element in evaluating and interpreting messages ensures a balance between technical efficiency and compassionate care, which is essential in the context of mental health emergencies.”

Given the sensitivity of the subject area, the team was extremely conservative in how it classified messages. They weighed false negatives (missing a true crisis message) against false positives (incorrectly flagging a non-crisis message) and, working with clinical stakeholders, determined that a false negative was 20 times more costly than a false positive.
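A 20-to-1 cost ratio translates into a concrete cutoff under one textbook assumption: that the model's scores are calibrated probabilities. Writing $p$ for the predicted probability that a message is a crisis, $C_{\text{FN}} = 20$ for the cost of a missed crisis and $C_{\text{FP}} = 1$ for the cost of a false alarm, flagging a message has the lower expected cost when

$$
\underbrace{p \cdot C_{\text{FN}}}_{\text{expected cost of not flagging}} > \underbrace{(1-p) \cdot C_{\text{FP}}}_{\text{expected cost of flagging}}
\iff p > \frac{C_{\text{FP}}}{C_{\text{FP}} + C_{\text{FN}}} = \frac{1}{1 + 20} \approx 0.048
$$

This is the standard Bayes decision rule, offered only as an illustration: the article does not say the team derived their threshold this way, and scores from real classifiers often need calibration before the formula applies.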

“This is a really key point when it comes to deploying ML models. Any ML model that makes a classification, be it ‘crisis’ or ‘no crisis,’ first assigns a probability between zero and one. It generates a probability that the message is a crisis, but we have to pick a threshold above which the model calls it a crisis and below which the model says it’s not a crisis. Choosing that cutoff is an important decision, and it shouldn’t be made by the people building the model; it should be made by the end users of the model. For us, that’s the clinical teams,” says Swaminathan.
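Swaminathan's point, that clinicians set the costs and the cutoff follows from them, can be illustrated by sweeping candidate thresholds over a labeled validation set and keeping the cheapest one. This is a minimal sketch with made-up scores and labels; `expected_cost` and `pick_threshold` are hypothetical helpers, not part of CMD-1, and the 20-to-1 weighting is the only number taken from the article.

```python
from typing import Sequence


def expected_cost(threshold: float, scores: Sequence[float], labels: Sequence[bool],
                  fn_cost: float = 20.0, fp_cost: float = 1.0) -> float:
    """Total misclassification cost if messages scoring >= threshold are flagged."""
    cost = 0.0
    for score, is_crisis in zip(scores, labels):
        flagged = score >= threshold
        if is_crisis and not flagged:
            cost += fn_cost  # missed crisis (false negative)
        elif flagged and not is_crisis:
            cost += fp_cost  # unnecessary escalation (false positive)
    return cost


def pick_threshold(scores: Sequence[float], labels: Sequence[bool],
                   fn_cost: float = 20.0, fp_cost: float = 1.0) -> float:
    """Try each observed score as a cutoff; return the cheapest (lowest on ties)."""
    candidates = sorted(set(scores))
    return min(candidates, key=lambda t: expected_cost(t, scores, labels, fn_cost, fp_cost))


# Made-up validation data: model scores and clinician-assigned labels.
scores = [0.05, 0.20, 0.40, 0.50, 0.60, 0.80, 0.90]
labels = [False, False, True, False, False, True, True]

print(pick_threshold(scores, labels))               # 0.4 with the 20-to-1 weighting
print(pick_threshold(scores, labels, fn_cost=1.0))  # 0.8 with equal costs
```

With the 20-to-1 penalty the sweep settles on 0.40, accepting two false alarms so as not to miss the crisis message scored 0.40; with equal costs it moves up to 0.80 and misses that message.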

Notably, CMD-1 detected high-risk messages with 97% sensitivity and 97% specificity, and the team reduced the response time for help-seekers from more than 10 hours to less than 10 minutes. This speed is critical, as rapid intervention has the potential to divert high-risk patients from suicide attempts.
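Sensitivity is the share of true crisis messages the model flags, and specificity is the share of non-crisis messages it correctly leaves unflagged. The Python sketch below computes both; the counts (100 crisis and 1,000 non-crisis messages) are invented purely to reproduce the 97% figures and are not the study's data.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(predicted: Sequence[bool],
                            actual: Sequence[bool]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary crisis predictions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # crises correctly flagged
    fn = sum(1 for p, a in pairs if not p and a)      # crises missed
    tn = sum(1 for p, a in pairs if not p and not a)  # non-crises correctly left alone
    fp = sum(1 for p, a in pairs if p and not a)      # false alarms
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical evaluation set: 100 crisis and 1,000 non-crisis messages.
actual = [True] * 100 + [False] * 1000
predicted = [True] * 97 + [False] * 3 + [False] * 970 + [True] * 30

sens, spec = sensitivity_specificity(predicted, actual)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.97, specificity=0.97
```

In this made-up set, 97% specificity still means 30 false alarms alongside 97 correctly flagged crises, the kind of trade-off a 20-to-1 cost weighting deliberately accepts.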

The potential of ML in health care

Given their results, the team hopes to see more machine learning models deployed in healthcare settings. Such deployments are currently rare, because putting a model into clinical use requires careful translation and close attention to technical infrastructure and operational considerations.

“Often, data scientists create highly accurate ML models without fully addressing stakeholders’ pain points. As a result, these models, although technically proficient, may create additional work or fail to integrate seamlessly into existing clinical workflows. For more widespread adoption, data scientists should involve healthcare professionals from the start, ensuring that models address the challenges they are designed to solve, streamline rather than complicate tasks, and fit organically within existing clinical infrastructure,” Lopez says.

The CMD-1 development team took a unique approach, assembling a cross-functional team of clinicians and data scientists to ensure that the model met key clinical parameters and could deliver meaningful results in real clinical operations settings. “What is compelling about the system developed here is not just the analytical result, but the very difficult task of integrating it into a real workflow that enables human patients and clinicians to reach each other at a critical moment,” says Chen.

This cross-functional approach, combined with the remarkable results of CMD-1, showed Swaminathan and Lopez how technology could be used to increase the impact of clinicians. Swaminathan says, “This is the direction AI in medicine is moving towards, where we can make healthcare delivery more human, make clinicians’ lives easier, and use data to empower them to provide higher-quality care.”

More information:

Akshay Swaminathan et al., A Natural Language Processing System for Rapid Detection and Intervention of Mental Health Crisis Chat Messages, NPJ Digital Medicine (2023). DOI: 10.1038/s41746-023-00951-3

Journal Information:

NPJ Digital Medicine