Humans excel at processing vast arrays of visual information, a skill that is crucial to achieving artificial general intelligence (AGI). Over the decades, AI researchers have developed visual question answering (VQA) systems to interpret scenes within a single image and answer related questions. Although recent advances in foundation models have significantly narrowed the gap between human and machine visual processing, traditional VQA has been limited to reasoning about only one image at a time rather than an entire collection of visual data.

This limitation creates challenges in more complex scenarios. Consider, for example, discerning patterns in collections of medical images, monitoring deforestation through satellite imagery, mapping urban change using autonomous navigation data, analyzing thematic elements across large art collections, or understanding consumer behavior from retail surveillance footage. Each of these scenarios requires not only visual processing of hundreds or thousands of images but also cross-image processing of the results. To fill this gap, this project focuses on the “multi-image question answering” (MIQA) task, which is beyond the reach of traditional VQA systems.

Visual Haystacks: The first “visual-centric” Needle-In-A-Haystack (NIAH) benchmark designed to rigorously evaluate large multimodal models (LMMs) in processing long-context visual information.

How to benchmark VQA models on MIQA?

The “Needle-In-A-Haystack” (NIAH) challenge has recently become a popular way to benchmark an LLM's ability to handle “long context”: large sets of input data such as long documents, videos, or hundreds of images. In this task, the essential information (the “needle”) that answers a specific question is embedded within a large amount of data (the “haystack”). The system must then retrieve the relevant information and answer the question correctly.
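To make the NIAH setup concrete, here is a minimal Python sketch of how a single haystack sample might be assembled. One needle image is inserted at a chosen or random position in a shuffled list of distractors. The names `build_niah_sample` and `NIAHSample`, and the file names, are hypothetical. This is not the VHs construction code.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NIAHSample:
    images: List[str]   # image identifiers, in the order the model will see them
    needle_index: int   # index of the needle image within `images`
    question: str


def build_niah_sample(needle: str, distractors: List[str], question: str,
                      position: Optional[int] = None, seed: int = 0) -> NIAHSample:
    """Embed one needle image among distractors (hypothetical helper).

    If `position` is None, the needle goes into a random slot.
    """
    rng = random.Random(seed)
    haystack = list(distractors)
    rng.shuffle(haystack)  # distractor order should carry no signal
    if position is None:
        position = rng.randint(0, len(haystack))  # inclusive: last slot allowed
    if not 0 <= position <= len(haystack):
        raise ValueError(f"position must be in [0, {len(haystack)}]")
    haystack.insert(position, needle)
    return NIAHSample(images=haystack, needle_index=position, question=question)


distractors = [f"distractor_{i:03d}.jpg" for i in range(99)]
sample = build_niah_sample("needle.jpg", distractors,
                           "For the image with a dog, is there a frisbee?", position=0)
print(len(sample.images), sample.images[0])  # 100 needle.jpg
```

Fixing `position` explicitly is what allows the needle-position experiments discussed later. Leaving it as `None` gives a randomized haystack.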

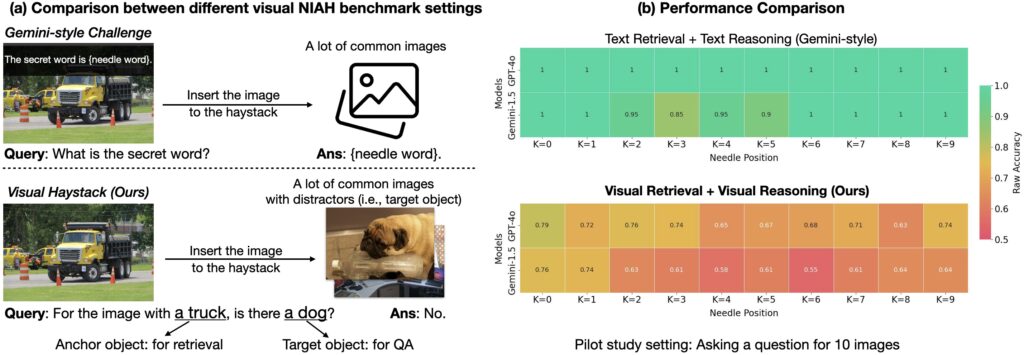

The first NIAH benchmark for visual reasoning was introduced by Google in the Gemini-v1.5 technical report. In this report, they asked their models to retrieve text overlaid on a single frame of a long video. It turns out that current models perform quite well at this task, mainly because of their strong OCR retrieval capabilities. But what if we ask more visual questions? Do models still perform as well?

What is the Visual Haystacks (VHs) benchmark?

In pursuit of evaluating “visual-centric” long-context reasoning capabilities, we introduce the “Visual Haystacks (VHs)” benchmark. This new benchmark is designed to evaluate large multimodal models (LMMs) on visual retrieval and reasoning across large sets of uncorrelated images. VHs contains about 1K binary question-answer pairs, with each set containing anywhere from 1 to 10K images. Unlike previous benchmarks that focused on textual retrieval and reasoning, VHs questions center on recognizing the presence of specific visual content, such as objects, using images and annotations from the COCO dataset.

The VHs benchmark is divided into two main challenges. Each is designed to test the model's ability to accurately locate and analyze the relevant images before answering questions. We carefully designed the dataset so that guessing, or relying on common-sense reasoning without seeing the images, provides no advantage (i.e., it yields 50% accuracy on a binary QA task).

- The Single-Needle Challenge: The haystack contains only one needle image. The question is formulated as, “For the image with the anchor object, is there a target object?”

- The Multi-Needle Challenge: The haystack contains two to five needle images. The question is formulated as either “For all images with the anchor object, do all of them contain the target object?” or “For all images with the anchor object, do any of them contain the target object?”
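The answer semantics of both challenges can be sketched directly from COCO-style object annotations. This minimal sketch rests on an assumption and is not the VHs generation pipeline: a needle is taken to be any image whose annotations include the anchor object. The single-needle answer checks that one image for the target, and the multi-needle answer applies `all` or `any` across the two to five needles. The toy annotation dict and function names are hypothetical. The sketch also omits the distractor selection that keeps answers balanced at 50%.

```python
from typing import Dict, List, Set

# Hypothetical COCO-style lookup: image id -> object categories annotated in it.
Annotations = Dict[str, Set[str]]


def find_needles(images: List[str], ann: Annotations, anchor: str) -> List[str]:
    """Needle images are the ones whose annotations include the anchor object."""
    return [img for img in images if anchor in ann[img]]


def single_needle_answer(images: List[str], ann: Annotations,
                         anchor: str, target: str) -> bool:
    """'For the image with the anchor object, is there a target object?'"""
    needles = find_needles(images, ann, anchor)
    if len(needles) != 1:
        raise ValueError("single-needle questions need exactly one anchor image")
    return target in ann[needles[0]]


def multi_needle_answer(images: List[str], ann: Annotations,
                        anchor: str, target: str, mode: str) -> bool:
    """'For all images with the anchor object, do all/any contain the target?'"""
    needles = find_needles(images, ann, anchor)
    if not 2 <= len(needles) <= 5:
        raise ValueError("multi-needle questions use two to five anchor images")
    hits = [target in ann[img] for img in needles]
    if mode == "all":
        return all(hits)
    if mode == "any":
        return any(hits)
    raise ValueError("mode must be 'all' or 'any'")


# Toy annotations for illustration only.
ann = {"a.jpg": {"dog", "frisbee"}, "b.jpg": {"cat"},
       "c.jpg": {"dog"}, "d.jpg": {"car"}}
print(single_needle_answer(["a.jpg", "b.jpg", "d.jpg"], ann, "dog", "frisbee"))  # True
print(multi_needle_answer(["a.jpg", "b.jpg", "c.jpg"], ann, "dog", "frisbee", "all"))  # False
print(multi_needle_answer(["a.jpg", "b.jpg", "c.jpg"], ann, "dog", "frisbee", "any"))  # True
```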

Three main results from VHs

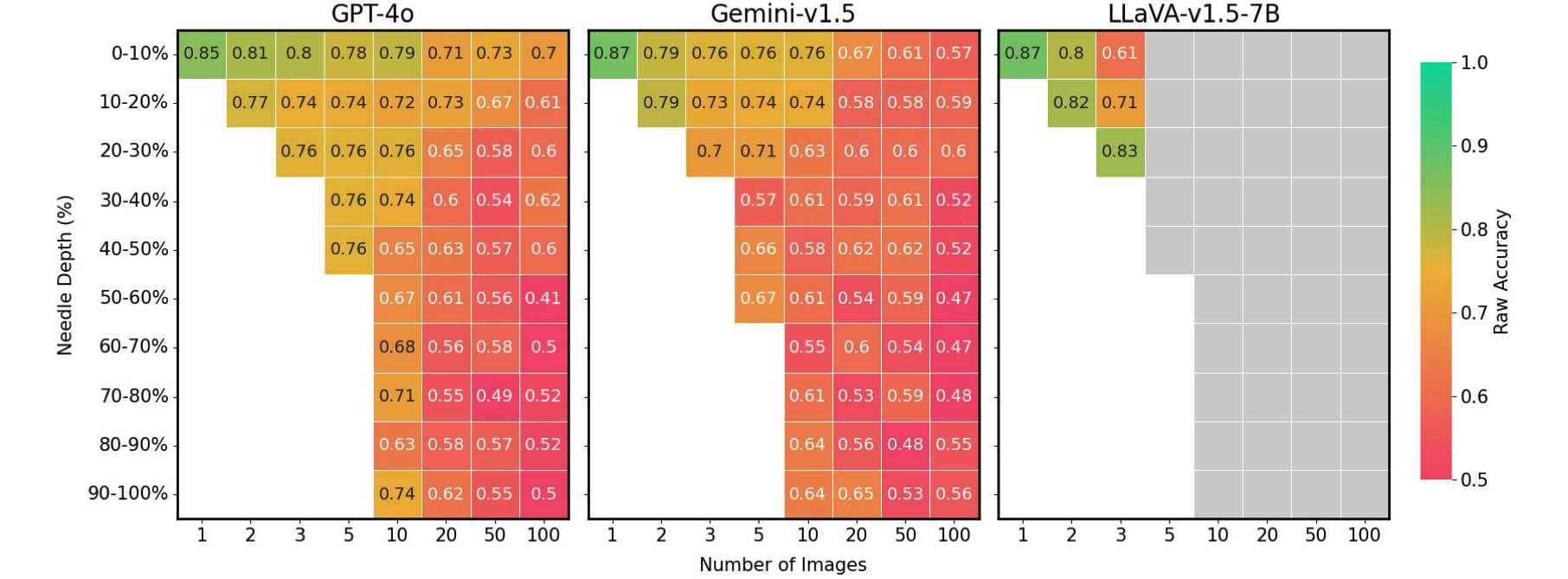

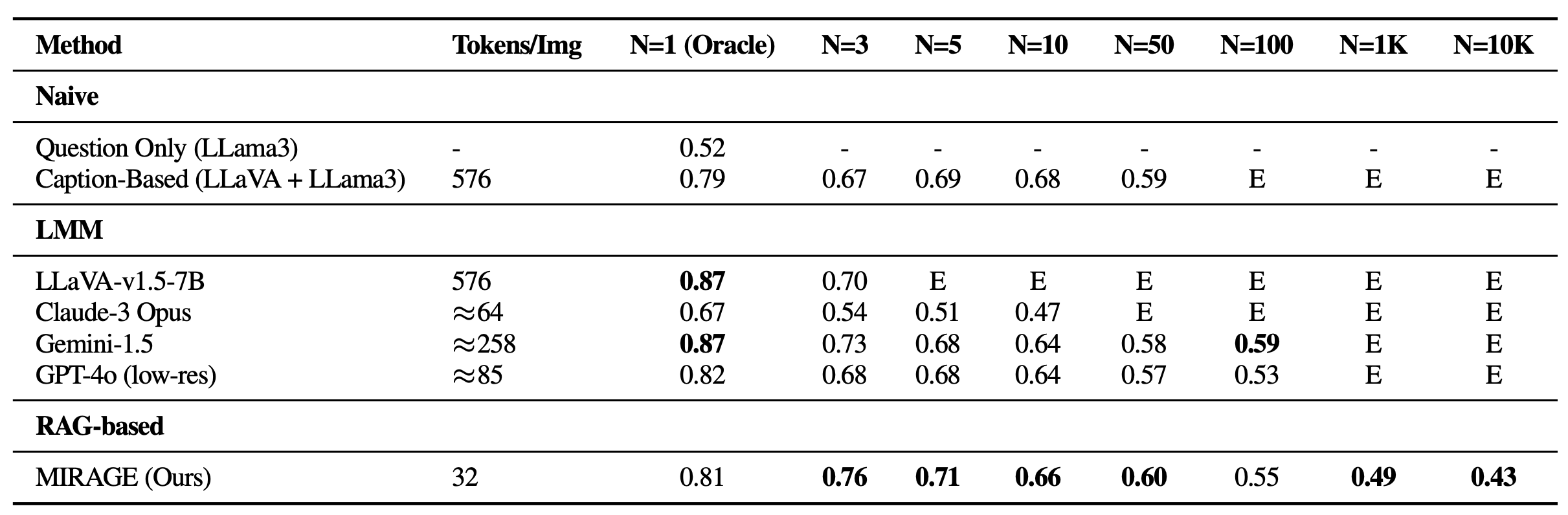

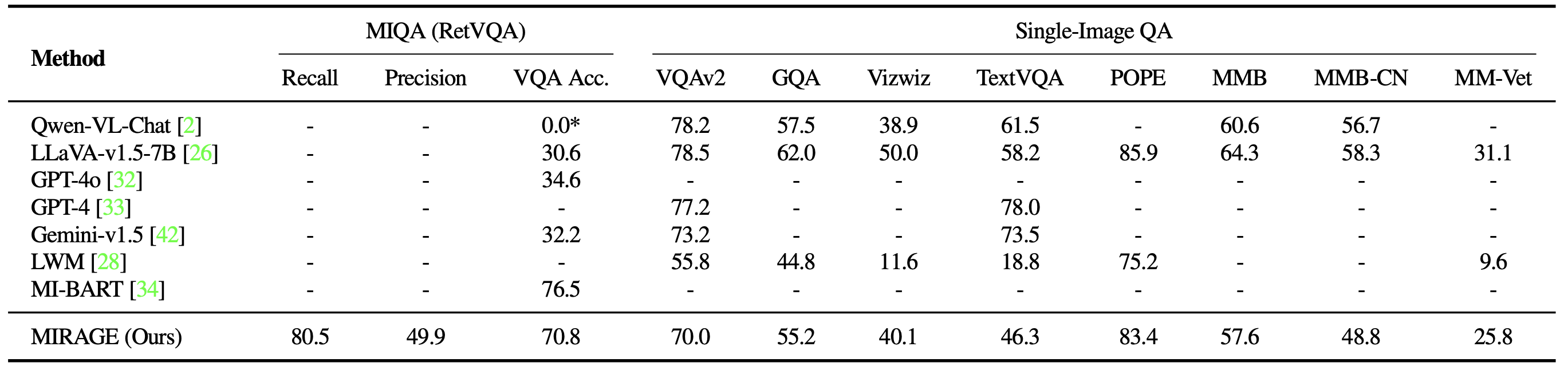

The Visual Haystacks (VHs) benchmark reveals significant challenges that current large multimodal models (LMMs) face when processing extensive visual input. In our experiments across both single- and multi-needle settings, we evaluated several open-source and proprietary methods, including LLaVA-v1.5, GPT-4o, Claude-3 Opus, and Gemini-v1.5-pro. We also include a “captioning” baseline, which uses a two-stage approach: images are first captioned using LLaVA, and Llama3 then answers the question using only the textual content of the captions. Below are three key insights:
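The two-stage captioning baseline can be sketched as a small pipeline. Here `caption` and `llm` are hypothetical callables standing in for LLaVA and Llama3. No real model API is assumed, and the prompt template is illustrative only.

```python
from typing import Callable, Dict, List

CaptionFn = Callable[[str], str]  # image -> caption (stand-in for LLaVA)
LLMFn = Callable[[str], str]      # prompt -> answer (stand-in for Llama3)


def captioning_baseline(images: List[str], question: str,
                        caption: CaptionFn, llm: LLMFn) -> str:
    # Stage 1: caption every image independently; no cross-image context yet.
    captions = [caption(img) for img in images]
    # Stage 2: the LLM answers from the captions' text alone and never sees pixels.
    listing = "\n".join(f"Image {i}: {c}" for i, c in enumerate(captions, start=1))
    prompt = (f"Captions for a set of images:\n{listing}\n"
              f"Question: {question}\nAnswer yes or no.")
    return llm(prompt)


# Toy stand-ins so the sketch runs without any model.
toy_captions: Dict[str, str] = {"1.jpg": "a dog catching a frisbee",
                                "2.jpg": "a cat asleep on a sofa"}


def toy_llm(prompt: str) -> str:
    # Keyword rule over the caption section only (everything before "Question:").
    captions_part = prompt.split("Question:")[0]
    return "yes" if "frisbee" in captions_part else "no"


q = "For the image with a dog, is there a frisbee?"
print(captioning_baseline(["1.jpg", "2.jpg"], q, toy_captions.__getitem__, toy_llm))  # yes
```

Because the second stage sees only text, any aggregation across images is done entirely by the language model.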


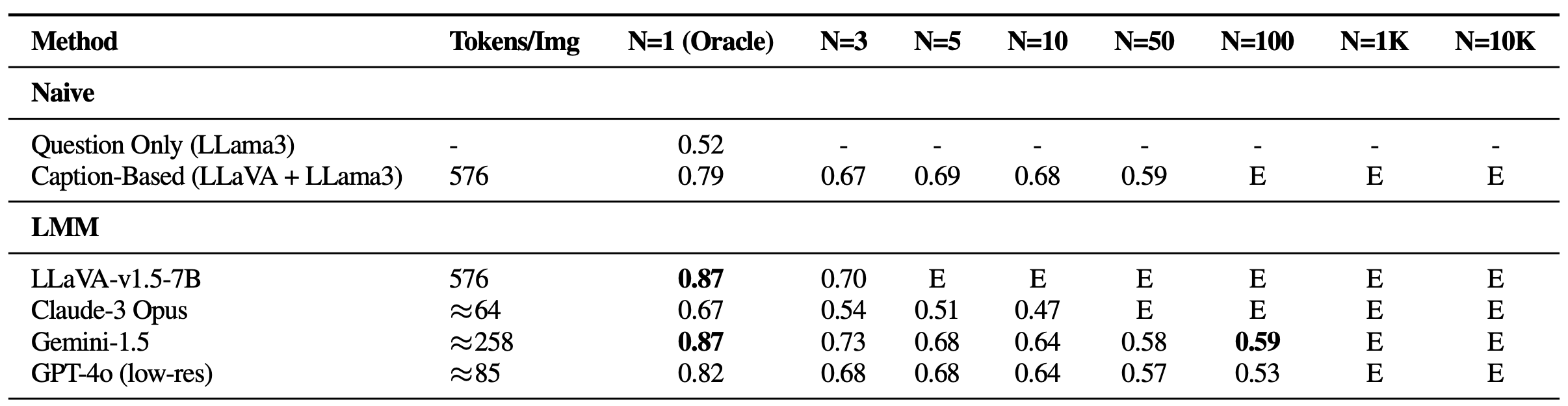

Struggles with visual distractions

In single-needle settings, performance dropped significantly as the number of images increased, even though oracle accuracy remained high. This pattern was not seen in earlier text-based, Gemini-style benchmarks. It suggests that existing models may struggle mainly with visual retrieval, particularly in the presence of challenging visual distractors. It is also important to highlight the constraints of open-source LMMs such as LLaVA, which can handle only up to three images because of a 2K context length limit. Proprietary models such as Gemini-v1.5 and GPT-4o, despite their claims of extended context capabilities, often fail to handle requests once the number of images exceeds 1K, due to payload size limits on API calls.

Performance on VHs for single-needle queries. All models experience a significant fall-off as the size of the haystack (N) increases, suggesting that none of them is robust against visual distractors. E: Exceeds context length.

Difficulty reasoning across multiple images

Interestingly, all LMM-based methods performed poorly on single-needle QA with 5+ images and in all multi-needle settings, compared with a basic baseline that chains a captioning model (LLaVA) with an LLM aggregator (Llama3). This discrepancy suggests that LLMs can integrate long-context captions effectively, while existing LMM-based solutions are inadequate for processing and integrating information across multiple images. Notably, performance deteriorates sharply in multi-image scenarios. Claude-3 Opus shows weak results even with only the oracle images, and Gemini-1.5/GPT-4o drop to 50% accuracy (just like a random guess) with larger sets of 50 images.

Results on VHs for multi-needle queries. All visually-aware models perform poorly, indicating that the models find it difficult to integrate visual information across images.

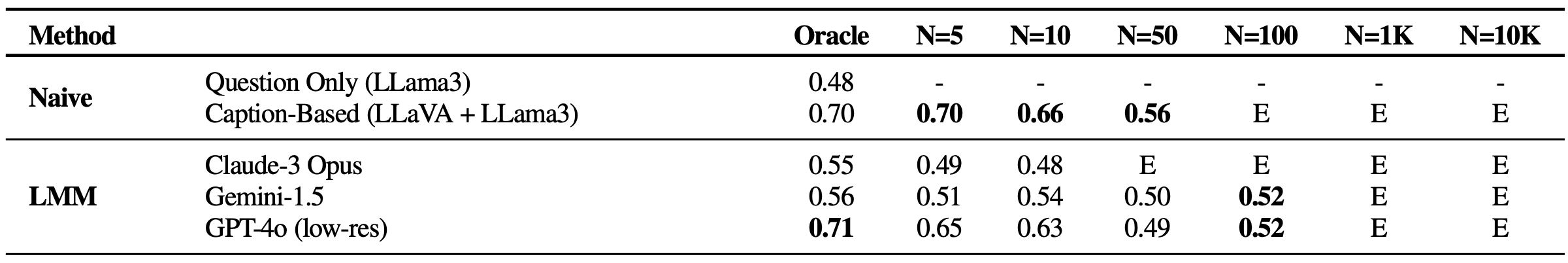

Positional bias in the visual domain

Finally, we found that the accuracy of LMMs is strongly affected by the position of the needle image in the input sequence. For example, LLaVA performs better when the needle image is placed immediately before the question, and its accuracy drops by up to 26.5% otherwise. In contrast, the proprietary models generally perform better when the needle image is placed at the beginning, and their accuracy drops by up to 28.5% otherwise. This echoes the “lost in the middle” phenomenon observed in natural language processing (NLP), where models perform best when key information appears at the beginning or end of the context and worse when it sits in the middle. This issue was not apparent in earlier Gemini-style NIAH evaluations, which required only text retrieval and reasoning, underscoring the unique challenges posed by our VHs benchmark.

Needle position vs. performance on VHs for different image settings. When the needle is not ideally positioned, the performance of existing LMMs decreases by up to 41%. Gray boxes: Exceeds the context length.
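One way to quantify this positional sensitivity is to bucket evaluation results by needle position and compare per-position accuracy. This hypothetical sketch reports the absolute gap between the best and worst positions, which may differ from the exact metric behind the reported figures. The toy records are made up for illustration and are not VHs results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_position(records: Iterable[Tuple[int, bool]]) -> Dict[int, float]:
    """records: (needle_position, answered_correctly) pairs from an evaluation run."""
    total: Dict[int, int] = defaultdict(int)
    correct: Dict[int, int] = defaultdict(int)
    for position, ok in records:
        total[position] += 1
        correct[position] += int(ok)
    return {p: correct[p] / total[p] for p in sorted(total)}


def positional_gap(acc: Dict[int, float]) -> Tuple[int, int, float]:
    """Best position, worst position, and the absolute accuracy gap between them."""
    best = max(acc, key=acc.get)
    worst = min(acc, key=acc.get)
    return best, worst, acc[best] - acc[worst]


toy = [(0, True), (0, True), (5, True), (5, False), (9, True), (9, True)]
acc = accuracy_by_position(toy)
print(acc)                  # {0: 1.0, 5: 0.5, 9: 1.0}
print(positional_gap(acc))  # (0, 5, 0.5)
```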

MIRAGE: A RAG-Based Solution for Better Performance on VHs

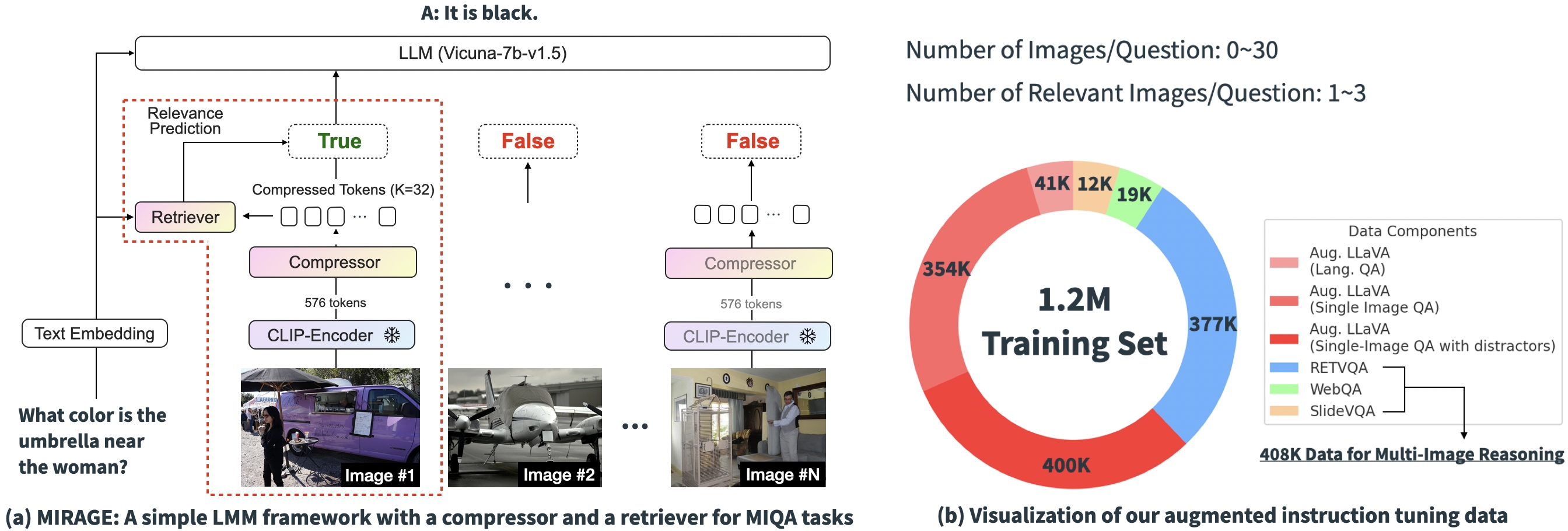

Based on the experimental results above, the core challenges for current MIQA solutions clearly lie in two abilities. The first is (1) accurately retrieving relevant images from a vast pool of potentially unrelated images without positional bias. The second is (2) integrating the relevant visual information from these images to answer the question correctly. To address these issues, we introduce an open-source, simple single-stage training paradigm, “MIRAGE” (Multi-Image Retrieval Augmented Generation), which extends the LLaVA model to handle MIQA tasks. The image below shows our model architecture.

Our proposed model consists of several components, each designed to address a key issue in the MIQA task:

- Compress existing encodings: The MIRAGE paradigm uses a query-aware compression model to reduce the visual encoder's tokens to a much smaller subset (10x smaller), allowing more images to fit in the same context length.

- Employ a retriever to filter out irrelevant images: MIRAGE uses a retriever, trained jointly with the LLM during fine-tuning, to predict whether an image will be relevant and to dynamically drop irrelevant images.

- Multi-image training data: MIRAGE augments existing single-image instruction fine-tuning data with multi-image reasoning data and synthetic multi-image reasoning data.
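The first two components can be illustrated with a toy, dependency-free sketch. Each image's N encoder tokens are pooled into K tokens by cross-attention from K query vectors. A relevance score computed from the pooled tokens then decides whether the image is kept. Several details are assumptions made for illustration and are not MIRAGE's actual layers:

- the queries are made "query-aware" by adding a question embedding to learned query vectors;
- the retriever head is a sigmoid of a dot product;
- the relevance threshold is 0.5.

```python
import math
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def compress(tokens: List[Vec], queries: List[Vec]) -> List[Vec]:
    """Cross-attention pooling: K query vectors attend over N image tokens -> K tokens."""
    d = len(tokens[0])
    out = []
    for q in queries:
        w = softmax([dot(q, t) / math.sqrt(d) for t in tokens])
        out.append([sum(wi * t[j] for wi, t in zip(w, tokens)) for j in range(d)])
    return out


def relevance(compressed: List[Vec], question: Vec) -> float:
    """Hypothetical retriever head: sigmoid of <mean compressed token, question>."""
    pooled = [sum(col) / len(compressed) for col in zip(*compressed)]
    return 1.0 / (1.0 + math.exp(-dot(pooled, question)))


def compress_and_filter(images: List[List[Vec]], question: Vec,
                        learned_queries: List[Vec],
                        threshold: float = 0.5) -> List[Tuple[int, List[Vec]]]:
    # Assumption: queries become question-aware by adding the question embedding.
    queries = [[q + e for q, e in zip(lq, question)] for lq in learned_queries]
    kept = []
    for idx, tokens in enumerate(images):
        compressed = compress(tokens, queries)
        if relevance(compressed, question) >= threshold:
            kept.append((idx, compressed))  # only kept images would reach the LLM
    return kept


question = [1.0, 0.0]
images = [[[5.0, 0.0], [4.0, 0.0], [3.0, 1.0]],      # aligned with the question
          [[-5.0, 0.0], [-4.0, 0.0], [-3.0, 1.0]]]   # points away from it
learned_queries = [[0.0, 1.0]]                        # K = 1 output token per image
kept = compress_and_filter(images, question, learned_queries)
print([idx for idx, _ in kept], len(kept[0][1]))      # [0] 1
```

The design point the sketch captures is ordering. Compression shrinks every image to a fixed K tokens, and the relevance filter then decides which compressed images enter the LLM's context at all.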

Results

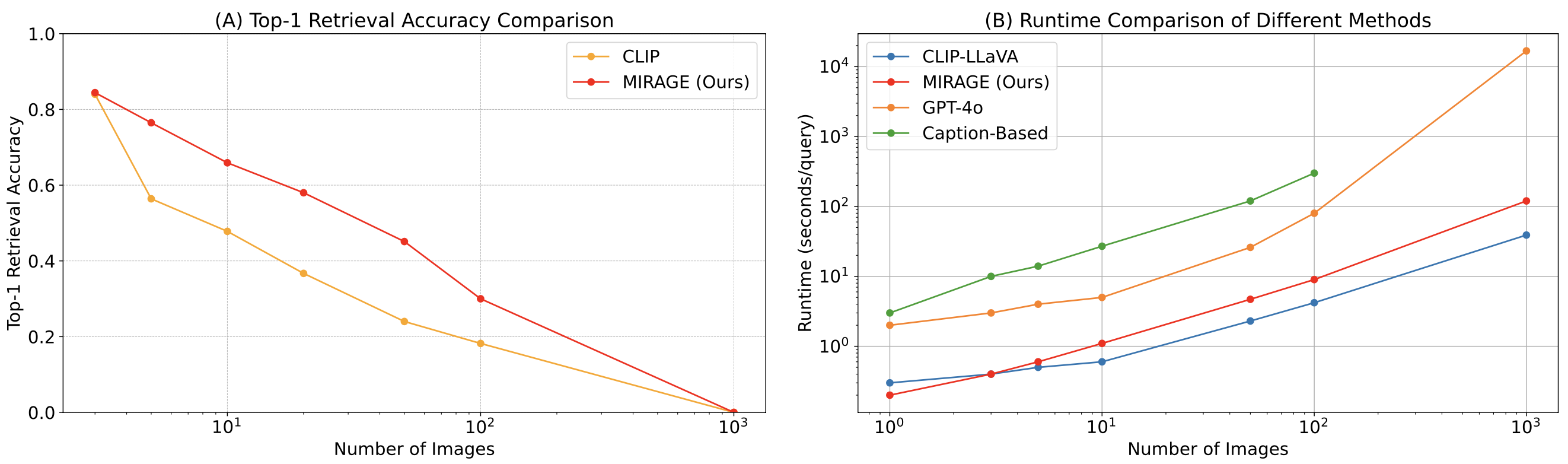

We revisit the VHs benchmark with MIRAGE. Beyond being able to handle 1K or 10K images, MIRAGE achieves state-of-the-art performance on most single-needle tasks, despite its weaker single-image QA backbone and only 32 tokens per image!

We also benchmark MIRAGE and other LMM-based models on a variety of VQA tasks. On multi-image tasks, MIRAGE demonstrates strong recall and accuracy, outperforming GPT-4, Gemini-v1.5, and the Large World Model (LWM). It also shows competitive single-image QA performance.

Finally, we compare MIRAGE's co-trained retriever with CLIP. Our retriever significantly outperforms CLIP without sacrificing efficiency. This suggests that CLIP models can be good retrievers for open-vocabulary image retrieval, but they may not perform well on question-like text!
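To compare retrievers such as CLIP and a co-trained retriever, one might score every image in a haystack against the question and count how many needles land in the top k. This sketch computes recall@k from per-image scores. The choice of metric and the toy scores are assumptions for illustration and do not reproduce the comparison reported here.

```python
from typing import Sequence, Set


def recall_at_k(scores: Sequence[float], relevant: Set[int], k: int) -> float:
    """Fraction of the relevant (needle) images ranked within the top k by score."""
    if not relevant:
        raise ValueError("need at least one relevant image")
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return len(set(ranked[:k]) & relevant) / len(relevant)


# Toy scores from two hypothetical retrievers over the same four images;
# images 1 and 2 are the needles.
needles = {1, 2}
retriever_a = [0.1, 0.9, 0.3, 0.8]
retriever_b = [0.2, 0.7, 0.6, 0.1]
print(recall_at_k(retriever_a, needles, k=2))  # 0.5
print(recall_at_k(retriever_b, needles, k=2))  # 1.0
```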

In this work, we developed the Visual Haystacks (VHs) benchmark and identified three prevalent deficiencies in existing large multimodal models (LMMs):

- Struggles with visual distractions: In single-needle tasks, the performance of LMMs decreases rapidly as the number of images increases, indicating a significant challenge in filtering out irrelevant visual information.

- Difficulty reasoning across multiple images: In multi-needle settings, simple approaches such as captioning followed by language-based QA outperform all existing LMMs, highlighting LMMs' insufficient ability to process information across multiple images.

- Positional bias in the visual domain: Both proprietary and open-source models are sensitive to the position of the needle information within the image sequence, exhibiting a “lost in the middle” phenomenon in the visual domain.

In response, we propose MIRAGE, a pioneering visual retriever-augmented generator (visual-RAG) framework. MIRAGE addresses these challenges with an innovative visual token compressor, a co-trained retriever, and multi-image instruction tuning data.

After reading this blog post, we encourage all future LMM projects to benchmark their models with the Visual Haystacks framework to identify and address potential deficiencies before deployment. We also urge the community to explore multi-image question answering as a way to push the frontiers of true artificial general intelligence (AGI).

Last but not least, please check out our project page and arXiv paper, and click the star button in our GitHub repo!

@article{wu2024visual,
  title={Visual Haystacks: Answering Harder Questions About Sets of Images},
  author={Wu, Tsung-Han and Biamby, Giscard and Quenum, Jerome and Gupta, Ritwik and Gonzalez, Joseph E and Darrell, Trevor and Chan, David M},
  journal={arXiv preprint arXiv:2407.13766},
  year={2024}
}