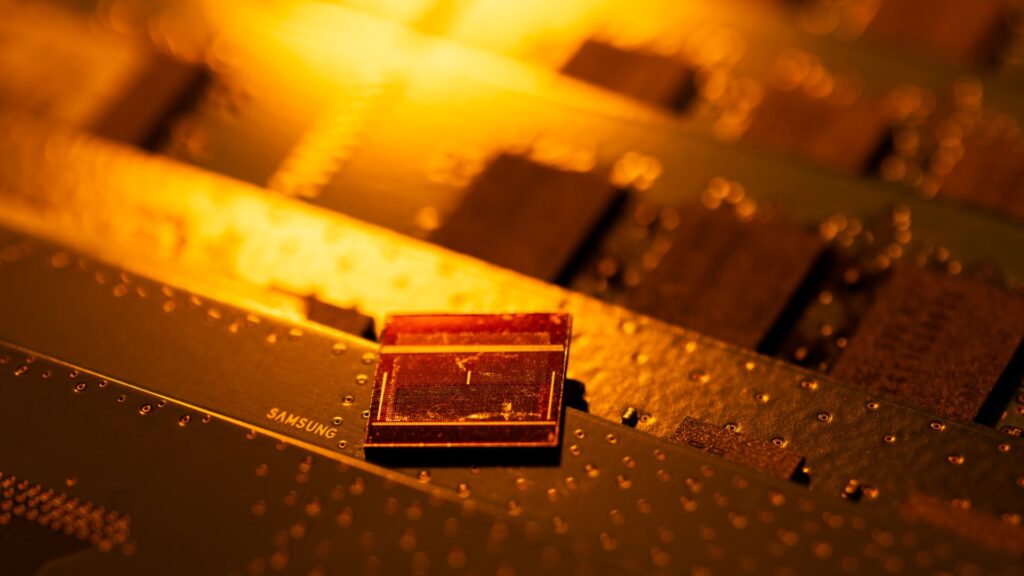

A Samsung Electronics Co. 12-layer HBM3E (top) and other DDR modules, arranged for a photograph. Samsung's profits rose sharply in the first quarter of 2024, reflecting a turnaround in the company's core semiconductor business and strong sales of Galaxy S24 smartphones. Photographer: SeongJoon Cho/Bloomberg via Getty Images


High-performance memory chips are likely to remain in tight supply this year, as explosive AI demand drives a shortage of these chips, according to analysts.

SK Hynix and Micron, two of the world's largest memory chip suppliers, have sold out of high-bandwidth memory (HBM) chips for 2024, and their supply for 2025 is also nearly sold out, according to the firms.

“We expect general memory supply to remain tight through 2024,” Kazunori Ito, director of equity research at Morningstar, said in a report last week.

Demand for AI chipsets has boosted the high-end memory chip market, benefiting firms such as Samsung Electronics and SK Hynix, two of the world's top memory chip makers. While SK Hynix already supplies the chips to Nvidia, the company is also reportedly considering Samsung as a potential supplier.

High-performance memory chips play an important role in training large language models (LLMs) such as those behind OpenAI's ChatGPT, whose popularity sent AI adoption soaring. LLMs need these chips to remember details of past interactions with users, along with their preferences, in order to answer questions in a human-like way.

“Manufacturing these chips is more complex and ramping up production is difficult. This will likely create shortages for the rest of 2024 and most of 2025,” said William Bailey, director of Nasdaq IR Intelligence.

Market intelligence firm TrendForce said in March that HBM's production cycle is 1.5 to 2 months longer than that of DDR5 memory chips, which are typically found in personal computers and servers.

To meet growing demand, SK Hynix plans to expand production capacity by investing in an advanced packaging facility in Indiana, U.S., as well as in the M15X fab in Cheongju and the Yongin semiconductor cluster in South Korea.

Samsung said during its first-quarter earnings call in April that its HBM bit supply in 2024 "has more than tripled from last year." Bit supply measures output by the number of bits of data that the memory chips shipped can store.

"And we have already completed discussions with our customers on that committed supply. In 2025, we will continue to expand supply by at least two times or more year on year, and we are already in smooth talks with our customers on that supply," Samsung said.

Micron did not respond to CNBC's request for comment.

Intense competition

Tech giants Microsoft, Amazon and Google are spending billions to train their LLMs to stay competitive, fueling demand for AI chips.

"Major buyers of AI chips, firms like Meta and Microsoft, have signaled that they plan to keep pouring resources into building AI infrastructure. That means they will be buying large volumes of AI chips, including HBM, at least through 2024," said Chris Miller, author of "Chip War," a book on the semiconductor industry.

Chipmakers are racing to produce the most advanced memory chips on the market to capitalize on the AI boom.

SK Hynix said at a press conference earlier this month that it will begin mass production of its latest generation of HBM chips, the 12-layer HBM3E, in the third quarter, while Samsung Electronics plans to do so within the second quarter, having been the first in the industry to ship samples of the latest chip.

"Samsung is currently leading the 12-layer HBM3E sampling process. If it can qualify ahead of its peers, I assume it can capture a majority share in late 2024 and 2025," said SK Kim, executive director and analyst at Daiwa Securities.