“When we started doing this, I thought it would be so bad that it wouldn’t fool anyone, but I was blown away,” Stephenson, who co-founded the site in 2021, said in an interview. “And we’re unsophisticated. If we can do that, anybody with a real budget can do a good enough job that it’ll fool you, it’ll fool me, and that is scary.”

With a tight 2024 presidential election approaching, experts and officials are increasingly sounding the alarm about the potentially destructive power of AI deepfakes, which they fear could further erode the nation’s sense of truth and destabilize the electorate.

There are signs that AI (and the fear surrounding it) is already having an impact on the race. Late last year, former President Donald Trump falsely accused the producers of an ad that featured his well-documented public gaffes of trafficking in AI-generated content. Meanwhile, genuinely fake images of Trump and other political figures, designed both to flatter and to wound, have gone viral again and again, sowing chaos at a crucial juncture in the election cycle.

Now some officials are rushing to respond. In recent months, the New Hampshire Department of Justice announced it was investigating a spoofed robocall featuring an AI-generated imitation of President Biden’s voice. Washington state warned its voters to be on the lookout for deepfakes. And lawmakers from Oregon to Florida have passed bills restricting the use of such technology in campaign communications.

And in Arizona, a key swing state in the 2024 contest, top election officials used deepfakes themselves in a training exercise to prepare staff for the coming onslaught of falsehoods. The exercise inspired Stephenson and his colleagues at the Arizona Agenda, whose daily newsletter seeks to explain complex political stories to an audience of about 10,000 subscribers.

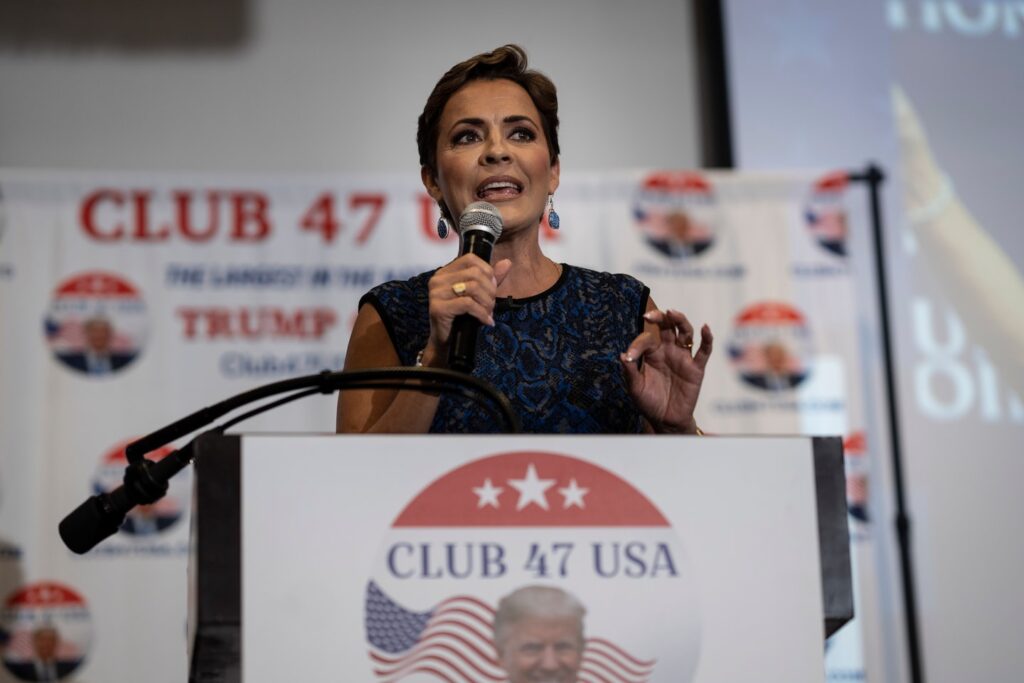

They brainstormed ideas for about a week and enlisted the help of a tech-savvy friend. On Friday, Stephenson published the piece, which included three deepfake clips of Lake.

It begins with a ruse, telling readers that Lake, a hard-right candidate whom the Arizona Agenda has pilloried in the past, had decided to record a testimonial about how much she enjoys the outlet. But the video quickly pivots to the punchline that gives the game away.

“Subscribe to the Arizona Agenda for hard-hitting real news,” the fake Lake said to the camera, before adding: “And a preview of the terrifying AI coming your way in the next election, like this video, which is an AI deepfake the Arizona Agenda made to show you just how good this technology is getting.”

By Saturday, the videos had garnered tens of thousands of views, along with a very unhappy response from the real Lake, whose campaign attorneys sent a cease-and-desist letter to the Arizona Agenda. The letter demanded “the immediate removal of the aforementioned deepfake videos from all platforms where they have been shared or disseminated.” If the outlet failed to comply, the letter said, Lake’s campaign would “pursue all available legal remedies.”

A campaign spokesman declined to comment when contacted Saturday.

Stephenson said he was consulting with lawyers on how to respond, but as of Saturday afternoon, he did not plan to remove the videos. Deepfakes are a good learning tool, he said, and he wants to arm readers with the tools to detect such forgeries before they are bombarded with them as election season heats up.

“It’s up to all of us to combat this new wave of technological disinformation in this election cycle,” Stephenson wrote in the article accompanying the clips. “Your best defense is to know what’s out there — and to use your critical thinking.”

Hany Farid, a professor at the University of California at Berkeley who studies digital propaganda and disinformation, said the Arizona Agenda videos were useful public service announcements, carefully crafted to limit unintended consequences. Even so, he said, outlets should be careful how they frame their deepfake reporting.

“I’m in favor of PSAs, but there’s a balance,” Farid said. “You don’t want your readers and viewers to look at anything that doesn’t fit their worldview and dismiss it as fake.”

Farid said deepfakes present two distinct “threat vectors.” First, bad actors can make fake videos of people saying things they never said. And second, people can more credibly dismiss any genuinely embarrassing or incriminating footage as fake.

This dynamic has been especially evident during Russia’s invasion of Ukraine, a conflict rife with disinformation, Farid said. Early in the war, Ukraine promoted a deepfake showing Paris under attack, urging world leaders to react to the Kremlin’s aggression with as much urgency as they would if the Eiffel Tower had been targeted.

Farid said it was a powerful message, but it opened the door to baseless Russian claims that other videos from Ukraine, which showed evidence of Kremlin war crimes, were similarly fabricated.

“I worry that everything is becoming suspect,” he said.

Stephenson, whose backyard is a political battleground that has recently become a hotbed of conspiracy theories and false claims, has similar fears.

“For years we’ve been fighting over what’s real,” he said. “Objective facts can be written off as fake news, and now objective videos will be written off as deepfakes, and deepfakes will be treated as fact.”

Researchers like Farid are working on software that will make it easier for journalists and others to detect deepfakes. Farid said the tools he currently uses easily classified the Arizona Agenda videos as bogus, a hopeful sign ahead of the flood of fakes to come. However, deepfake technology is improving rapidly, and detecting future fakes could prove much more difficult.

And even Stephenson’s admittedly subpar deepfakes managed to fool some people: After Friday’s newsletter went out under the headline “Kari Lake Does Us a Solid,” a handful of paying readers canceled their subscriptions. Most likely, Stephenson suspects, they thought Lake’s endorsement was genuine.

Megan Vazquez contributed to this report.