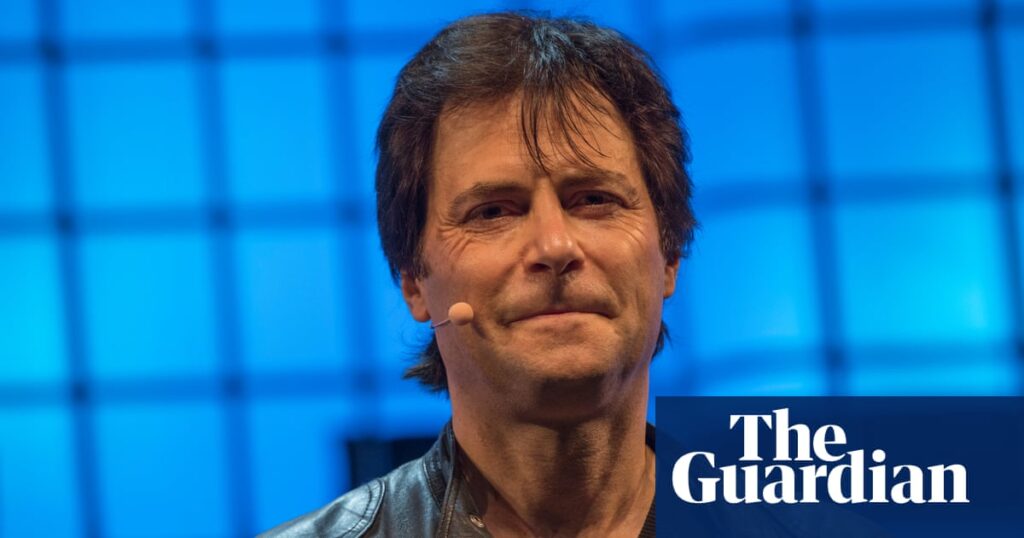

A leading scientist and AI campaigner has warned that Big Tech has succeeded in distracting the world from the threat to humanity that artificial intelligence still poses.

Speaking to the Guardian at the AI Summit in Seoul, South Korea, Max Tegmark said the shift in focus from the extinction of life to a broader conception of the safety of artificial intelligence risked an unacceptable delay in imposing strict regulation on the creators of the most powerful programs.

“In 1942, Enrico Fermi built the first reactor with a self-sustaining nuclear chain reaction under a Chicago football field,” Tegmark, who trained as a physicist, said. “When the top physicists of the day found out about it, they were really shocked, because they realized that the single biggest hurdle left in making the atomic bomb had just been cleared. They felt it was only a few years away – and in fact, with the Trinity test in 1945, it was three years.

“AI models that can pass the Turing test [where someone cannot tell in conversation that they are not speaking to another human] are the same warning for the kind of AI that you can lose control over. That's why you get people like Geoffrey Hinton and Yoshua Bengio – and even a lot of tech CEOs, at least privately – speaking out now.”

Tegmark's non-profit Future of Life Institute led calls last year for a six-month “pause” in advanced AI research on the back of these fears. The launch of OpenAI's GPT-4 model in March that year was the canary in the coal mine, he said, and proved that the risk was unacceptably close.

Despite thousands of signatures from experts including Hinton and Bengio, two of the three “godfathers” of AI who pioneered the approach to machine learning that dominates the field today, no pause was agreed.

Instead, the AI summits, of which Seoul is the second after the one at Bletchley Park in the UK last November, have led the fledgling field of AI regulation. “We wanted that letter to legitimize the conversation, and we are quite happy with how that worked out. Once people saw that people like Bengio were worried, they thought, 'It's OK for me to worry about it.' Even the guy at my gas station told me, after that, that he's worried about AI replacing us.

“But now, we need to go from just talking to walking the walk.”

Since the Bletchley Park summit, however, the focus of international AI regulation has shifted away from existential risk.

In Seoul, only one of the three “high-level” groups directly addressed safety, and it looked at the “full spectrum” of risks, “from privacy violations to job market disruptions and potentially catastrophic outcomes”. Tegmark argues that this playing-down of the most severe risks is not healthy – and is not accidental.

“This is exactly what I predicted would happen from industry lobbying,” he said. “In 1955, the first journal articles came out saying that smoking causes lung cancer, and you'd think there would be some regulation pretty quickly. But no, it took until 1980, because there was a lot of pressure from the industry to divert attention. I think that's what's happening now.


“Of course AI also causes current harms: there is bias, it harms disadvantaged groups … but as [the UK science and technology secretary] Michelle Donelan herself said, it's not like we can't deal with both. It's like saying, 'Let's not pay any attention to climate change because there's going to be a hurricane this year, so we should just focus on the hurricane.'”

Tegmark's critics have made the same argument about his own claims: that the industry wants everyone to talk about hypothetical risks in the future to distract from concrete harms in the present, an accusation he dismisses. “Even if you think about it on its own merits, it's pretty galaxy-brained: it would be quite 4D chess for someone like [OpenAI boss] Sam Altman, in order to avoid regulation, to tell everybody that it could be lights out for everyone and then try to persuade people like us to sound the alarm.”

Instead, he argues, the tacit support from some tech leaders comes about because “I think they all feel that they're stuck in an impossible situation where, even if they want to stop, they can't. If the CEO of a tobacco company wakes up one morning and realizes that what they're doing isn't right, they're just going to be replaced as CEO. So the only way to get safety first is if the government puts in place safety standards for everyone.”