Complete list of technologies for best performance/wattage

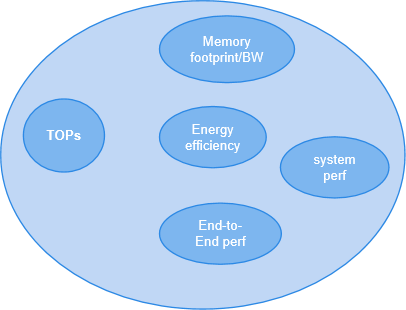

The most commonly used metric to describe AI performance is TOPS (Tera Operations Per Second), which indicates compute capacity but oversimplifies the complexity of AI systems. Many factors beyond TOPS must be considered when designing a system for a real AI use case, including memory/cache size and bandwidth, data types, and energy efficiency.

Moreover, each AI use case has its own characteristics and requires comprehensive testing of the entire use case pipeline. Such testing examines the pipeline's effects on system components and explores optimization techniques to predict the best achievable pipeline performance.

In this post, we choose an AI use case, an end-to-end real-time infinite zoom feature built on a Stable Diffusion v2 in-painting model, and study how to build a corresponding AI system with optimal performance per watt. It can serve as a proposal that combines well-established technologies with new research ideas that may lead to potential architectural features.

Background on end-to-end video zoom

- As shown in the diagram below, to zoom out video frames (the fish image), we resize the frames and apply a border mask before feeding them into the Stable Diffusion in-painting pipeline. Given an input text prompt, the pipeline generates frames with new content that fills the masked border area. This process is applied successively to each frame to achieve a continuous zoom-out effect. To save compute power, we can sample video frames sparsely instead of in-painting every frame (e.g., rendering 1 frame out of every 5), as long as this still provides a satisfactory user experience.

- The Stable Diffusion v2 in-painting pipeline builds on the pre-trained Stable Diffusion 2 model, a text-to-image latent diffusion model created by Stability AI and LAION. The blue boxes in the diagram below show each function block in the pipeline.

- The Stable Diffusion 2 model generates 768*768 resolution images. It is trained to remove random noise iteratively (50 steps) to obtain a new image. The denoising process is implemented by the U-Net and a scheduler; it is very slow and requires a lot of compute and memory.
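The sparse frame sampling mentioned above can be sketched in a few lines. This is a minimal illustration, not the pipeline's actual scheduling logic: the function name `frames_to_inpaint`, the 30-frame clip, and the fixed stride are all assumptions chosen to match the "1 frame out of every 5" example.

```python
def frames_to_inpaint(num_frames, stride=5):
    """Return the indices of the frames that are sent through in-painting.

    Hypothetical helper: every `stride`-th frame is in-painted and the
    frames in between are skipped (or filled in by a cheaper method).
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return list(range(0, num_frames, stride))


# Assumed 30-frame clip with the stride of 5 used in the text.
selected = frames_to_inpaint(30, stride=5)
print(selected)            # [0, 5, 10, 15, 20, 25]
print(len(selected) / 30)  # 0.2, i.e. one fifth of the diffusion workload
```

Whether a given stride is acceptable depends on how visible the skipped frames are to the user, so in practice the stride would be tuned against the user experience rather than fixed up front.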

Below are the 4 models used in the pipeline:

- VAE (Image Encoder). Converts the image to a low-dimensional latent representation (64*64)

- CLIP (Text Encoder). Transformer architecture (77*768), 85M parameters

- UNet (diffusion process). Iterative denoising driven by a scheduler algorithm, 865M parameters

- VAE (Image Decoder). Converts the latent representation back to an image (512*512).

Most Stable Diffusion operations are convolutions (98% for the autoencoder and text encoder models and 84% for the U-Net). The bulk of the remaining U-Net operations (16%) are dense matrix multiplications that come from the self-attention blocks. These models can be quite large (the size varies with the hyperparameters) and require a lot of memory. For mobile devices with limited memory, it is essential to explore model compression techniques that reduce model size, including quantization (2-4x model size reduction and 2-3x speedup from FP16 to INT4), pruning, sparsity, etc.
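To make the size reduction concrete, here is a back-of-the-envelope sketch of weight storage at different precisions. It assumes the 865M parameter count quoted for the U-Net above and ignores quantization metadata such as scales and zero points, so real quantized models will be somewhat larger. The helper `model_size_mb` is hypothetical.

```python
# Bits used to store one weight at each precision.
BITS_PER_WEIGHT = {"FP16": 16, "INT8": 8, "INT4": 4}


def model_size_mb(num_params, dtype):
    """Weight storage in megabytes (10**6 bytes), metadata not included."""
    return num_params * BITS_PER_WEIGHT[dtype] / 8 / 1e6


unet_params = 865_000_000  # U-Net parameter count quoted in the text

for dtype in BITS_PER_WEIGHT:
    print(dtype, model_size_mb(unet_params, dtype), "MB")

# FP16 -> INT4 stores each weight in a quarter of the bits.
reduction = model_size_mb(unet_params, "FP16") / model_size_mb(unet_params, "INT4")
print(reduction)  # 4.0
```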

Power efficiency optimization for AI features such as end-to-end video zoom

For AI features like video zoom, power efficiency is one of the top factors for successful deployment on edge/mobile devices. These battery-powered edge devices store their energy in a battery whose capacity is given in mWh (milliwatt-hours) or Wh. For example, a 1200 Wh battery can deliver 1200 W for one hour before it is discharged; if an application draws 2 W, the battery can power the device for 600 h. Power efficiency is measured as IPS/Watt, where IPS is inferences per second (for image-based applications, FPS/Watt or TOPS/Watt).
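The battery arithmetic above can be written out as a short sketch. The function names are hypothetical, and the 30 FPS throughput in the efficiency example is an assumed figure for illustration only; the 1200 Wh capacity and 2 W draw are the numbers from the text.

```python
def battery_life_hours(capacity_wh, draw_w):
    """Hours of runtime for a battery of `capacity_wh` at a constant draw."""
    return capacity_wh / draw_w


def efficiency_per_watt(throughput_per_s, draw_w):
    """Throughput per watt, e.g. inferences/s per W or frames/s per W."""
    return throughput_per_s / draw_w


print(battery_life_hours(1200, 2))  # 600.0 hours, as in the example above
print(efficiency_per_watt(30, 2))   # 15.0 FPS/Watt for an assumed 30 FPS at 2 W
```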

Minimizing power consumption is critical to achieving long battery life on mobile devices. Many factors contribute to high power consumption, including the large number of memory transactions caused by large model sizes and the heavy computation of matrix multiplication. Let's look at how to optimize the use case for efficient use of power.

1. Model optimization.

In addition to quantization, pruning, and sparsity, there is also weight sharing. A network has many weights, while only a small number of them are useful, so the number of weights can be reduced by allowing multiple connections to share the same weight, as shown below. The original 4*4 weight matrix is reduced to 4 shared weights and a 4*4 matrix of 2-bit indices, reducing the total from 512 bits to 160 bits (assuming 32-bit weights: 16*32 = 512 bits originally, versus 4*32 + 16*2 = 160 bits after sharing).
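A minimal sketch of weight sharing follows, assuming 32-bit weights and using a tiny 1-D k-means to pick the 4 shared values. The weight matrix is illustrative, and `kmeans_1d` is a hypothetical helper written for this example, not a library function; production toolchains use more careful clustering and usually fine-tune the shared weights afterwards.

```python
def kmeans_1d(values, k, iters=10):
    """Tiny 1-D k-means with linearly spaced initial centroids (k >= 2)."""
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    def nearest(v):
        # Index of the centroid closest to v.
        return min(range(k), key=lambda c: abs(v - centroids[c]))

    for _ in range(iters):
        assign = [nearest(v) for v in values]
        for c in range(k):
            members = [v for v, a in zip(values, assign) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = sum(members) / len(members)
    return centroids, [nearest(v) for v in values]


# Illustrative 4*4 weight matrix (assumed values, not from a real model).
weights = [
    [2.09, -0.98, 1.48, 0.09],
    [0.05, -0.14, -1.08, 2.12],
    [-0.91, 1.92, 0.00, -1.03],
    [1.87, 0.00, 1.53, 1.49],
]
flat = [w for row in weights for w in row]

k = 4                              # 4 shared weights -> 2-bit indices
codebook, indices = kmeans_1d(flat, k)
index_bits = (k - 1).bit_length()  # bits needed to address k entries
weight_bits = 32                   # assumed FP32 storage per weight

original_bits = len(flat) * weight_bits
shared_bits = k * weight_bits + len(flat) * index_bits
reconstructed = [codebook[i] for i in indices]  # weights seen at inference

print(original_bits, shared_bits)  # 512 160
```

The stored model is the codebook plus the index matrix; the full-precision matrix is only reconstructed (or looked up on the fly) at inference time.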

2. Memory optimization.

Memory is a critical component that consumes more power than matrix multiplication. For example, the power consumption of a DRAM operation can be orders of magnitude higher than a multiply operation. In mobile devices, it is often difficult to accommodate large models within the local device memory. This causes multiple memory transactions between local device memory and DRAM, resulting in high latency and increased energy consumption.

Improving access to off-chip memory is critical to increasing energy efficiency. The paper "Improving Off-Chip Memory Access for Deep Neural Network Accelerators" [4] introduced an adaptive scheduling algorithm designed to minimize DRAM accesses. This approach demonstrated substantial reductions in energy consumption and latency, between 34% and 93%.

A newer method, ROMANet [5], also proposes minimizing memory accesses to save power. The basic idea is to match the tile size of each CNN layer partition to the available DRAM/SRAM resources, maximize data reuse, and schedule tile accesses so as to minimize the number of DRAM accesses. Data mapping on DRAM focuses on mapping data tiles to different columns in the same row to maximize row buffer hits. For large data tiles, the same bank in different chips can be used for chip-level parallelism. Additionally, if the same row is filled across all chips, data is mapped to different banks in the same chip for bank-level parallelism. For SRAM, a similar concept of bank-level parallelism can be applied. The proposed optimization flow can save up to 12% of the energy for AlexNet, 36% for VGG-16, and 46% for MobileNet. A high-level flow chart of the proposed method and a schematic illustration of DRAM data mapping are shown below.
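The mapping priority described above (columns in the same row first, then chips, then banks, and only then a new row) can be sketched as a simple address decomposition. This is a simplified reading of the idea, not the implementation from [5]; the function `map_tile_offset` and the DRAM geometry (8 columns per row, 2 chips, 4 banks) are assumptions picked to keep the example small.

```python
from typing import NamedTuple


class DramAddress(NamedTuple):
    chip: int
    bank: int
    row: int
    column: int


def map_tile_offset(offset, cols_per_row=8, num_chips=2, num_banks=4):
    """Map a linear offset inside a data tile to a DRAM location.

    Priority order (an assumed simplification):
      1. consecutive columns of the same row -> row buffer hits
      2. same bank and row in the next chip  -> chip-level parallelism
      3. next bank in the same chip          -> bank-level parallelism
      4. next row                            -> only when everything else is full
    """
    column = offset % cols_per_row
    chip = (offset // cols_per_row) % num_chips
    bank = (offset // (cols_per_row * num_chips)) % num_banks
    row = offset // (cols_per_row * num_chips * num_banks)
    return DramAddress(chip, bank, row, column)


for offset in (0, 7, 8, 16, 64):
    print(offset, map_tile_offset(offset))
```

With this geometry, offsets 0 to 7 stay in one open row, offset 8 moves to the second chip, offset 16 moves to the next bank, and a new row is opened only at offset 64.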

3. Dynamic power scaling.

The system power can be calculated as P = C*F*V², where C is the switched capacitance, F is the operating frequency, and V is the operating voltage. Techniques like DVFS (Dynamic Voltage and Frequency Scaling) were developed to reduce runtime power by scaling the voltage and frequency according to the workload. In deep learning, layer-wise DVFS is not suitable because voltage scaling has a long latency. Frequency scaling, on the other hand, is fast enough to keep up with each layer. A layer-wise Dynamic Frequency Scaling (DFS) technique [6] has been proposed for NPUs, in which a power model predicts the power consumption in order to determine the highest allowable frequency. DFS is reported to improve latency by 33% and save energy by 14%.
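As a rough sketch of the layer-wise idea, the snippet below uses P = C*F*V² as the power model and picks, for each layer, the highest frequency whose predicted power stays within a budget. It is not the method from [6]: the per-layer effective capacitances, the fixed 0.8 V supply, the 1 W budget, and the frequency table are all made-up values for illustration.

```python
def predicted_power_w(c_eff_farads, freq_hz, voltage_v):
    """Dynamic power model P = C * F * V**2."""
    return c_eff_farads * freq_hz * voltage_v ** 2


def pick_frequency(c_eff, voltage, power_budget_w, freq_options_hz):
    """Highest frequency whose predicted power fits the budget.

    Falls back to the lowest available frequency if nothing fits.
    """
    allowed = [
        f for f in sorted(freq_options_hz)
        if predicted_power_w(c_eff, f, voltage) <= power_budget_w
    ]
    return allowed[-1] if allowed else min(freq_options_hz)


# Hypothetical per-layer effective capacitance (more switching = larger C).
layers = {"conv": 2.0e-9, "attention": 1.0e-9}
freqs = [400_000_000, 600_000_000, 800_000_000, 1_000_000_000]

schedule = {
    name: pick_frequency(c_eff, voltage=0.8, power_budget_w=1.0,
                         freq_options_hz=freqs)
    for name, c_eff in layers.items()
}
print(schedule)  # {'conv': 600000000, 'attention': 1000000000}
```

The voltage is held constant here because, as noted above, only frequency can be changed quickly enough to follow individual layers.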

4. Dedicated low-power AI HW accelerator architecture.

To accelerate deep learning applications, specialized AI accelerators have shown high power efficiency, achieving similar performance with lower power consumption. For example, Google's TPU is designed to speed up matrix multiplication by reusing input data multiple times for calculations, unlike CPUs that fetch data for each calculation. This approach conserves power and reduces data transfer latency.
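The benefit of data reuse can be illustrated with an idealized operand-fetch count for a matrix multiplication of shape (M*K) by (K*N). This is a simplified counting model under stated assumptions, not a description of the TPU's actual microarchitecture: the no-reuse case fetches both operands for every multiply-accumulate, while the reuse case assumes the whole weight matrix stays resident in the compute array and each input element is fetched once and shared across all N output columns. The matrix dimensions are arbitrary.

```python
def fetches_without_reuse(m, k, n):
    """Two operand fetches (input and weight) per multiply-accumulate."""
    return 2 * m * k * n


def fetches_with_reuse(m, k, n):
    """Idealized reuse: weights loaded once, each input element fetched once."""
    return k * n + m * k


m, k, n = 64, 128, 128  # assumed layer dimensions
naive = fetches_without_reuse(m, k, n)
reused = fetches_with_reuse(m, k, n)
print(naive, reused)  # 2097152 24576
print(naive / reused)
```

Since each memory fetch costs far more energy than a multiply, cutting the fetch count by a large factor is where most of the power saving in this simplified picture comes from.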