The news anchor has a deeply unsettling air as he delivers a partisan and insulting message in Mandarin: Taiwan's outgoing president, Tsai Ing-wen, is as effective as limp spinach, her time in office beset by economic weakness, social problems and protests.

“Water spinach looks at water spinach. Turns out water spinach isn't just a name,” says the presenter, in an extended metaphor about Tsai being “Hollow Tsai”, a pun related to the Mandarin word for water spinach.

This is not a traditional broadcast journalist, even if the lack of impartiality is no longer a shock. The anchor is generated by an artificial intelligence program, and the segment is trying, albeit clumsily, to influence Taiwan's presidential election.

The video's source and creator are unknown, but the clip is designed to make voters suspicious of politicians who want Taiwan closer to China, which claims the self-governing island as part of its territory. It is the latest example of a subgenre of AI-generated disinformation: the deepfake news anchor or TV presenter.

Such avatars are proliferating on social networks, spreading state-backed propaganda. Experts say this type of video will keep spreading as the technology becomes more widely accessible.

“It doesn't have to be perfect,” said Tyler Williams, director of investigations at the disinformation research company Graphika. “If a user is just scrolling through X or TikTok, they're not picking up on little nuances on a small screen.”

Beijing has already experimented with AI-powered news anchors. In 2018, state news agency Xinhua unveiled Qiu Hao, a digital news presenter, promising to bring viewers news “24 hours a day, 365 days a year”. Although the Chinese public is generally enthusiastic about the use of digital avatars in the media, Qiu Hao failed to catch on more widely.

China is at the forefront of the disinformation element of this trend. Last year, pro-China bot accounts on Facebook and X distributed AI-generated deepfake videos of news anchors representing a fictitious broadcaster called Wolf News. In one clip, the US government was accused of failing to tackle gun violence, while another highlighted China's role at an international summit.

In a report released in April, Microsoft said Chinese state-backed cyber groups had targeted Taiwan's elections with AI-generated disinformation content, including fake news anchors and TV-style presenters. In one clip cited by Microsoft, the AI-generated anchor made unsubstantiated claims about the private life of the ultimately successful pro-independence candidate, Lai Ching-te, alleging that he had fathered children out of wedlock.

Microsoft said the news anchors were created using the CapCut video editing tool, developed by the Chinese company ByteDance, which owns TikTok.

Clint Watts, general manager of Microsoft's threat analysis center, points to China's official use of synthetic news anchors in its domestic media market, which has also allowed the country to refine the format. The format has now become a tool for spreading disinformation, although so far it has had little discernible effect.

“The Chinese are much more focused on trying to put AI into their systems – propaganda, disinformation – and they moved there very quickly. They're trying everything. It's not particularly effective,” Watts said.

Third-party vendors such as CapCut offer the news anchor format as a template, so it is easy to customize and produce in large quantities.

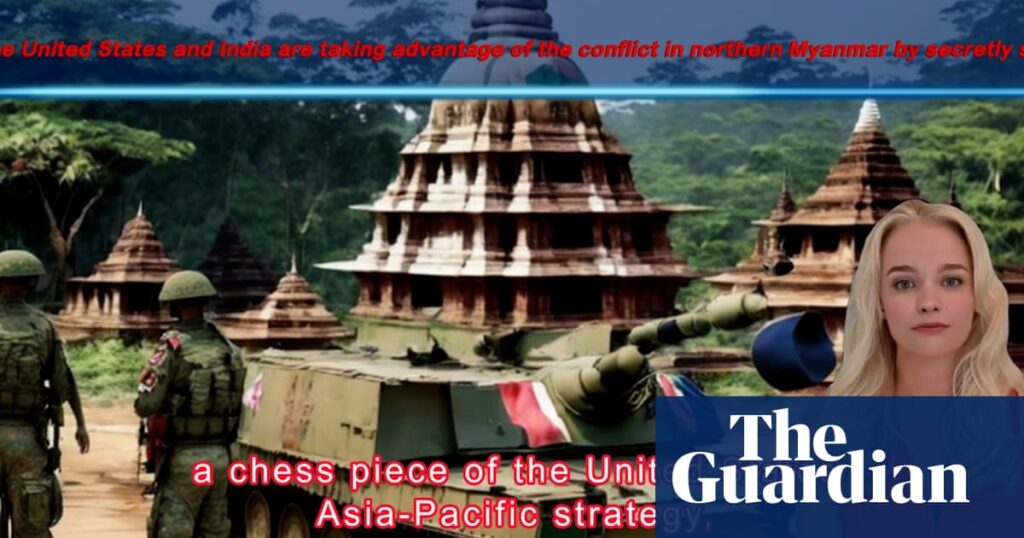

There are also clips featuring avatars that act like a cross between a professional TV presenter and an influencer speaking directly to the camera. One video produced by a Chinese state-backed group called Storm-1376, also known as Spamouflage, features an AI-generated blond female presenter accusing the US and India of secretly selling weapons to the Myanmar military.

The overall effect is far from convincing. Despite the realistic-looking presenter, the video is undermined by a stilted voice that is clearly computer-generated. Other examples discovered by NewsGuard, an organization that monitors misinformation and disinformation, show a TikTok account linked to Spamouflage using AI-generated avatars to comment on US news such as food costs and gas prices. One video features an avatar with a computer-generated voice discussing Walmart's prices under the slogan: “Is Walmart lying to you about the weight of their meat?”

The avatar videos are part of a pro-China network that was “expanding” ahead of the US presidential election, NewsGuard said. It identified 167 accounts, created since last year, that were linked to Spamouflage.

Other nations have experimented with deepfake anchors. Iranian state-backed hackers recently disrupted TV streaming services in the United Arab Emirates to allow a deepfake newsreader to broadcast a report on the war in Gaza. The Washington Post reported on Friday that the Islamic State terror group is using AI-generated news anchors — in helmets and fatigues — to broadcast propaganda.

And one European state is openly trying out AI-powered presenters: Ukraine's foreign ministry has launched an AI spokeswoman, Victoria Shi, using the likeness of Rosalie Nombre, a Ukrainian singer and media personality who gave permission for her image to be used. The result, at first glance at least, is impressive.

Last year, Beijing published guidelines for tagging content, which said images or videos created using AI must be clearly watermarked. But Jeffrey Ding, an assistant professor at George Washington University who focuses on the technology, said it's an “open question” how the tagging requirements will be implemented in practice, particularly with regard to state propaganda.

And while China's guidelines call for reducing “false” information in AI-generated content, Chinese regulators' priority is to “control the flow of information and make sure that the content being generated is not politically sensitive and does not cause social disruption,” said Ding. This means that when it comes to fake news, “for the Chinese government, what counts as disinformation on the Taiwan front might be very different from a more proper or truthful interpretation of disinformation”.

Experts do not yet believe computer-generated news anchors are effective at deceiving audiences: despite the avatar's best efforts, Tsai's pro-independence party won in Taiwan. Macrina Wang, NewsGuard's deputy news verification editor, said the avatar content she had seen was “pretty crude” but that its volume was increasing. She said the videos were obviously fake to the trained eye, with stilted movement and a lack of shifting light or shadow on the avatar's figure among the giveaways. However, some comments under the TikTok videos show that people have fallen for them.

“There is a risk that the average person thinks [the avatar] is a real person,” she said, adding that AI is making video content “more compelling, clickable and viral.”

Microsoft's Watts said the more likely evolution of the newscaster tactic is footage of a real-life news anchor being manipulated, rather than a fully AI-generated persona. We could see “any mainstream news media anchor being manipulated into saying something they didn't say,” Watts said. That is “far more likely” than a fully synthetic effort.

In their report last month, Microsoft researchers said they had not come across many examples of AI-generated content influencing the offline world.

“Rarely have nation states' uses of generative AI-enabled content achieved much reach across social media, and in only a few cases have we seen any genuine audience deception from such content,” the report said.

Instead, audiences are gravitating towards simple forgeries, such as fake text stories emblazoned with media logos.

Watts said there is a chance that a fully AI-generated video could sway an election, but the tool to create such a clip does not yet exist. “My guess is the tool that will be used with that video … is not on the market yet.” The most effective AI video messenger may not be a newscaster at all. But the trend underlines the importance of video to states trying to sow voter confusion.

Threat actors will also be waiting for an example of an AI-generated video that grabs an audience's attention, and then replicate it. Both OpenAI and Google have demonstrated AI video generators in recent months, though neither has released its tool to the public.

“The most effective use of synthetic personas in videos that people actually watch will happen in the commercial space first. And then you'll see threat actors move in that direction.”

Additional research by Chi Hui Lin