A technology by itself is never enough. For it to be useful, it needs to be accompanied by other elements, such as common understanding, good habits, and acceptance of shared responsibility for its consequences. Without this kind of social support, technologies tend to be used ineffectively or incompletely. A good example is the mRNA vaccines created during the COVID pandemic. They were an amazing medical success, and yet, because they were not widely understood, they didn’t go down as well as they should have. Absent the elements required to bring it usefully into the human world, it might not even be appropriate to call a technology a technology. If we can’t understand how a technology works, we risk succumbing to magical thinking.

Another way of saying this is that we need cartoons in our heads of how technologies work. I don’t know enough about vaccines to make one myself, but I have a cartoon of how a vaccine works, and it is good enough to help me follow the news about vaccines and understand the development process, the risks, and the likely future of the technology. I have similar cartoons about rockets, financial regulation, and nuclear power. They’re not perfect, but they give me enough insight. Even experts use cartoons to talk to each other: sometimes a simplified view of things helps them see the forest for the trees.

At this point, I experience some tension with many in my community of computer scientists. I believe that the cartoons we have broadcast about AI are counterproductive. We have brought artificial intelligence into the world accompanied by ideas that are unhelpful and annoying. Worst of all, perhaps, is the sense of human obsolescence and doom that many of us convey. I have trouble understanding why some of my colleagues say that what they are doing could lead to human extinction, and yet argue that it is still worth doing. It’s hard to understand this way of talking without wondering whether AI is becoming a new kind of religion.

Outside of the apocalyptic atmosphere, we don’t do a good job of explaining what these things are and how they work. Most non-technical people can better understand a thorny abstraction when it’s broken down into concrete pieces you can tell stories about, but in the computer science world that can be a tough sell. We generally prefer to treat AI systems as giant, impenetrable continuities. Perhaps, to some extent, there is a resistance to demystifying what we do because we want to approach it mystically. The usual terminology, starting with the phrase “artificial intelligence” itself, is all about the idea that we are creating new beings rather than new tools. This notion is furthered by biological terms such as “neurons” and “neural networks,” and by anthropomorphizing ones such as “learning” or “training,” which computer scientists use all the time. It’s also a problem that “AI” has no fixed definition. It is always possible to dismiss any specific comment about AI as not addressing some other possible definition of it. The lack of mooring for the term coincides with a metaphysical sensibility according to which the human framework will soon be transcended.

Is there a way to explain AI that isn’t in terms that suggest human obsolescence or replacement? If we can talk about our technology in a different way, maybe a better path to bringing it into society will appear. In “There Is No AI,” an earlier article I wrote for this magazine, I talked about rethinking large-model AI as a form of human cooperation rather than as a new creature on the scene. In this piece, I hope to explain how such AI works by floating above the often mystifying technical details and instead emphasizing how the technology modifies, and depends on, human input. It’s not a primer in computer science but a story about beautiful things in time and space that serves as a metaphor for how we’ve learned to manipulate information in new ways. I find that most people can’t follow the usual stories about how AI works as well as they can follow stories about other technologies. I hope the alternative I present here will be useful.

We can draw our human-centered cartoon about large-model AI in four steps. Each step is simple. But together they add up to something easy to visualize and to use as a thinking tool.

I. The tree

The first step, and in some ways the simplest, may also be the hardest to explain. We can start with a question: How can you use a computer to determine whether a picture shows a cat or a dog? The problem is that cats and dogs look broadly alike. Both have eyes and snouts, tails and paws, four legs and fur. It’s easy for a computer to take measurements of an image, to determine whether it’s lighter or darker, or more blue or more red. But measurements of that kind will not distinguish a cat from a dog. We can ask similar questions about other examples. For instance, how can a program analyze whether a passage was written by William Shakespeare?

At a technical level, the basic answer is a dense tangle of statistics that we call a neural network. But the first thing to understand about this answer is that we are dealing with a technology of complexity. Neural networks, the most basic entry point into AI, are like a folk technology. When researchers say that AI has “emergent properties” (and we say that a lot), it is another way of saying that we didn’t know what the network would do until we tried building it. AI is not the only field that is this way; medicine and economics are similar. In such fields, we try things, and try again, and find techniques that work better. We do not start with a master theory and then use it to calculate an ideal result. Still, we can work with complexity, even if we cannot fully predict it.

Let’s try to imagine how to distinguish a picture of a cat from one of a dog. Digital images are made up of pixels, and we need to do something to move beyond a mere list of them. One approach is to lay a grid over the image that measures a bit more than just color. For example, we can measure the degree to which the colors in each grid square change, so that each square holds a number that can represent the prominence of sharp edges in that patch of the image. A single layer of such measurements would still not distinguish cats from dogs. But we can place a second grid on top of the first, measuring something about the first grid, and then another, and another. We can build a tower of layers, with the bottom layer measuring patches of the image and each subsequent layer measuring the layer below it. This basic idea has been around for almost half a century, but only recently have we found the right ways to make it work well. No one really knows whether there is a better way still.
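The tower of grids can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `patch_contrast` and `build_tower`, the 2x2 patch size, and the tiny 4x4 "image" are all invented here, and the measurement (brightest value minus darkest value in each patch) is a stand-in chosen for simplicity. Real networks use weighted sums whose numbers are adjusted during training rather than a fixed measurement like this one.

```python
def patch_contrast(grid, size=2):
    """Measure how much the values change inside each size x size patch."""
    rows, cols = len(grid), len(grid[0])
    measured = []
    for r in range(0, rows - size + 1, size):
        measured_row = []
        for c in range(0, cols - size + 1, size):
            patch = [grid[r + i][c + j] for i in range(size) for j in range(size)]
            # A large spread between brightest and darkest hints at a sharp edge.
            measured_row.append(max(patch) - min(patch))
        measured.append(measured_row)
    return measured


def build_tower(image, size=2):
    """Stack grids: each new layer measures the layer directly below it."""
    layers = [image]
    while len(layers[-1]) >= size and len(layers[-1][0]) >= size:
        layers.append(patch_contrast(layers[-1], size))
    return layers


# A made-up 4x4 grayscale image with one small bright spot.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 5, 9, 9],
    [0, 0, 9, 9],
]

tower = build_tower(image)
for height, layer in enumerate(tower):
    print(height, layer)
```

Each layer is smaller than the one beneath it, and every number in it depends only on numbers from the layer below, which is the property the tower picture relies on.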

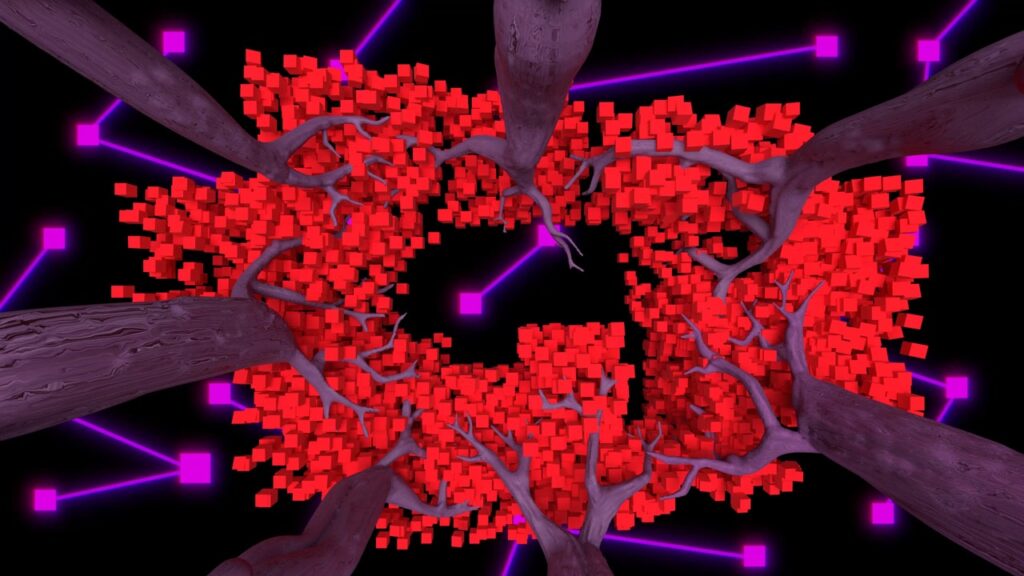

Here I will make my cartoon almost like an illustration in a children’s book. You can think of the tall structure of these grids as the trunk of a great tree growing out of the picture. (The trunk is probably rectangular rather than round, since most pictures are rectangular.) Within the tree, each small square on each grid is decorated with a number. Picture yourself climbing the tree and looking inside with an X-ray as you go up: the numbers you find at the top depend on the numbers below.

Alas, what we have so far won’t be able to tell cats from dogs. But now we can start “training” our tree. (As you know, I dislike the anthropomorphic term “training,” but we’ll let it go.) Imagine that the bottom of our tree is flat, and that you can slide pictures under it. Now take a collection of cat and dog pictures that are clearly and correctly labeled “cat” and “dog” and slide them, one by one, under the bottom layer. Measurements will cascade upward toward the topmost layer of the tree (the canopy layer, if you will, which people in helicopters can see). At first, the results displayed by the canopy won’t be coherent. But we can dive into the tree, with a magic laser, let’s say, and adjust the numbers in its various layers to get a better result. We can boost the numbers that turn out to be most helpful in distinguishing cats from dogs. The process is not straightforward, since changing a number on one layer can cause changes on other layers. Eventually, if we succeed, the numbers on the leaves of the canopy will all be ones when there is a dog in the picture, and all twos when there is a cat.
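The adjusting step can be sketched with a drastically reduced stand-in for the tree. The assumptions are loud ones: instead of a tower there is a single layer holding three adjustable numbers, each picture has already been boiled down to two made-up measurements, and the "magic laser" is plain gradient descent, a common technique that the essay itself does not name. The names `canopy`, `average_error`, and `train` are invented for this sketch. Following the convention above, a reading of 1 means dog and 2 means cat.

```python
# Made-up measurements for six labeled pictures: 1 = dog, 2 = cat.
pictures = [
    ([0.9, 0.2], 1), ([0.8, 0.3], 1), ([0.7, 0.1], 1),
    ([0.2, 0.9], 2), ([0.3, 0.8], 2), ([0.1, 0.7], 2),
]


def canopy(numbers, measurements):
    """Combine a picture's measurements using the adjustable numbers."""
    w0, w1, bias = numbers
    return w0 * measurements[0] + w1 * measurements[1] + bias


def average_error(numbers):
    """Mean squared gap between the canopy reading and the correct label."""
    return sum((canopy(numbers, m) - label) ** 2 for m, label in pictures) / len(pictures)


def train(steps=2000, rate=0.1):
    """Repeatedly nudge each number in the direction that shrinks the error."""
    numbers = [0.0, 0.0, 0.0]
    for _ in range(steps):
        nudges = [0.0, 0.0, 0.0]
        for m, label in pictures:
            miss = canopy(numbers, m) - label
            # Gradient of the squared error with respect to each number.
            for i, x in enumerate((m[0], m[1], 1.0)):
                nudges[i] += 2 * miss * x / len(pictures)
        numbers = [n - rate * g for n, g in zip(numbers, nudges)]
    return numbers


untrained = [0.0, 0.0, 0.0]
trained = train()
readings = [round(canopy(trained, m)) for m, _ in pictures]
print(average_error(untrained), average_error(trained))
print(readings)
```

In a real tower the same kind of nudging is applied to every layer at once, which is why a change in one place ripples through the others; this one-layer sketch leaves that complication out.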

Now, amazingly, we’ve built a tool, a trained tree, that distinguishes cats from dogs. Computer scientists call the grid elements found at each level “neurons” to suggest a connection to biological brains, but the similarity is limited. While biological neurons are sometimes organized into “layers,” such as in the cortex, they are not always. In fact, a cortex has fewer layers than an artificial neural network. With AI, however, it’s been found that adding many layers greatly improves performance, which is why you often see the term “deep,” as in “deep learning”: it means that there are many layers.