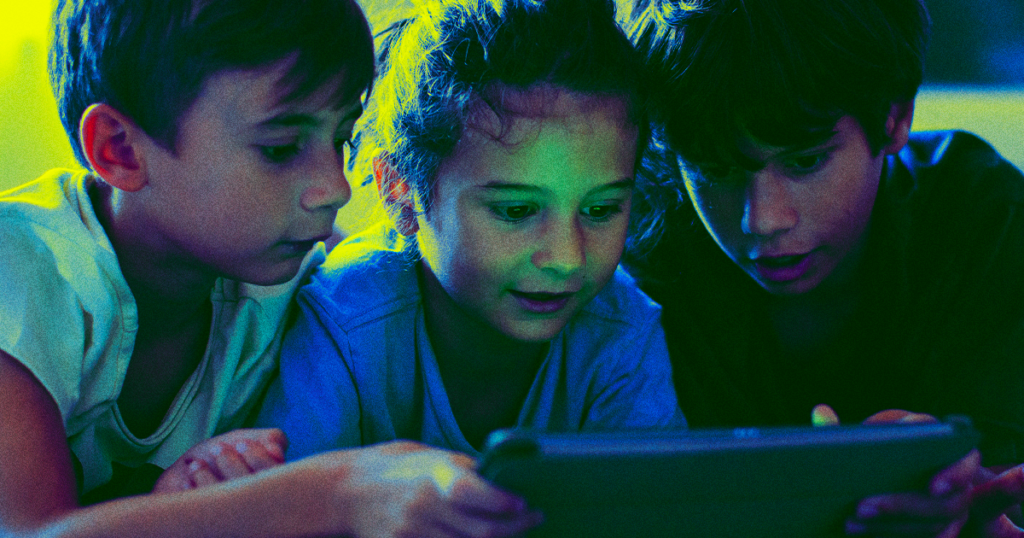

“Young children across the country are slumped in front of iPads being subjected to synthetic runoff.”

Go beyond the limit

The AI hustle bros are back at it. And this time, their get-rich-quick schemes are targeting your kids’ brains.

AI scammers are using generative tools to churn out bizarre YouTube videos for kids, a worrying Wired report shows. The videos are often made in a style similar to that of the addictive hit YouTube and Netflix show Cocomelon, and are rarely marked as AI-generated. And as Wired notes, given how ubiquitous that content and style are, a busy parent might not notice if this AI-spun mush, much of which is already garnering millions of views and subscribers on YouTube, is playing in the background.

In other words, tablet-obsessed toddlers appear to be consuming copious amounts of brain-liquefying AI content, and parents have no clue.

Cocomelon Hell

As Wired reports, a simple YouTube search returns a slew of videos in which AI cash-grabbers teach others how to use a collection of AI programs (OpenAI’s ChatGPT for scripting, ElevenLabs’ voice-generating AI, Adobe Express’ AI suite, and more) to create videos anywhere from a few minutes to an hour long.

Some claim their videos are educational, but the quality varies. It’s also highly unlikely that any of these mass-produced AI videos were made in consultation with child development experts. And if the goal is to make money from AI-generated fever dreams aimed at media-illiterate young children, the “we’re helping them learn!” argument feels very thin.

Per Wired, researchers like Tufts University neuroscientist Erik Hoel worry about how this dark combination of shoddy AI content and prolonged screen time will affect today’s kids.

“All around the nation there are toddlers plopped in front of iPads being subjected to synthetic runoff,” the scientist recently wrote on his Substack, The Intrinsic Perspective. “There’s no other word but dystopian.”

YouTube told Wired that its “fundamental approach” to countering the onslaught of AI-generated content flooding its platform would be to require creators to self-disclose when they’ve created altered or synthetic content that looks realistic. It also reportedly emphasized that it already uses a system of “automated filters, human review, and user feedback” to moderate the YouTube Kids platform.

Clearly, though, a substantial amount of automated children’s content is slipping through the self-reporting cracks. And some experts aren’t convinced that this honor-code approach is cutting it.

“Meaningful human oversight, especially of generative AI, is incredibly important,” Tracy Pizzo Frey, senior AI advisor at the media literacy nonprofit Common Sense Media, told Wired. “This responsibility should not be placed entirely on the shoulders of families.”

More on AI content: OpenAI CTO says it’s releasing Sora this year.