
Credit: Simon Fraser University

Imagine sweeping around an object with your smartphone and getting a realistic, fully editable 3D model that you can view from any angle. This is fast becoming a reality, thanks to advances in AI.

Researchers at Simon Fraser University (SFU) in Canada have unveiled new AI technology to do just that. Soon, instead of only taking 2D photos, everyday users will be able to take 3D captures of real-life objects and edit their shape and appearance, just as they do with regular 2D photos today.

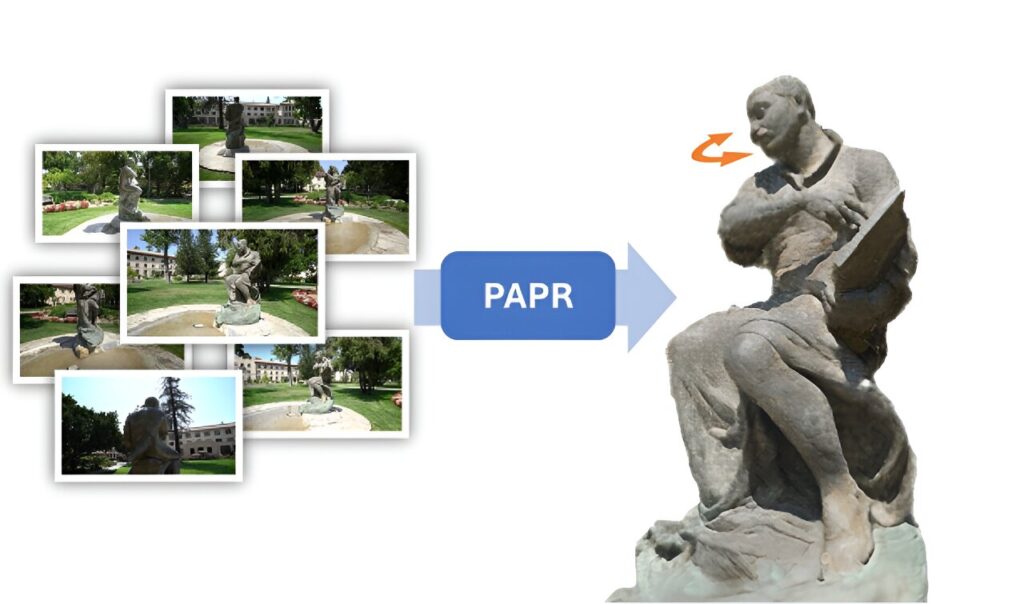

In a new paper posted to the arXiv preprint server and presented at the 2023 Conference on Neural Information Processing Systems (NeurIPS) in New Orleans, Louisiana, researchers demonstrated a new technique called Proximity Attention Point Rendering (PAPR). It can turn a set of 2D photos of an object into a cloud of 3D points that represents the object's shape and appearance.

Each point then gives users a knob for controlling the object: dragging a point changes the object's shape, and editing a point's properties changes the object's appearance. Then, in a process called "rendering," the 3D point cloud can be viewed from any angle and turned into a 2D image that shows the edited object as if it had been photographed from that angle in real life.
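To make the "points as knobs" idea concrete, here is a toy sketch in Python. It is not PAPR: the paper's method learns features for each point and blends nearby points with a proximity-attention mechanism, whereas this sketch simply projects each point through a pinhole camera and lets the nearest point color its pixel (a z-buffer). The names `Point`, `rotate_y` and `render`, and the camera parameters, are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Point:
    xyz: tuple[float, float, float]  # position: moving it edits the shape
    rgb: tuple[float, float, float]  # color: changing it edits the appearance


def rotate_y(p, angle):
    """Rotate a 3D point about the vertical (y) axis by `angle` radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def render(points, angle, width=8, height=8, focal=8.0, cam_dist=4.0):
    """Render the point cloud as seen after turning it by `angle` radians.

    A pinhole camera sits at the origin looking down +z; the scene is pushed
    `cam_dist` units in front of it. The nearest point wins each pixel
    (z-buffer); pixels that no point lands on stay None.
    """
    image = [[None] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for pt in points:
        x, y, z = rotate_y(pt.xyz, angle)
        z += cam_dist
        if z <= 0:  # behind the camera
            continue
        u = int(round(focal * x / z + width / 2))   # column
        v = int(round(-focal * y / z + height / 2))  # row (image y points down)
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z
            image[v][u] = pt.rgb
    return image


# A red point in front of a blue one: from the front we see red, from behind blue.
cloud = [Point((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
         Point((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))]
front = render(cloud, 0.0)
back = render(cloud, math.pi)

cloud[0].xyz = (0.5, 0.0, 0.0)  # "drag" a point: the shape changes
cloud[0].rgb = (0.0, 1.0, 0.0)  # edit its properties: the appearance changes
edited = render(cloud, 0.0)
```

Keeping position and color as separate fields on each point mirrors the article's split between shape edits and appearance edits; a real system would render smooth surfaces rather than isolated pixels.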

Using the new AI technology, the researchers showed how a sculpture could be brought to life: the technology automatically converted a set of photos of the sculpture into a 3D point cloud, which was then animated. The end result is a video of the sculpture turning its head from side to side as the viewer is guided along a path around it.

"AI and machine learning are truly leading to a paradigm shift in the reconstruction of 3D objects from 2D images. The tremendous success of machine learning in fields such as computer vision and natural language is encouraging researchers to explore how traditional 3D graphics pipelines can be re-engineered with the same deep learning-based building blocks that were responsible for the runaway AI success stories of late," said Dr. Ke Li, assistant professor of computer science at Simon Fraser University (SFU), director of the APEX Lab and senior author on the paper.

"It turns out that doing this successfully is much more difficult than we expected and requires overcoming a number of technical challenges. What excites me most is the many possibilities this brings for consumer technology: 3D could become as common a medium for visual communication and expression as 2D is today."

One of the biggest challenges in 3D is how to represent shapes so that users can edit them easily and intuitively. An earlier approach, known as neural radiance fields (NeRFs), does not allow easy shape editing because it requires the user to describe what happens at every continuous coordinate. A more recent approach, known as 3D Gaussian splatting (3DGS), is also poorly suited to shape editing because the shape's surface can crack or break apart after an edit.

More information:

Yanshu Zhang et al., PAPR: Proximity Attention Point Rendering, arXiv (2023). DOI: 10.48550/arxiv.2307.11086