“I've had this idea for a long time,” says Demis Hassabis, head of Google DeepMind and leader of Google's AI efforts. Hassabis has been thinking about and working on AI for decades, but four or five years ago, something really crystallized. One day soon, he realized, “we'll have this universal assistant. It's multimodal, it's with you all the time.” Call it the Star Trek communicator; call it the voice from Her; call it what you will. “It's that helper,” Hassabis continues, “that's just useful. You get used to it being there whenever you need it.”

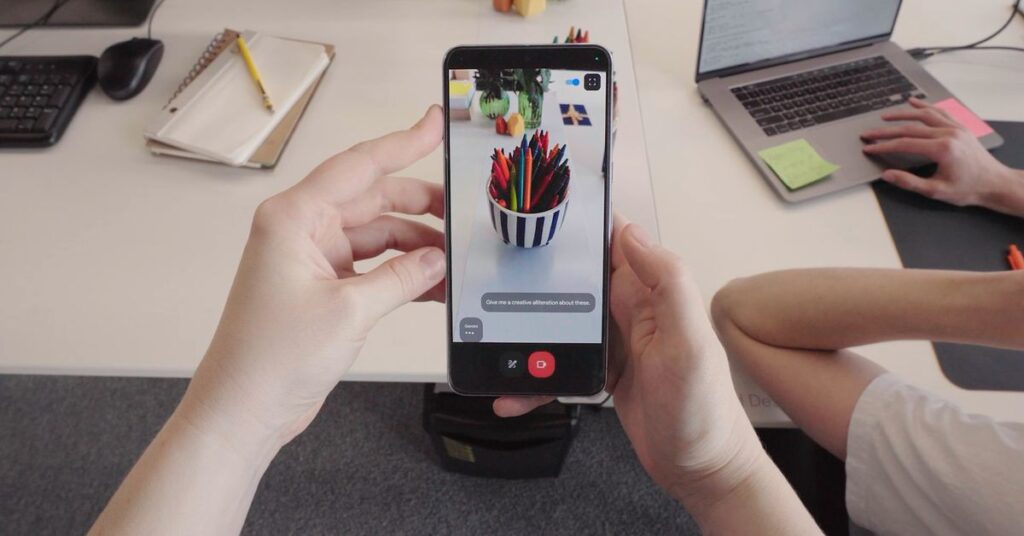

At Google I/O, the company's annual developer conference, Hassabis showed off a very early version of what he hopes will become that universal assistant. Google calls it Project Astra, and it's a real-time, multimodal AI assistant that can see the world, knows what things are and where you left them, and can answer questions or help you do almost anything. In an incredibly impressive demo video, which Hassabis swears is not faked or doctored in any way, an Astra user at Google's London office asks the system to identify a part of a speaker, find their missing glasses, review code, and more. All of this works in virtually real time and in a very conversational manner.

Astra is just one of many Gemini announcements at this year's I/O. There's a new model called Gemini 1.5 Flash, designed to be faster for common tasks like summarizing and captioning. Another new model, called Veo, can create video from a text prompt. Gemini Nano, a model designed to run locally on devices like your phone, is said to be faster than ever. The context window for Gemini Pro, which refers to how much information the model can consider in a given query, is doubling to 2 million tokens, and Google says the model will follow instructions better than before. Google is making rapid progress both on the models themselves and on bringing them to consumers.

Going forward, Hassabis says, the story of AI will be less about the models themselves and more about what they can do for you. And that story is all about agents: bots that don't just talk to you, but actually get things done on your behalf. “Our history in agents is longer than our typical model work,” he says, pointing to the game-playing AlphaGo system from nearly a decade ago. Some of these agents, he envisions, will be very simple tools for performing tasks, while others will be more like assistants and companions. “I think it can sometimes come down to personal preference,” he says, “and understanding your context.”

Hassabis says Astra is much closer than previous products to the way a true real-time AI assistant should work. When Gemini 1.5 Pro, the latest version of Google's mainstream large language model, was rolled out, Hassabis says he knew the underlying technology was good enough for something like Astra to start working well. But the model is only part of the product. “We had the components six months ago,” he says, “but the only problem was speed and latency. Without that, the usability isn't there.” So speeding up the system has been one of the team's most important tasks for the past six months. That meant improving the model, but also optimizing the rest of the infrastructure to work well and at scale. Fortunately, Hassabis says with a laugh, “That's what Google does so well!”

Many of Google's AI announcements at I/O are about giving you more and easier ways to use Gemini. A new product called Gemini Live is a voice-only assistant that lets you have a back-and-forth conversation with the model, interrupting it when it runs long or calling back to earlier parts of the conversation. A new feature in Google Lens lets you search the web by shooting a video and narrating it. Much of this is enabled by Gemini's large context window, which means it can access a lot of information at once, and Hassabis says that is essential to making communication with your assistant feel normal and natural.

Know who agrees with that assessment, by the way? OpenAI, which has been talking about AI agents for some time. In fact, the company demoed a product similar to Gemini Live barely an hour after Hassabis and I spoke. The two companies are increasingly fighting for the same territory, and they seem to share a vision of how AI can change your life and how you might use it over time.

How exactly will those assistants work, and how will you use them? No one knows for sure, not even Hassabis. One thing Google is focusing on right now is trip planning: it has built a new tool that uses Gemini to create an itinerary for your vacation, which you can then edit together with the assistant. Eventually there will be many more features like this. Hassabis says he's bullish on phones and glasses as key devices for these agents, but also says there's “potentially room for some interesting form factors.” Astra is still in the early prototype stage and represents only one way you might interact with a system like Gemini. The DeepMind team is still researching how best to bring multimodal models together and how to balance very large general models with smaller, more focused ones.

We're still very much in the “speeds and feeds” era of AI, in which every incremental model matters and we obsess over parameter sizes. But pretty soon, at least according to Hassabis, we're going to start asking different questions about AI. Better questions. Questions about what these assistants can do, how they do it, and how they can improve our lives. Because the tech is far from perfect, but it's getting better really fast.