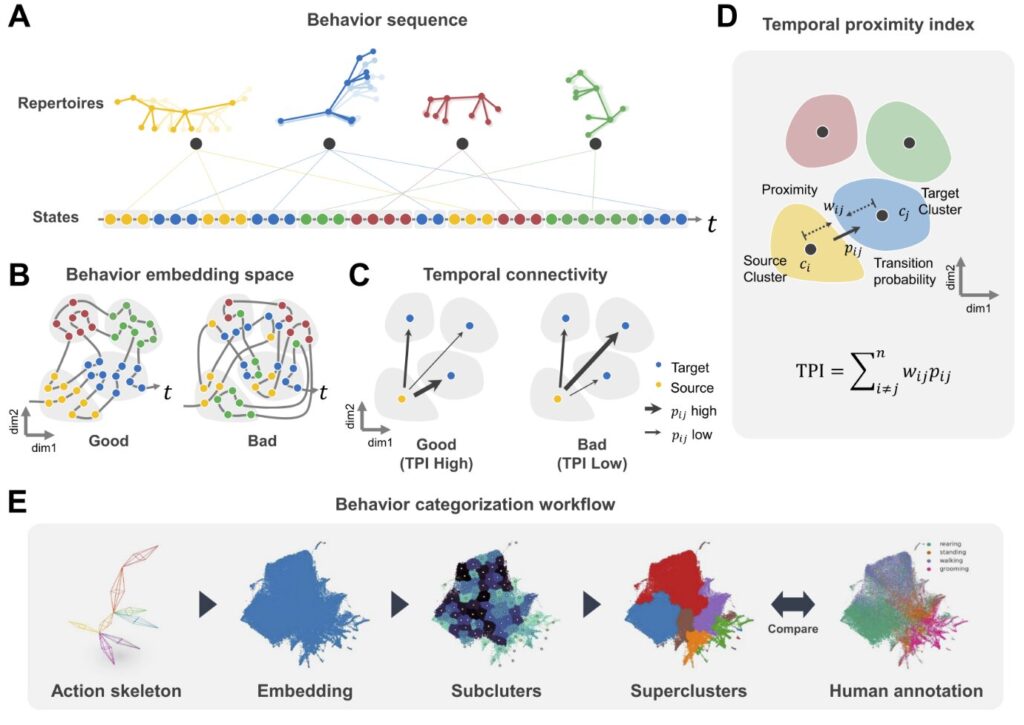

A new behavioral embedding evaluation metric: TPI (Temporal Proximity Index). (A) Motion of a mouse's 3D action skeleton over time, with each color representing a standard behavioral repertoire. (C) The quality of temporal connectivity can be estimated by summing, over pairs of clusters, the product of the transition probability between the clusters and the distance between them (TPI). (Left) Frequent transitions to nearby clusters indicate good temporal connectivity. (Right) Few transitions to nearby clusters indicate poor temporal connectivity. (E) Workflow for unsupervised animal behavior analysis. Credit: International Journal of Computer Vision (2024). DOI: 10.1007/s11263-024-02072-0

Animal behavior analysis is a fundamental tool in a variety of studies, from basic neuroscience research to understanding the causes and treatments of diseases. It is widely applied not only in biological research but also in various industrial fields including robotics.

Recently, efforts have been made to analyze animal behavior accurately using AI technology. However, AI is still limited in its ability to recognize different behaviors as intuitively as human observers do.

Traditional animal behavior research mainly involves filming animals with a camera and analyzing low-dimensional data such as the timing and frequency of specific movements. These analysis methods supply the AI with the correct result for each piece of training data, which is akin to handing the AI a set of questions together with the answer key.

Although this method is straightforward, it requires time- and labor-intensive human supervision to generate data. Observer bias is also a factor, as the results of the analysis can be distorted by the subjective judgment of the experimenter.

To address these limitations, a joint research team led by C. Justin Lee, director of the Center for Cognition and Sociality within the Institute for Basic Science (IBS), and Cha Meeyoung, chief investigator (CI) of the Data Science Group at the IBS Center for Mathematical and Computational Sciences (also a professor at KAIST's School of Computing), has developed a new analysis tool called SUBTLE (Spectrogram-UMAP-based Temporal-Link Embedding). SUBTLE classifies and analyzes animal behavior through AI learning based on 3D movement information.

The paper is published in the International Journal of Computer Vision.

First, the research team recorded the mice's movements using multiple cameras, extracting the coordinates of nine key points such as the head, legs and hips to obtain 3D action skeleton movement data over time.
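As a rough illustration of what such skeleton data can look like, the sketch below stores each frame as a list of nine (x, y, z) key points and derives one simple kinematic feature, the speed of every key point between consecutive frames. The data layout, the function name `keypoint_speeds`, and the 30 frames-per-second rate are assumptions made for this example; they are not taken from the paper or from AVATAR3D.

```python
import math

def keypoint_speeds(frames, fps):
    """Speed of every key point between consecutive frames.

    frames[t][k] is the (x, y, z) position of key point k at frame t
    (hypothetical layout). Returns one list of speeds per frame transition,
    in coordinate units per second.
    """
    speeds = []
    for prev, curr in zip(frames, frames[1:]):
        speeds.append([math.dist(p, c) * fps for p, c in zip(prev, curr)])
    return speeds

# Toy data: nine key points that all move by (3, 4, 0) in one frame.
frame0 = [(float(k), 0.0, 0.0) for k in range(9)]
frame1 = [(k + 3.0, 4.0, 0.0) for k in range(9)]
speeds = keypoint_speeds([frame0, frame1], fps=30)  # fps is an assumed value
print(speeds[0][0])  # 150.0 (a displacement of 5 units per frame at 30 fps)
```

Real recordings would contain many thousands of frames, and features such as joint angles or body-axis velocity could be computed in the same frame-by-frame fashion.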

They then reduced this time-series data to two dimensions through embedding, a process that creates a set of vectors corresponding to each piece of data so that complex data can be represented more concisely and meaningfully.

Schematic of the SUBTLE framework. (A) Process of obtaining and analyzing 3D coordinates of key points from mouse movements. The left side shows the extraction of raw 3D coordinates of mouse movements using AVATAR3D, while the right side shows the processing and analysis of the 3D coordinate data obtained from AVATAR3D: 1) Obtain raw 3D key point coordinates. 2) Draw a 3D action skeleton using an avatar. 3) Extract kinematic features and a wavelet spectrogram from the key point coordinates. 4) Perform nonlinear embedding with the t-SNE and UMAP algorithms; the UMAP-based embedding developed in this study is called SUBTLE. (B) Nonlinear mapping results, showing the embeddings obtained with t-SNE and UMAP as the number of clusters (k) increases. t-SNE produces a tangled, thread-like shape over time, while UMAP produces a temporally well-aligned, grid-like shape. Additionally, UMAP consistently achieves higher TPI scores than t-SNE across all cluster numbers. Credit: International Journal of Computer Vision (2024). DOI: 10.1007/s11263-024-02072-0
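To give a feel for the wavelet spectrogram mentioned in step 3 of the caption, here is a minimal pure-Python sketch that computes the power of a one-dimensional signal at a few frequencies by direct convolution with a complex Morlet wavelet. The choice of the Morlet wavelet, the parameter `w0 = 6.0`, the sampling rate, and the test frequencies are all assumptions for illustration; the article does not state which wavelet or settings SUBTLE uses.

```python
import cmath
import math

def morlet_power(signal, fs, freqs, w0=6.0):
    """Wavelet power, indexed as power[frequency_index][sample_index].

    Uses a complex Morlet wavelet (an assumed choice) truncated at
    +/- 4 scale widths and normalized by the sum of its absolute values.
    """
    n = len(signal)
    power = []
    for f in freqs:
        scale = w0 / (2 * math.pi * f)        # width in seconds for center frequency f
        half = int(math.ceil(4 * scale * fs))  # half-length of the kernel in samples
        kernel = []
        for k in range(-half, half + 1):
            u = (k / fs) / scale
            kernel.append(cmath.exp(1j * w0 * u) * math.exp(-0.5 * u * u))
        norm = sum(abs(c) for c in kernel)
        row = []
        for i in range(n):
            acc = 0j
            for k in range(-half, half + 1):
                j = i + k
                if 0 <= j < n:  # samples outside the signal are treated as zero
                    acc += signal[j] * kernel[k + half].conjugate()
            row.append(abs(acc / norm) ** 2)
        power.append(row)
    return power

# Toy signal: a 5 Hz oscillation sampled at 100 Hz for 2 seconds.
fs = 100
signal = [math.sin(2 * math.pi * 5 * t / fs) for t in range(200)]
freqs = [2.0, 5.0, 12.0]
power = morlet_power(signal, fs, freqs)
mid = len(signal) // 2
peak_index = max(range(len(freqs)), key=lambda i: power[i][mid])
print(freqs[peak_index])  # 5.0
```

In a pipeline like the one in the caption, a spectrogram of this kind would be computed for each kinematic feature and the resulting values stacked into one feature vector per frame before the nonlinear embedding step.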

Next, the researchers clustered similar behavioral states into sub-clusters and grouped these sub-clusters into super-clusters that represent standard behavioral patterns (repertoires), such as walking, standing, and grooming.

In the process, they proposed a new metric for evaluating behavioral data clusters called the Temporal Proximity Index (TPI). This metric measures whether each cluster contains the same behavioral state and effectively represents temporal dynamics, as humans consider temporal information important when classifying behavior.
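The two ingredients named in the figure caption, transition probabilities between clusters and distances between clusters, can be combined in a few lines. The sketch below computes the average distance covered by a cluster-to-cluster transition in the embedding, weighting each centroid distance by its transition probability. It is an illustrative quantity only, not the published TPI: the exact formula, its normalization, and its orientation (the caption reports that higher TPI is better, whereas a smaller value here means transitions go to nearby clusters) should be taken from the paper. All function names are hypothetical.

```python
import math
from collections import defaultdict

def transition_probabilities(labels):
    """Row-normalized counts of transitions between different consecutive labels."""
    counts = defaultdict(lambda: defaultdict(int))
    for a, b in zip(labels, labels[1:]):
        if a != b:  # frames that stay in the same cluster are ignored
            counts[a][b] += 1
    return {a: {b: c / sum(row.values()) for b, c in row.items()}
            for a, row in counts.items()}

def centroids(points, labels):
    """Mean 2D position of the embedded points in each cluster."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for (x, y), lab in zip(points, labels):
        s = sums[lab]
        s[0] += x
        s[1] += y
        s[2] += 1
    return {lab: (sx / n, sy / n) for lab, (sx, sy, n) in sums.items()}

def mean_transition_distance(points, labels):
    """Probability-weighted centroid distance, averaged over source clusters.

    Assumption: an illustrative stand-in, not the paper's TPI formula.
    """
    probs = transition_probabilities(labels)
    if not probs:
        return 0.0
    cents = centroids(points, labels)
    total = sum(p * math.dist(cents[a], cents[b])
                for a, row in probs.items() for b, p in row.items())
    return total / len(probs)

# Toy embedding: cluster 0 at x=0, cluster 1 at x=1, cluster 2 at x=10.
position = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (10.0, 0.0)}
near_labels = [0, 0, 1, 1, 0, 0, 1, 1]  # transitions only between neighbors
far_labels = [0, 0, 2, 2, 0, 0, 2, 2]   # transitions only between distant clusters
near_score = mean_transition_distance([position[l] for l in near_labels], near_labels)
far_score = mean_transition_distance([position[l] for l in far_labels], far_labels)
print(near_score, far_score)  # 1.0 10.0
```

In the toy data, the sequence that hops between neighboring clusters scores 1.0 and the sequence that jumps across the embedding scores 10.0, which mirrors the "good" and "poor" temporal connectivity cases described in the caption.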

CI Cha Meeyoung said, “The introduction of new evaluation metrics and benchmark data to support the automation of animal behavior classification is the result of a collaboration between neuroscience and data science. We expect this algorithm to be beneficial in various industries requiring behavioral pattern recognition, including the robotics industry, which aims to mimic animal movements.”

“We have developed an efficient behavior analysis framework that minimizes human intervention while applying human behavior pattern recognition mechanisms to understand complex animal behavior,” said Director C. Justin Lee, who led the research. “It can also serve as a tool for gaining deeper insights into the principles of behavioral recognition in the brain.”

Additionally, in April last year the research team transferred the SUBTLE technology to Actnova, a company that specializes in AI-based clinical and non-clinical behavioral test analysis. The team used Actnova's animal behavior analysis system, AVATAR3D, to capture the 3D animal movement data for the study.

The research team has also made SUBTLE's code open source, and a user-friendly graphical user interface (GUI) is available through the SUBTLE web service to facilitate the analysis of animal behavior for researchers who are not familiar with programming.

More information:

Jea Kwon et al, SUBTLE: An unsupervised platform with temporal link embedding that maps animal behavior, International Journal of Computer Vision (2024). DOI: 10.1007/s11263-024-02072-0