A major problem with text-to-image generators is their ability to duplicate the original works used for training, thereby infringing the artist's copyright. Under US law, if you create an original work and 'fix' it in tangible form, you own its copyright – literally, the right to copy it. A copyrighted image cannot, in most cases, be used without permission from the creator.

In May, Google's parent company, Alphabet, faced a class-action copyright lawsuit from a group of artists who claim it used their work without permission to train its AI-powered image generator, Imagen. Stability AI, Midjourney and DeviantArt, all of which use Stability's Stable Diffusion tool, are facing similar suits.

To avoid this problem, researchers at the University of Texas (UT) at Austin and the University of California (UC), Berkeley, have developed a diffusion-based generative AI framework that is trained only on images that have been corrupted beyond recognition, removing the likelihood that the AI will memorize and replicate an original work.

Diffusion models are advanced machine learning algorithms that generate high-quality data by gradually adding noise to training data and then learning to reverse the process. Recent studies show that these models can memorize examples from their training set, which has clear implications for privacy, security and copyright. Here's an example that isn't related to artwork: an AI may need to be trained on X-ray scans without memorizing images of specific patients, which would violate patient privacy. To avoid this, model makers can introduce image corruption.
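
To make the "adding noise" half of that description concrete, here is a minimal sketch of one common way to noise data step by step (a DDPM-style linear schedule). It is an illustration only and not necessarily the formulation the researchers use; the function names and schedule values are assumptions, and the learned reverse (denoising) step, which requires a trained neural network, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (assumed, illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def signal_fraction(betas, t):
    """Cumulative product of (1 - beta) up to and including step t.

    Near 1 means the data is almost untouched; near 0 means almost pure noise.
    """
    alpha_bar = 1.0
    for beta in betas[: t + 1]:
        alpha_bar *= 1.0 - beta
    return alpha_bar


def add_noise(pixels, betas, t, rng):
    """Noise a flat list of pixel values to step t in one shot."""
    alpha_bar = signal_fraction(betas, t)
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in pixels]


if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_beta_schedule(1000)
    pixels = [0.5, -0.25, 1.0, 0.0]  # a toy "image" scaled to roughly [-1, 1]
    for t in (0, 250, 999):
        print(t, round(signal_fraction(betas, t), 5), add_noise(pixels, betas, t, rng))
```

Under this assumed schedule, early steps leave the data nearly intact, while the final step retains almost none of the original signal. A diffusion model is trained to undo that progression.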

With their Ambient Diffusion framework, the researchers demonstrated that a diffusion model can be trained to produce high-quality images using only highly corrupted samples.

Daras et al.

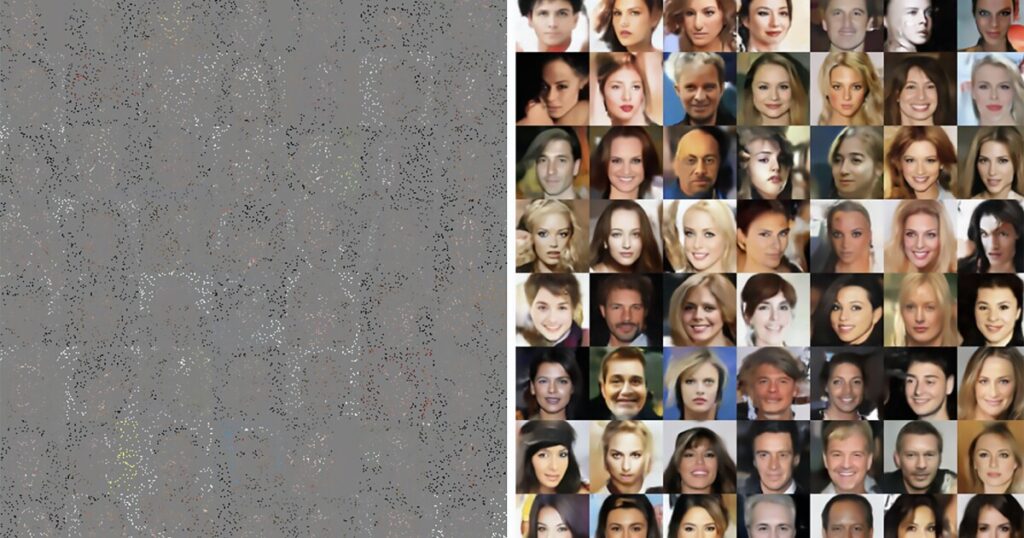

The image above shows the difference in image output when corruption is used. The researchers first trained their model with 3,000 'clean' images from CelebA-HQ, a database of high-quality images of celebrities. When prompted, the model produced images nearly identical to the originals (left panel). Then, they retrained the model using 3,000 highly corrupted images, where up to 90% of individual pixels were randomly masked. While the model still produced lifelike human faces, the results were far less similar to the originals (right panel).
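
The random masking described above can be sketched as follows. This is an illustrative assumption about what "randomly masked" means at the pixel level (each pixel is dropped independently with a fixed probability), not the researchers' actual preprocessing code; `mask_pixels` and its parameters are hypothetical names.

```python
import random


def mask_pixels(image, mask_prob=0.9, seed=None):
    """Zero out each pixel independently with probability mask_prob.

    `image` is a list of rows of pixel values. Returns (corrupted, mask),
    where mask[r][c] is True for pixels that survived. The input is not modified.
    """
    rng = random.Random(seed)
    corrupted, mask = [], []
    for row in image:
        keep_row = [rng.random() >= mask_prob for _ in row]
        mask.append(keep_row)
        corrupted.append([p if keep else 0 for p, keep in zip(row, keep_row)])
    return corrupted, mask


if __name__ == "__main__":
    # A toy 100x100 all-white grayscale image.
    image = [[255] * 100 for _ in range(100)]
    corrupted, mask = mask_pixels(image, mask_prob=0.9, seed=0)
    kept = sum(sum(1 for keep in row if keep) for row in mask)
    print(f"pixels kept: {kept} of {100 * 100}")
```

With a 90% masking probability, only about one pixel in ten survives, so no single training image reveals much of the original to the model.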

“The framework could also be useful for scientific and medical applications,” said Adam Klivans, professor of computer science at UT Austin and co-author of the study. “This would be essentially true for any research where it would be expensive or impossible to obtain a complete set of uncorrupted data, from black hole imaging to certain types of MRI scans.”

As with current text-to-image generators, the results aren't perfect every time. The point is that artists can take some comfort in knowing that a model like Ambient Diffusion will not memorize and duplicate their original works. Will this prevent other AI models from memorizing and duplicating their original images? No, but that's what courts are for.

The researchers have made their code and ambient diffusion model open source to encourage further research. It is available on GitHub.

The study was published on the preprint website arXiv.

Source: UT Austin