Image credit: Shy Kids

OpenAI's video generation tool Sora took the AI community by surprise in February with realistic video that looks miles ahead of competitors. But the carefully staged debut left out a lot of details, and a filmmaker given early access to create a short using Sora has now filled some of them in.

Shy Kids is a digital production team based in Toronto that OpenAI selected as one of a few to create short films, primarily for OpenAI's promotional purposes, although they were given considerable creative freedom in creating “Air Head.” In an interview with visual effects news outlet fxguide, post-production artist Patrick Cederberg described “actually using Sora” as part of his work.

Perhaps the most important point for most people is this: while OpenAI's post featuring the shorts might lead readers to believe that they sprang more or less fully formed from Sora, the reality is that these were professional productions, complete with robust storyboarding, editing, color correction, and post work such as rotoscoping and VFX. Just as Apple says “shot on iPhone” but doesn't show the studio setup, professional lighting, and color work after the fact, the Sora post only talks about what the tool lets people do, not how they actually did it.

Cederberg's interview is interesting and fairly non-technical, so if you're at all curious, head over to fxguide and read it. But here are some nuggets about using Sora that suggest that, as impressive as it is, the model may be less of a leap than we thought.

“Control is still the thing that is most desirable and also the most elusive at this point. … The closest we could get was just to be very descriptive in our prompts. Defining the wardrobe for the characters, as well as the balloon type, was our approach to consistency since there is no feature yet for full control over shot-to-shot / generation-to-generation consistency.”

In other words, matters that are simple in traditional filmmaking, such as choosing the color of a character's clothing, require extensive work and scrutiny in a generative system, as each shot is created independently of the others. This can obviously change, but at the moment it's definitely a lot of work.

Sora's outputs also had to be watched for unwanted elements: Cederberg described how the model would routinely generate a face on the balloon that the main character has for a head, or a string hanging down the front. These had to be removed in post, another time-consuming process, if the team couldn't get the prompt to exclude them.

Precise timing and movement of the characters or the camera isn't really possible: “There's a little bit of temporal control over where these different movements happen in the actual generation, but it's not precise … it's like a shot in the dark,” said Cederberg.

For example, timing a gesture like a wave is a very approximate, suggestion-driven process, unlike in manual animation. And a shot like an upward pan on the character's body may or may not reflect what the filmmaker wants, so in this case the team rendered a shot in portrait orientation and did a crop pan in post. The generated clips were also often in slow motion for no particular reason.

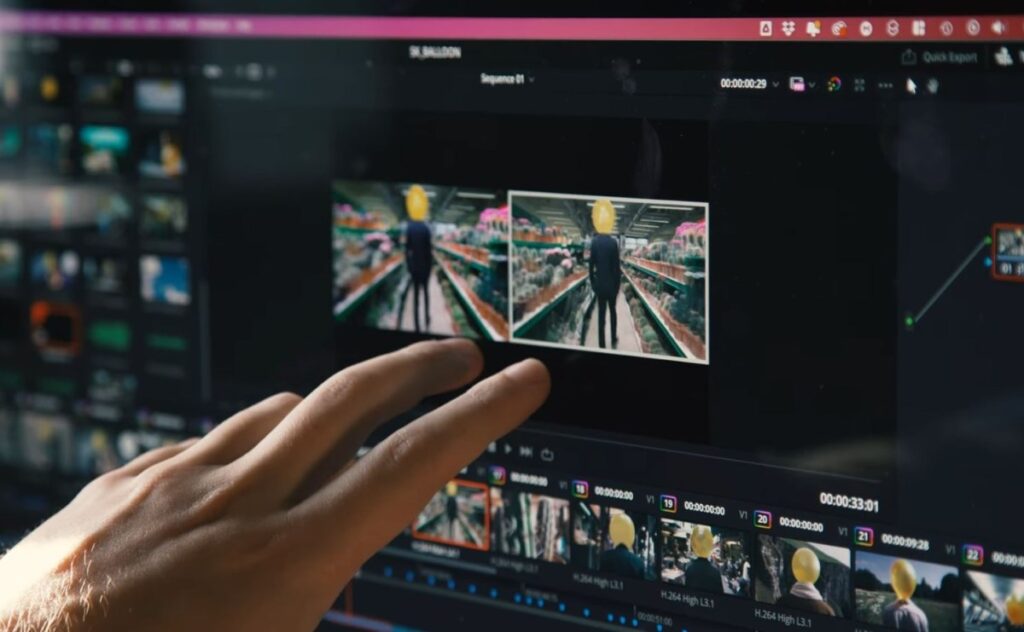

An example of a shot as it came out of Sora and how it ended up in the short. Image credit: Shy Kids

In fact, everyday filmmaking language such as “panning right” or “tracking shot” produced generally inconsistent results, Cederberg said, which the team found quite surprising.

“The researchers, before they approached the artists to play with this tool, weren't really thinking like filmmakers,” he said.

As a result, the team did hundreds of generations, each 10 to 20 seconds long, and ended up using only a handful. Cederberg estimated the ratio at 300:1, but of course we would probably all be surprised at the ratio on an ordinary shoot.

The team actually made a little behind-the-scenes video explaining some of the issues, if you're curious. As with a lot of AI-related content, the comments are pretty critical of the whole effort, much like the reaction to the AI-powered ad we saw recently.

The last interesting wrinkle concerns copyright: if you ask Sora to give you a “Star Wars” clip, it will refuse. And if you try to get around it with “marauding guy with a laser sword on a retro-futuristic spaceship,” it will also refuse, as it recognizes through some mechanism what you are trying to do. It also refused to do an “Aronofsky type shot” or a “Hitchcock zoom.”

On the one hand, this makes perfect sense. But it raises the question: if Sora knows what these are, does that mean the model was trained on that material, the better to recognize that a request is infringing? OpenAI, which keeps its training data cards close to the vest (to the point of absurdity, as CTO Mira Murati's interview with Joanna Stern showed), will almost certainly never tell us.

As for Sora and its use in filmmaking, it's clearly a powerful and useful tool in its place, but its place is not “creating films out of whole cloth.” Yet. As another villain once famously said, “That comes later.”