Whether you see AI as an incredible tool with massive benefits or a social disease that only benefits big tech, a powerful new chip can train it faster than ever before. Cerebras Systems has unveiled what it calls the world’s fastest AI chip, the Wafer Scale Engine 3 (WSE-3), which powers the Cerebras CS-3 AI supercomputer with a peak performance of 125 petaFLOPS. And it’s scalable to an insane degree.

Before an AI system can create a cute but unusual video of a cat owner waking up, it needs to be trained on a frankly staggering amount of data, consuming the energy of more than 100 households in the process. The new chip, and the computers built with it, should help speed up that process and make it more efficient.

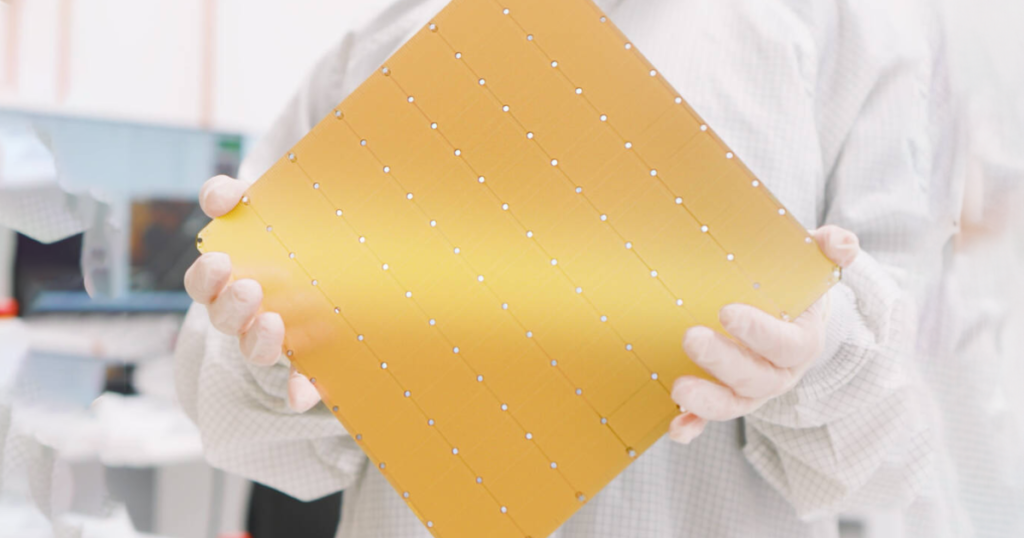

Each WSE-3 chip, about the size of a pizza box, packs a punch: its four trillion transistors deliver twice the performance of the company’s previous model (which also held the previous world record) for the same price and power draw. When bundled into a CS-3 system, they can apparently deliver the performance of a room full of servers in a unit the size of a mini-fridge.

Cerebras says the CS-3 packs 900,000 AI cores and 44 GB of on-chip SRAM, delivering peak AI performance of up to 125 petaFLOPS. In theory, that should be powerful enough to place it among the world’s top 10 supercomputers, although it hasn’t been tested against those benchmarks, so we can’t be sure how well it would actually perform.

To store all that data, external memory options include 1.5 TB, 12 TB, or a massive 1.2 petabytes (PB), which is 1,200 TB. The CS-3 can train AI models with up to 24 trillion parameters. For comparison, most AI models currently have billions of parameters, and GPT-4 is estimated at around 1.8 trillion. Cerebras says the CS-3 should be able to train a trillion-parameter model as easily as current GPU-based computers train a billion-parameter model.
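To put those memory options in perspective, here’s a rough back-of-the-envelope sketch in Python. It assumes mixed-precision training with the Adam optimizer at a commonly cited rule of thumb of about 16 bytes of weights, gradients and optimizer state per parameter, and it ignores activations and other overheads. These are our assumptions, not figures from Cerebras.

```python
# Rough estimate of the memory needed to hold the training state of a
# 24-trillion-parameter model.
# ASSUMPTION (not from Cerebras): mixed-precision training with Adam, ~16 bytes per parameter:
#   2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
#   + 4 (Adam first moment) + 4 (Adam second moment)
# Activations and other overheads are ignored.
TB = 10**12

params = 24 * 10**12                 # 24 trillion parameters, as quoted
bytes_per_param = 2 + 2 + 4 + 4 + 4  # = 16 bytes under the assumption above

training_state_tb = params * bytes_per_param / TB
largest_memory_option_tb = 1_200     # the 1.2 PB external memory option, in TB

print(f"Training state: ~{training_state_tb:.0f} TB")
print(f"Fits in the 1.2 PB option: {training_state_tb <= largest_memory_option_tb}")
```

Under these assumptions, a 24-trillion-parameter model needs roughly 384 TB just for its training state, which is far beyond the 1.5 TB and 12 TB options but comfortably within the 1.2 PB configuration.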

Thanks to the wafer production process of the WSE-3 chips, the CS-3 is designed to be scalable, allowing up to 2,048 units to be clustered into a single, almost incomprehensibly large supercomputer. That would be capable of up to 256 exaFLOPS, whereas the world’s top supercomputers currently manage just over one exaFLOP. The company claims that this kind of power would allow it to train a Llama 70B model from scratch in just one day.
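The 256-exaFLOPS figure is simply the per-system peak multiplied by the maximum cluster size, as this quick Python check shows. One caveat: peak AI throughput is typically quoted at lower numeric precision than the double-precision Linpack results used to rank the top supercomputers, so the two numbers aren’t a like-for-like comparison.

```python
# Quick check of Cerebras' quoted cluster figure:
# 2,048 CS-3 systems at a claimed peak of 125 petaFLOPS each.
PETA = 10**15
EXA = 10**18

cs3_peak_flops = 125 * PETA   # claimed peak AI performance of one CS-3
max_systems = 2048            # maximum cluster size quoted by Cerebras

# Peak figures scale linearly with system count (real workloads rarely do).
cluster_peak_flops = cs3_peak_flops * max_systems
cluster_peak_exaflops = cluster_peak_flops / EXA

print(f"Cluster peak: {cluster_peak_exaflops:.0f} exaFLOPS")
```

Running it prints `Cluster peak: 256 exaFLOPS`, matching the company’s headline number.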

It already feels like AI models are advancing at an alarming rate, but this kind of tech is only going to crank the firehose even higher. No matter what you do, it looks like AI systems will be coming for your job, and even your hobbies, faster than ever.

Source: Cerebras [1],[2]