- Meta’s AI image generator, Imagine, has been accused of racial bias.

- The tool was unable to produce images of an Asian man with a white woman.

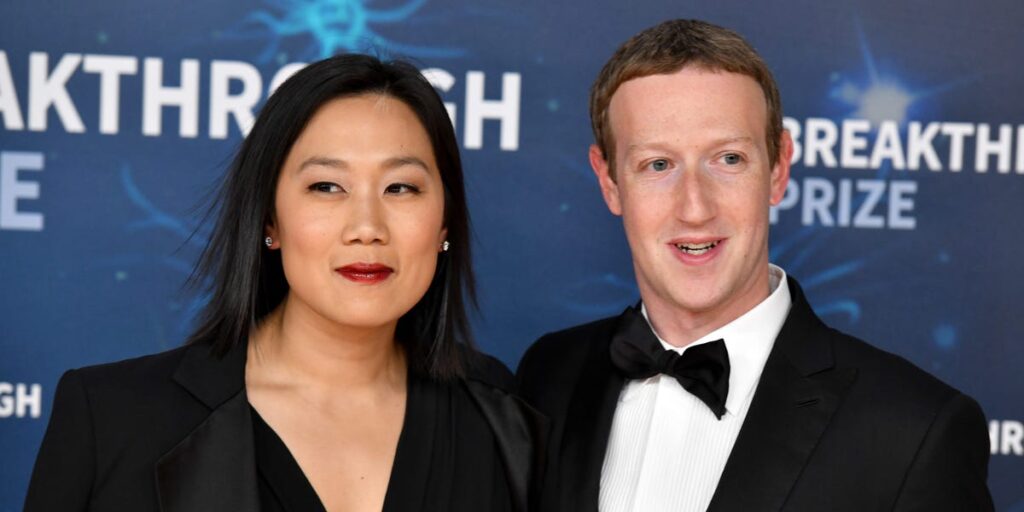

- The apparent bias is surprising because Meta CEO Mark Zuckerberg is married to a woman of East Asian heritage.

Meta’s AI image generator has been accused of racial bias after users discovered it failed to produce an image of an Asian man with a white woman.

Meta's AI-powered image generator, Imagine, was launched late last year. It can take almost any written prompt and instantly turn it into a realistic image.

But users found that the AI was unable to generate images of mixed-race couples. When Business Insider asked the tool to produce a picture of an Asian man with his white wife, it showed only pictures of Asian couples.

The AI's apparent bias is surprising since Meta CEO Mark Zuckerberg is married to a woman of East Asian heritage.

Priscilla Chan, the daughter of Chinese immigrants to America, met Zuckerberg while studying at Harvard. The couple got married in 2012.

Some users took to X to share photos of Zuckerberg and Chan, joking that they had successfully created images using Imagine.

The Verge first reported the problem on Wednesday, when reporter Mia Sato said she had tried "dozens of times" to create images of Asian men and women with white partners and friends.

Sato said that the image generator returned an accurate image of the races described in her prompt only once.

Meta did not immediately respond to a request for comment from BI, which was made outside normal business hours.

Meta is by no means the first major tech company to be blasted for “racist” AI.

In February, Google was forced to halt its Gemini image generator after users discovered it was generating historically inaccurate images.

Users found that the image generator would produce images of Asian Nazis in 1940s Germany, Black Vikings, and even female medieval knights.

The tech company was accused of being overly “woke” as a result.

At the time, Google said: "Gemini's AI image generation does generate a wide range of people. And that's generally a good thing because people around the world use it. But it's missing the mark here."

But AI’s racial biases have long been a concern.

"When predictive algorithms or so-called 'AI' are so widely used, it can be difficult to recognize that these predictions are often based on little more than rapid regurgitation of crowdsourced opinions, stereotypes, or lies," AI ethics expert Dr. Nakeema Stefflbauer, CEO of the women-in-tech network FrauenLoop, previously told Business Insider.

"Algorithmic predictions are stereotyping and unfairly targeting individuals and communities based on data from Reddit," she said.

Generative AI tools like Gemini and Imagine are trained on vast amounts of data drawn from society at large.

If the data used to train a model contains relatively few images of mixed-race couples, that could explain why the AI struggles to generate these kinds of images.